The booming e-commerce sector in China, home to some of the world's largest platforms such as Alibaba's Taobao and Tmall, JD.com, and Pinduoduo, presents an immense opportunity for businesses that want to use product and market data. Chinese e-commerce data scraping services can give businesses valuable insight into product trends, pricing strategies, consumer preferences, and competitor performance, and scraping search results from e-commerce apps provides information that can drive decisions. However, these platforms deploy anti-scraping measures designed to prevent large-scale data extraction. This article explores how to build an efficient, stable web scraping solution for Chinese e-commerce applications using Python or Java, covering the main challenges and the tools required.

Understanding the E-Commerce Landscape in China

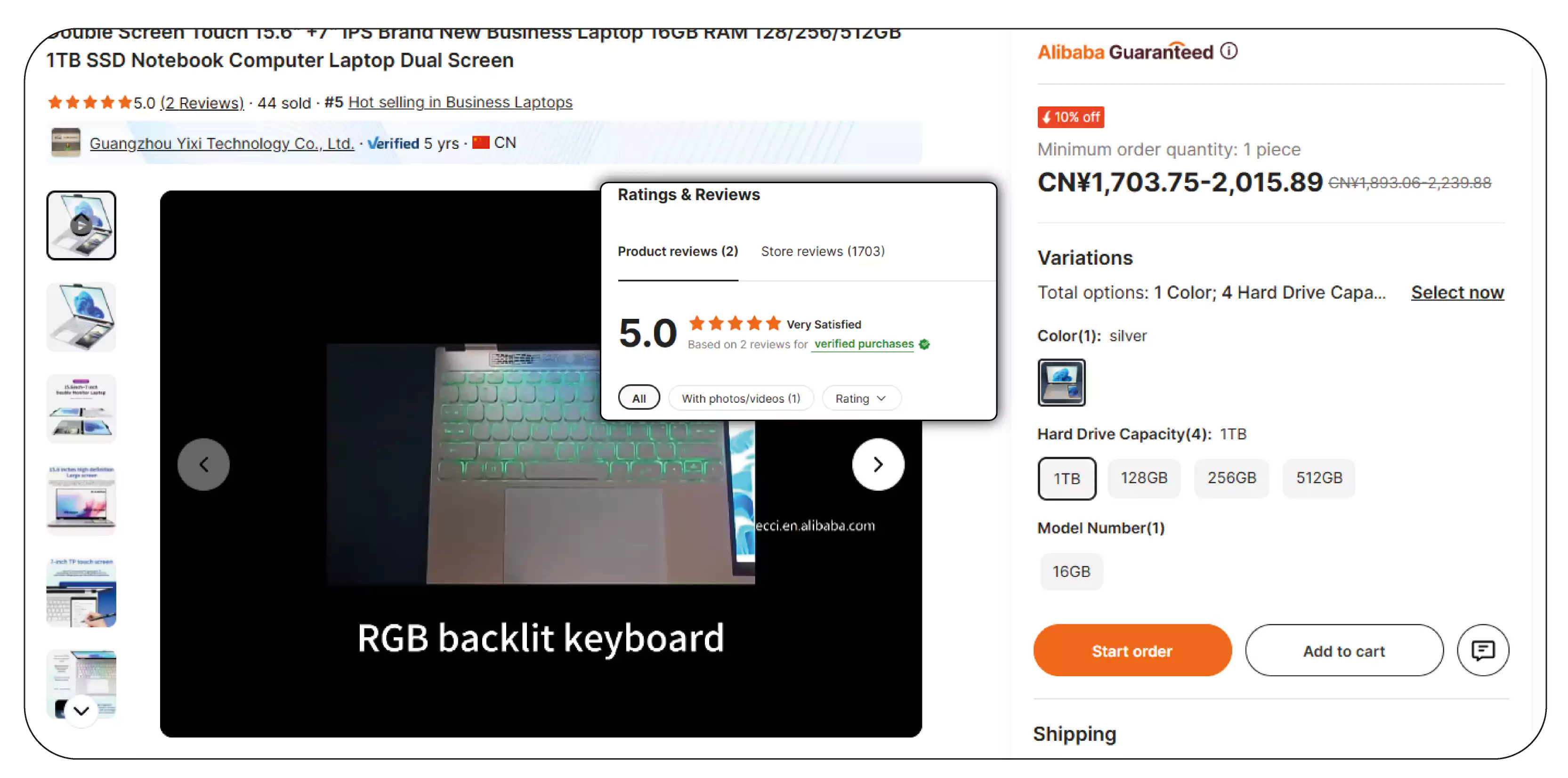

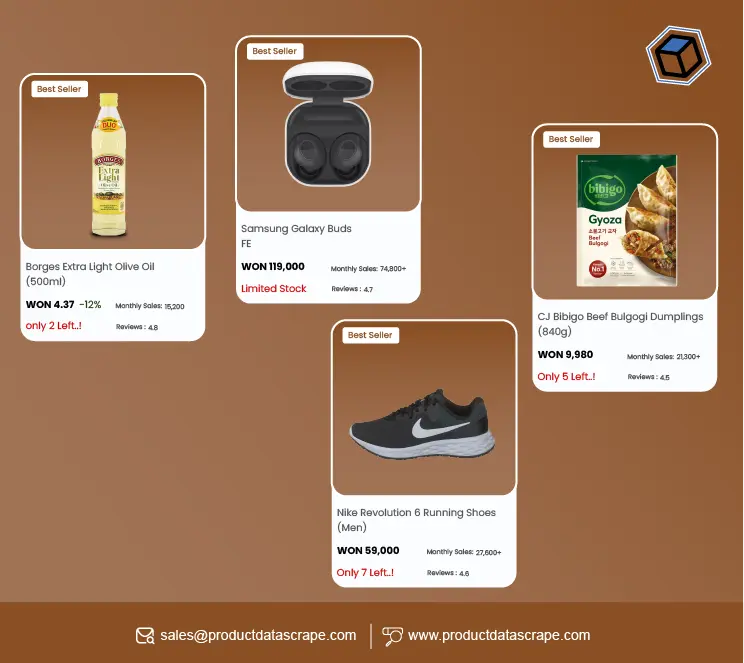

China's e-commerce market is one of the largest and fastest-growing in the world. It is dominated by a few key players: Alibaba (which operates Taobao and Tmall), JD.com, and Pinduoduo. Together these platforms account for the vast majority of the country's e-commerce transactions. Their millions of product listings, user reviews, and product attributes form a large pool of data, and e-commerce search results scraping services can turn these data points into useful insights.

This data is valuable for market research, competitor analysis, price monitoring, and understanding consumer behavior. Because prices and listings change constantly, real-time product data scraping from Chinese apps is needed to keep up with market trends and consumer preferences. However, Chinese e-commerce platforms pose unique challenges: sophisticated anti-scraping mechanisms are designed to stop bots from accessing and extracting data.

Challenges in Scraping Chinese E-Commerce Websites

Before diving into the scraping solution, it's crucial to understand the primary challenges associated with scraping Chinese e-commerce websites:

- Anti-Scraping Mechanisms: Many e-commerce platforms use CAPTCHAs, IP blocking, rate limiting, and JavaScript rendering to stop automated bots. They frequently detect and block repeated access from the same IP address or user agent, especially when requests arrive too quickly. Dealing with these mechanisms is crucial for product data extraction from Chinese e-commerce sites if access to the data is to remain continuous.

- Dynamic Content Loading: Modern Chinese e-commerce websites often use JavaScript to load content such as product details, images, and reviews after the initial page load. Traditional scrapers that parse only the static HTML returned by the server therefore miss much of the data. Scraping a Chinese app's search interface successfully requires techniques that handle this dynamically generated content, such as rendering the page in a real browser.

- Proxies and Rotating IPs: Because platforms block addresses that send too many requests, scrapers typically distribute requests across multiple IP addresses using proxies or rotating IPs. This is essential for scraping product prices from Chinese e-commerce websites without interrupting real-time data collection.

- Handling ShumeiIds: Many Chinese platforms use ShumeiIds, identifiers associated with the Shumei risk-control service, to track devices and user sessions. A scraper has to handle these identifiers consistently so that its traffic looks like a normal browsing session and it keeps continuous access to the required data in real time.
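Rate limiting is the one measure in this list that a scraper can simply respect. As a minimal sketch, assuming the platform signals throttling with HTTP 429 or 503 responses (an assumption, since some sites return a CAPTCHA page with status 200 instead), the helper below backs off exponentially and honours a numeric `Retry-After` header. The function names and defaults are hypothetical, and `session` can be any object with a Requests-style `get` method.

```python
import random
import time
from typing import Callable, Optional


def retry_after_seconds(value: Optional[str]) -> Optional[float]:
    """Parse a numeric Retry-After header; HTTP-date values are ignored here."""
    if value is None:
        return None
    try:
        return max(0.0, float(value))
    except ValueError:
        return None


def fetch_with_backoff(session, url: str, max_retries: int = 4,
                       base_delay: float = 1.0,
                       sleep: Callable[[float], None] = time.sleep):
    """GET `url`, backing off exponentially on HTTP 429/503 responses.

    Returns the first non-throttled response, or the last throttled one
    if every retry is used up (the caller should check `status_code`).
    """
    for attempt in range(max_retries + 1):
        response = session.get(url, timeout=10)
        if response.status_code not in (429, 503):
            return response
        if attempt == max_retries:
            break
        # Prefer the server's own hint; otherwise double the delay each attempt,
        # adding random jitter so parallel workers don't retry in lockstep.
        delay = retry_after_seconds(response.headers.get("Retry-After"))
        if delay is None:
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
        sleep(delay)
    return response
```

The injectable `sleep` argument keeps the helper testable; in production, leave it as `time.sleep`.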

Essential Tools and Technologies for Web Scraping

To effectively scrape search results from Chinese e-commerce platforms, we must employ the right combination of tools, libraries, and techniques. The scraping system should be robust, resilient to anti-scraping defenses, and efficient. Below are the essential tools and technologies needed for developing a scraping solution:

- Programming Languages: Python and Java are among the most popular languages for web scraping thanks to their rich ecosystems of libraries and frameworks. This project focuses on Python, which offers more flexibility and ease of use for scraping tasks.

Libraries for Web Scraping:

- Requests: A popular Python library for making HTTP requests. It sends requests to the target websites and retrieves the raw HTML (or JSON) they return.

- Selenium: Selenium is a browser-automation framework that drives a real browser, which makes it well suited to JavaScript-heavy websites. Because it renders pages and can simulate user actions, it is ideal for platforms that load content via JavaScript.

- BeautifulSoup: Once you have the raw HTML, BeautifulSoup parses it and lets you navigate the document tree to extract the required data, such as product names, prices, descriptions, and reviews.

- Scrapy: Scrapy is a robust, fast, and scalable web scraping framework. It suits large-scale projects, especially those that need many concurrent requests and efficient management of extracted data.
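To illustrate how Requests and BeautifulSoup fit together, here is a minimal parsing sketch. The HTML snippet and the CSS classes (`item`, `title`, `price`, `sales`) are hypothetical; real platforms use different class names that change often, so treat the selectors as placeholders.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a search-results page.
SAMPLE_HTML = """
<div class="item" data-id="1001">
  <a class="title">Wireless Earbuds</a>
  <span class="price">¥199.00</span>
  <span class="sales">2000+ sold</span>
</div>
<div class="item" data-id="1002">
  <a class="title">Phone Case</a>
  <span class="price">¥29.90</span>
</div>
"""


def text_or_none(node):
    """Return the stripped text of a node, or None if the node is missing."""
    return node.get_text(strip=True) if node is not None else None


def parse_search_results(html: str) -> list[dict]:
    """Extract one record per product card from a search-results page."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for card in soup.select("div.item"):
        results.append({
            "id": card.get("data-id"),
            "title": text_or_none(card.select_one(".title")),
            "price": text_or_none(card.select_one(".price")),
            # Optional fields may be absent on some cards.
            "sales": text_or_none(card.select_one(".sales")),
        })
    return results
```

In practice the HTML would come from a call such as `requests.get(url, timeout=10).text`; the parsing step stays the same.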

Proxies and IP Rotation:

- Proxy Servers: Proxy servers are essential for circumventing IP blocking mechanisms. Using a pool of proxies, requests can be routed through different IP addresses to appear as if they come from multiple users.

- Rotating Proxies: A rotating proxy service automatically rotates IP addresses with each request. This prevents the platform from blocking a single IP address, which could be flagged for scraping activities.

- ShumeiIds: To interact with Chinese e-commerce websites that use ShumeiIds, a session-handling mechanism must be implemented. This involves setting cookies and headers and persisting the session across requests, for example with a Requests session or a long-lived Selenium browser session.
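The generic part of session handling, keeping cookies and headers consistent across requests, can be sketched with `requests.Session`. This is only a starting point under stated assumptions: the header values and proxy URL are placeholders, and the sketch does not generate or reproduce ShumeiIds, whose issuance is platform-specific and not covered here.

```python
from typing import Optional

import requests


def make_session(user_agent: str, proxy_url: Optional[str] = None) -> requests.Session:
    """Build a Session whose cookies and headers persist across requests."""
    session = requests.Session()
    # Headers set here are sent with every request made through the session.
    # The Accept-Language value is an assumed default for Chinese-language sites.
    session.headers.update({
        "User-Agent": user_agent,
        "Accept-Language": "zh-CN,zh;q=0.9",
    })
    if proxy_url:
        # Route both HTTP and HTTPS traffic through the same (placeholder) proxy.
        session.proxies.update({"http": proxy_url, "https": proxy_url})
    return session
```

Cookies returned by the server in `Set-Cookie` headers are stored in `session.cookies` automatically and sent back on later requests made through the same session.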

Developing a Web Scraping Solution

Given the complexity of scraping search results from Chinese e-commerce applications, here is an overview of how to build a scraping solution:

Handling Anti-Scraping Mechanisms

To bypass anti-scraping mechanisms, the following techniques are commonly used:

- Proxy Rotation: When sending HTTP requests to e-commerce websites, it is crucial to rotate IP addresses regularly. This can be done by integrating a proxy rotation service or using a pool of proxies. Distributing requests across multiple proxies significantly reduces the risk of IP bans.

- User-Agent Rotation: In addition to rotating IPs, you should rotate user-agent strings to simulate requests coming from different browsers and devices. This helps prevent the detection of scraping bots.

- Handling CAPTCHAs: Some websites may present CAPTCHAs to verify that a request is coming from a human user. Various third-party services, such as 2Captcha or AntiCaptcha, provide automated CAPTCHA-solving services.

- Session Management and ShumeiIds: Websites that use session tracking mechanisms like ShumeiIds must manage cookies and headers properly. You must also extract and store session IDs for each interaction with the site, ensuring that requests look like they come from legitimate users.

Scraping Dynamic Content with Selenium

Since many e-commerce platforms rely on dynamic content loading, a tool like Selenium is invaluable. Selenium interacts with the page like a browser, making it suitable for scraping JavaScript-rendered data. With Selenium, you can simulate user actions, such as scrolling and clicking, to trigger dynamic content loading.

- Page Navigation: Selenium allows navigation through multiple pages of search results, just as a user would.

- Data Extraction: Once the page has loaded, the rendered HTML (Selenium's page_source) can be passed to BeautifulSoup to extract product details such as name, price, ratings, and availability.
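Infinite-scroll search pages are a common case of dynamic loading. A minimal sketch, assuming the page appends results when scrolled to the bottom, is to scroll repeatedly until the document height stops growing. The helper name and the 1.5-second default pause are hypothetical choices; `driver` is expected to be a Selenium WebDriver, whose `execute_script` method runs JavaScript in the page.

```python
import time


def scroll_until_stable(driver, max_rounds: int = 10, pause: float = 1.5) -> int:
    """Scroll to the bottom repeatedly until the page height stops growing.

    `driver` is any object exposing Selenium's `execute_script` method,
    e.g. a `selenium.webdriver.Chrome` instance. Returns the rounds scrolled.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for round_no in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give lazy-loaded results time to render
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return round_no  # nothing new loaded; assume end of results
        last_height = new_height
    return max_rounds
```

With a real browser, you would create `webdriver.Chrome()`, call `driver.get(...)` on the search URL, run `scroll_until_stable(driver)`, and then hand `driver.page_source` to BeautifulSoup.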

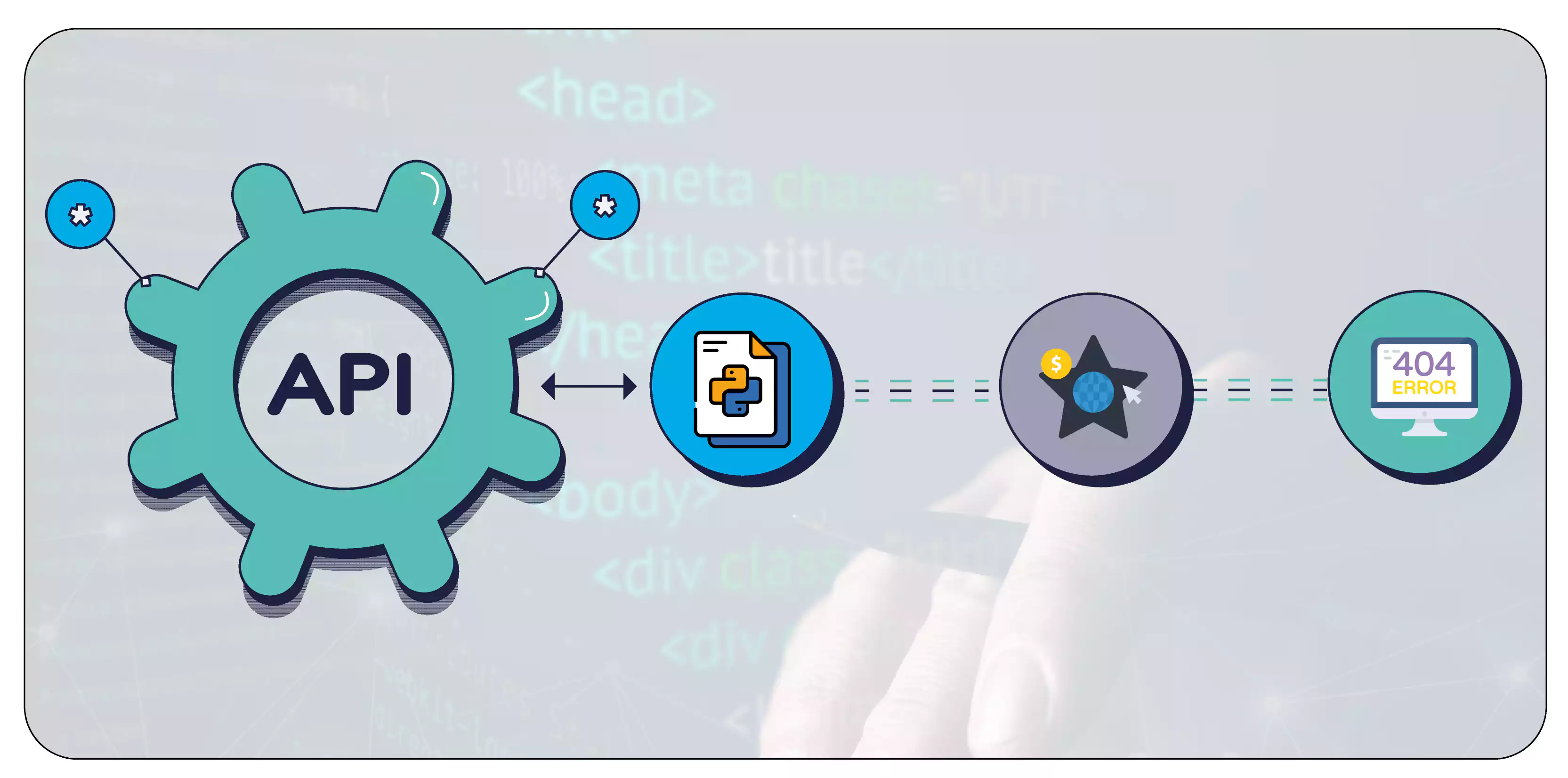

Implementing the API

Once the scraping solution is developed and optimized, the next step is to expose the scraped data via an API. The API will retrieve the scraped product search results for integration with other systems or for direct consumption by clients.

- Flask (in Python) or Spring Boot (in Java) can be used to develop the web API. The API will handle requests, scrape data from e-commerce platforms, and send the results in a structured format like JSON.

- Rate Limiting: Rate limiting is essential for stability and to avoid overloading the target websites. By capping how many scraping requests the API triggers in a given time frame, the service also reduces the chance of being detected and blocked.

- Error Handling: Robust error handling is crucial. In case of network failure or page structure changes, the API should gracefully handle errors and notify users of issues.

Data Storage and Management

Scraped data needs to be organized before it can be managed efficiently. Relational databases such as MySQL and PostgreSQL, or a NoSQL database such as MongoDB, are commonly used for this purpose; for very large datasets, a NoSQL store may scale more easily.

- Data Cleaning: Scraped data is often messy, containing missing values or duplicate entries. Implementing data-cleaning processes helps maintain the quality of the data.

- Data Aggregation: Aggregating data into meaningful reports, such as pricing trends, product comparisons, or sentiment analysis, can provide businesses with actionable insights.
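The cleaning and aggregation steps can be sketched in plain Python. This assumes records shaped like `{"id", "keyword", "title", "price"}` with price strings such as `¥1,299.00`; those field names are hypothetical, and an in-memory SQLite database stands in for MySQL or PostgreSQL so the example runs without a server.

```python
import re
import sqlite3
from typing import Optional

PRICE_RE = re.compile(r"\d+(?:\.\d+)?")


def parse_price(text: Optional[str]) -> Optional[float]:
    """Turn strings like '¥1,299.00' into 1299.0; return None if no number."""
    if not text:
        return None
    match = PRICE_RE.search(text.replace(",", ""))
    return float(match.group()) if match else None


def clean(records: list[dict]) -> list[dict]:
    """Drop rows without an id or price and de-duplicate on product id."""
    seen, cleaned = set(), []
    for rec in records:
        pid = rec.get("id")
        price = parse_price(rec.get("price"))
        if pid is None or price is None or pid in seen:
            continue
        seen.add(pid)
        cleaned.append({"id": pid, "keyword": rec.get("keyword"),
                        "title": (rec.get("title") or "").strip(), "price": price})
    return cleaned


def store_and_summarize(rows: list[dict]) -> list[tuple]:
    """Load rows into SQLite and return (keyword, count, avg price) per keyword."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products "
                 "(id TEXT PRIMARY KEY, keyword TEXT, title TEXT, price REAL)")
    conn.executemany(
        "INSERT OR REPLACE INTO products VALUES (:id, :keyword, :title, :price)", rows)
    summary = conn.execute(
        "SELECT keyword, COUNT(*), ROUND(AVG(price), 2) FROM products "
        "GROUP BY keyword ORDER BY keyword").fetchall()
    conn.close()
    return summary
```

With a production database, the same `GROUP BY` query works largely unchanged; mainly the connection and parameter-placeholder syntax differ.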

Legal and Ethical Considerations

Understanding the legal and ethical considerations around e-commerce dataset scraping is crucial. Scraping e-commerce websites may violate their terms of service and, in some cases, may be illegal. To minimize legal risk, it is essential to:

- Review the Terms of Service: Always check the terms of service of the website you are scraping to ensure you are not violating any clauses.

- Obtain Permission: Where possible, get permission from the platform or use an official API if it offers one; this is a legitimate way to gather the data.
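Alongside reading the terms of service, one check that can be automated is whether a site's robots.txt permits the paths you plan to request. Here is a minimal sketch with Python's standard `urllib.robotparser`; the rules and URLs are made-up examples, and the check complements, rather than replaces, reviewing the terms of service.

```python
from urllib.robotparser import RobotFileParser


def allowed_paths(robots_txt: str, user_agent: str, urls: list[str]) -> dict[str, bool]:
    """Report which URLs the given robots.txt rules permit for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


EXAMPLE_ROBOTS = "User-agent: *\nDisallow: /search\nAllow: /item\n"
```

Against a live site, call `parser.set_url("https://example.com/robots.txt")` and `parser.read()` instead of `parse()`.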

By keeping these considerations in mind, businesses can use scraped data to inform pricing strategies while limiting legal and ethical risk.

Conclusion

Scraping search results from Chinese e-commerce applications presents significant opportunities for businesses, enabling them to gather valuable market data, track competitors, and optimize their product offerings. However, it also comes with challenges, including sophisticated anti-scraping measures and dynamic content. Businesses can navigate these obstacles and extract relevant product data with tools such as Selenium, Requests, and proxy rotation services. Web scraping of e-commerce websites gives businesses access to large amounts of data, which is crucial for price monitoring and identifying market trends. With careful attention to legal and ethical considerations, a well-built web scraping solution can provide valuable insight into the competitive landscape of Chinese e-commerce.

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.
