Introduction to Web Scraping

Web scraping is the automated process of extracting data from websites. Whether you're scraping for e-commerce product data, news articles, job postings, or any other type of information, web scraping helps to collect and organize data for analysis. As businesses and industries increasingly rely on web data, web scraping has become a valuable tool for marketing teams, data scientists, researchers, and developers.

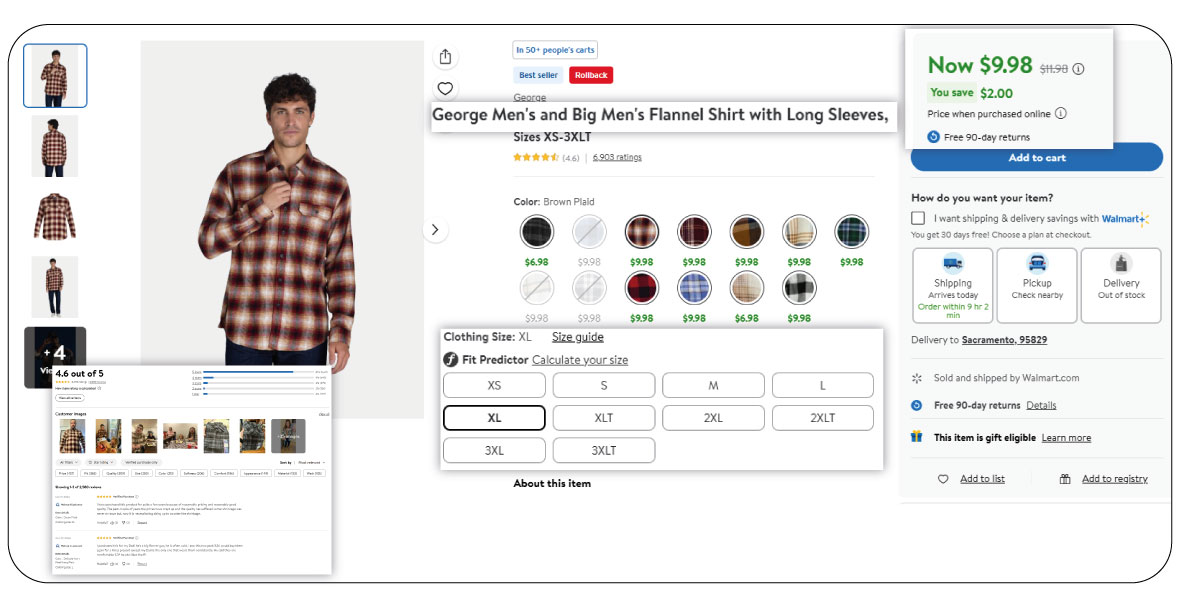

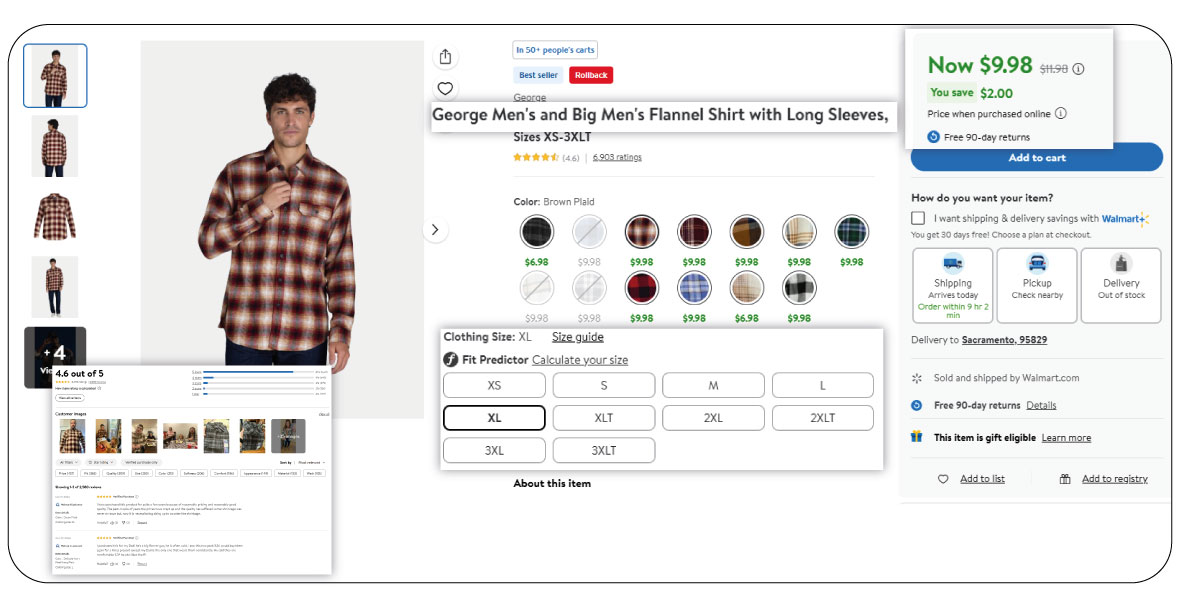

This guide focuses on scraping e-commerce platforms and shows how Ruby can be used to extract data such as product listings, pricing, reviews, and more.

Why Use Ruby for Web Scraping?

Ruby offers several advantages when it comes to web scraping:

- Simplicity and Elegance: Ruby is known for its readable and concise syntax, which makes it easy to write and understand scraping scripts.

- Powerful Libraries: Ruby has a rich ecosystem of libraries (gems) like Nokogiri, Mechanize, and HTTParty that make web scraping simple and efficient.

- Active Community: Ruby has a strong developer community that regularly contributes to the creation of powerful tools and gems, allowing developers to access resources, tutorials, and support easily.

- Cross-Platform: Ruby runs on various operating systems, ensuring that scraping scripts can be executed on multiple platforms, including Windows, macOS, and Linux.

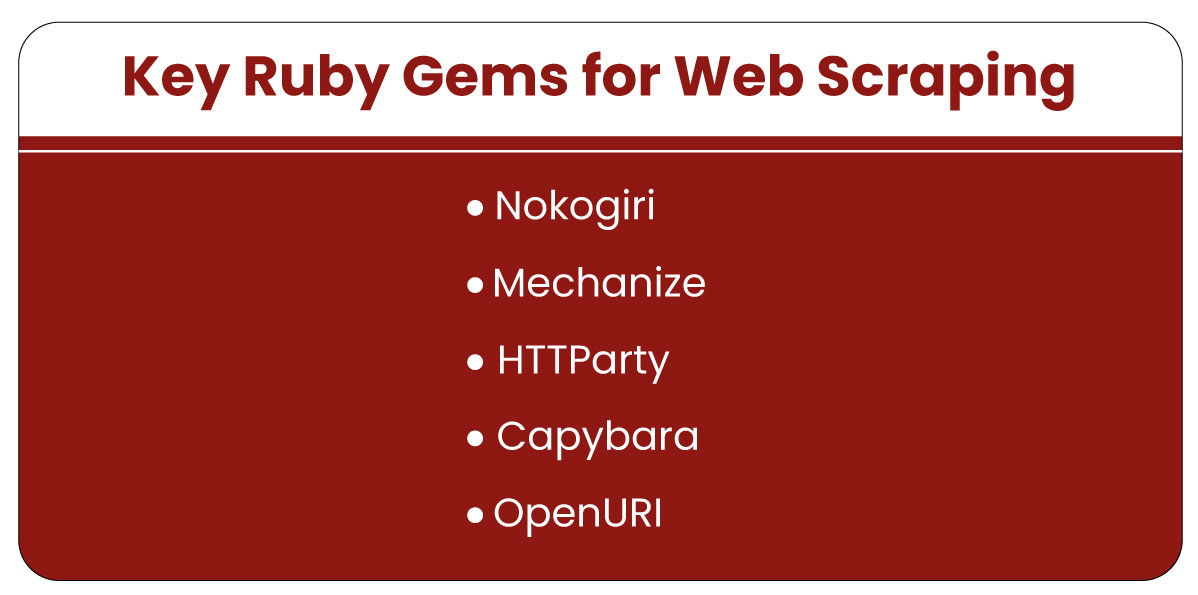

Key Ruby Gems for Web Scraping

When building web scrapers in Ruby, the following gems are commonly used:

- Nokogiri: A widely used gem for parsing HTML and XML documents. It is built on native parsers such as libxml2, which makes it fast, and it supports both CSS selectors and XPath for extracting data.

- Mechanize: This gem automates interaction with websites and can handle form submissions, cookies, sessions, and redirections.

- HTTParty: HTTParty allows you to make HTTP requests and handle responses easily. It simplifies working with APIs and getting data from websites.

- Capybara: Primarily used for testing, Capybara can drive a real browser through drivers such as Selenium, making it useful for scraping dynamic content rendered by JavaScript.

- OpenURI: Part of Ruby's standard library (loaded with require 'open-uri'), this library lets you open and read web pages without installing anything extra.

Setting Up Your Ruby Environment for Web Scraping

To start web scraping with Ruby, you need to have Ruby and the necessary gems installed on your system. Here’s a basic setup guide:

1. Install Ruby:

- On macOS, you can install Ruby using Homebrew:

brew install ruby

- On Ubuntu or Debian, you can install Ruby using apt:

sudo apt-get install ruby-full

2. Install Required Gems: Use the gem install command to install the required gems.

gem install nokogiri mechanize httparty capybara

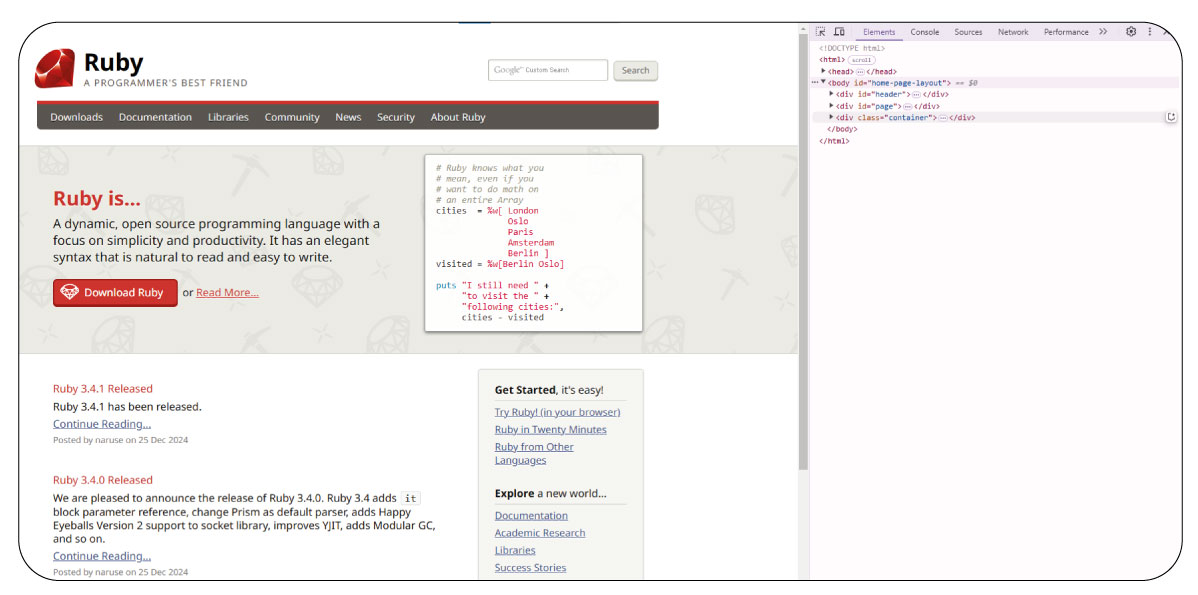

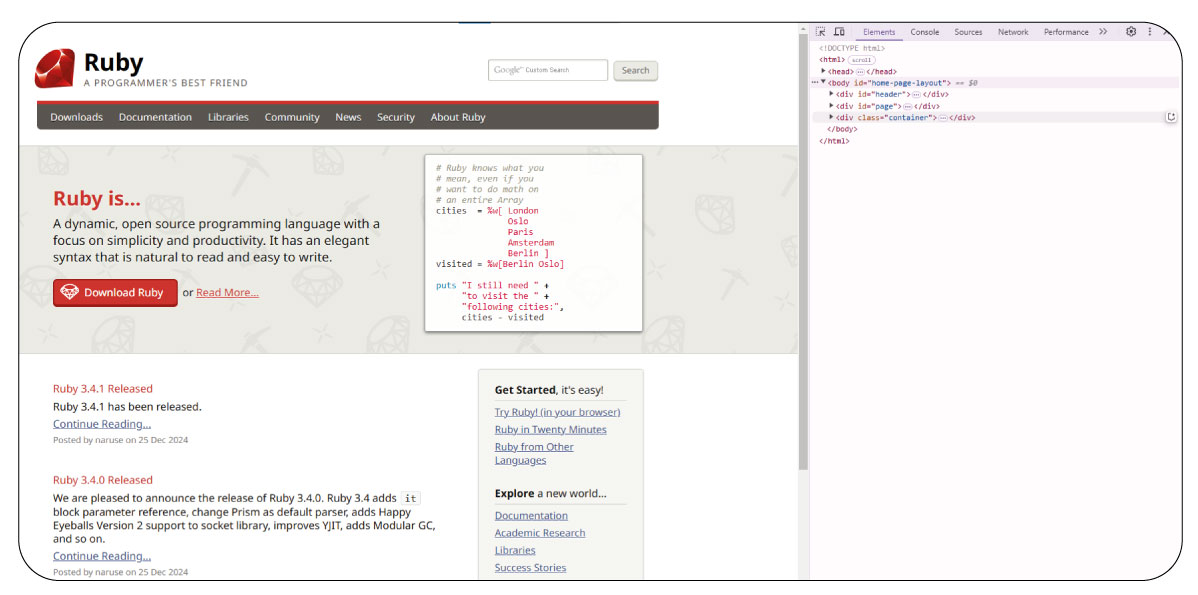

Understanding the Structure of a Website

Before scraping data, it’s important to understand the structure of the website you're targeting. Websites are usually built with HTML, CSS, and JavaScript, and knowing the structure of the HTML will help you efficiently extract the data you need.

Here’s how to examine the structure of a webpage:

1. Open the Developer Tools: Right-click on a webpage and select “Inspect” to open your browser's developer tools, which show the HTML structure of the page.

2. Identify Key Elements: Look for HTML tags like div, span, a, and others that contain the data you want to scrape.

3. Use CSS Selectors: Note the tag names, classes, and IDs of those elements, then write CSS selectors (such as div.product or span.price) that target them.

Introduction to Product Data Scrape

Product Data Scrape is a platform designed to help users extract and analyze product data from various e-commerce websites. It’s tailored for scraping product details such as:

- Product names

- Prices

- Availability

- Images

- Reviews

- Ratings

This platform allows you to set up scraping configurations to gather data from various e-commerce sites and analyze market trends. Ruby can integrate seamlessly with Product Data Scrape, enabling you to automate the collection of e-commerce data.

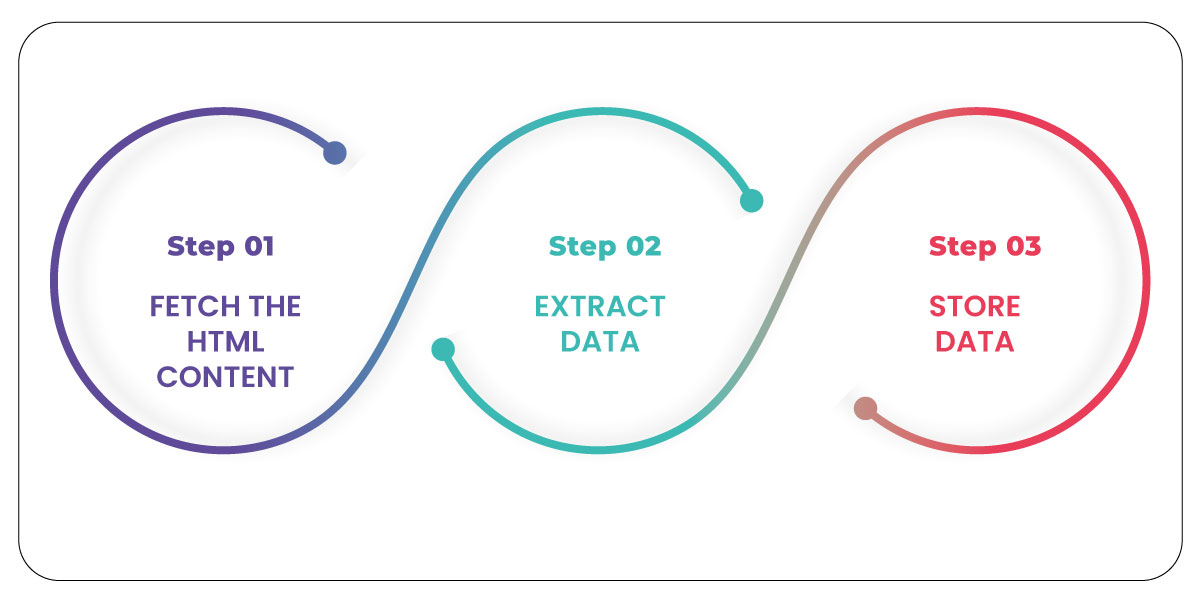

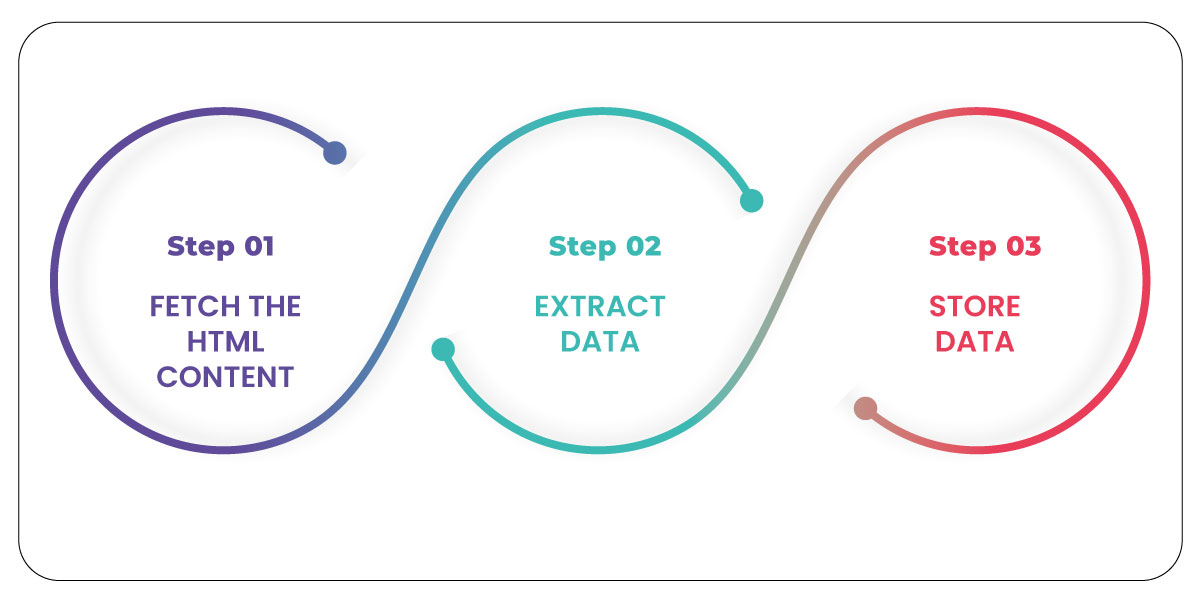

Step-by-Step Guide to Web Scraping with Ruby

Let’s walk through a simple example of scraping product data from an e-commerce website using Ruby and Nokogiri.

Step 1: Fetch the HTML Content
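One possible way to do this is with open-uri from the standard library. This is a minimal sketch, not a definitive implementation: the fetch_html helper, the User-Agent string, the timeout values, and the example URL are all assumptions made for illustration.

```ruby
require 'open-uri'

# Download a page and return its HTML as a String.
# open-uri raises OpenURI::HTTPError for 4xx and 5xx responses.
def fetch_html(url)
  URI.open(
    url,
    'User-Agent' => 'ruby-scraper-example/0.1', # placeholder identifier
    open_timeout: 10,                           # seconds to wait for a connection
    read_timeout: 10                            # seconds to wait for data
  ) { |io| io.read }
end

# Example call (placeholder URL, not a real shop):
# html = fetch_html('https://example.com/products')
# puts html[0, 200]
```

HTTParty or Mechanize could fill the same role; open-uri is used here only because it needs no extra gem.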

Step 2: Extract Data

Let’s extract product names and prices:

Step 3: Store Data

You can store the extracted data in a CSV file for later use:
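A minimal sketch using Ruby's csv library is shown below. The sample rows, the write_products_csv helper, and the output path in the system temp directory are assumptions for illustration.

```ruby
require 'csv'
require 'tmpdir'

# Sample rows in the shape an extraction step might produce (made-up data).
products = [
  { name: 'Wireless Mouse', price: 19.99 },
  { name: 'Keyboard, Mechanical', price: 1049.5 }
]

# Write a header row followed by one row per product.
def write_products_csv(products, path)
  CSV.open(path, 'w') do |csv|
    csv << %w[name price]
    products.each { |product| csv << [product[:name], product[:price]] }
  end
end

# Hypothetical output location; any writable path works.
path = File.join(Dir.tmpdir, 'products.csv')
write_products_csv(products, path)
puts File.read(path)
```

The csv library quotes fields that contain commas, so a name like "Keyboard, Mechanical" survives a round trip without manual escaping.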

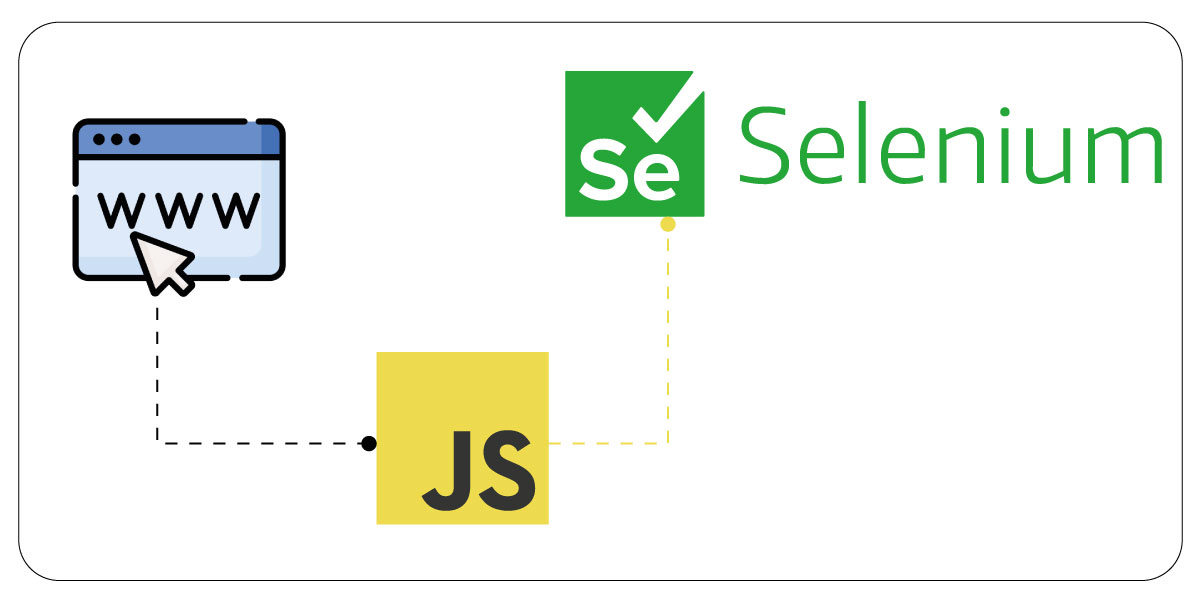

Handling Dynamic Websites and JavaScript Rendering

Many modern websites load content dynamically with JavaScript, so the data you want may be absent from the HTML returned by a plain HTTP request. In such cases, you can use tools like Capybara or Selenium to load the page in a real browser and read the rendered result.

For instance, with Capybara:
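The following is a sketch only. It assumes the selenium-webdriver gem and a local Chrome installation in addition to Capybara, and the scrape_rendered_names helper, the .product-name selector, and the example URL are hypothetical.

```ruby
# Render a JavaScript-driven page in headless Chrome and return product names.
# Assumes the capybara and selenium-webdriver gems plus a local Chrome install.
def scrape_rendered_names(url, selector: '.product-name')
  # Required inside the method so that loading this file does not need a browser.
  require 'capybara'
  require 'selenium-webdriver'

  session = Capybara::Session.new(:selenium_chrome_headless)
  begin
    session.visit(url)
    # Waits for at least one match to appear, or raises once the wait expires.
    session.assert_selector(selector, wait: 10)
    session.all(selector).map(&:text)
  ensure
    # Shut the browser down even if the scrape fails.
    session.quit
  end
end

# Example call (placeholder URL):
# puts scrape_rendered_names('https://example.com/products')
```

Waiting for the selector matters more than the visit itself: returning as soon as the page loads often yields an empty result because the JavaScript has not finished rendering.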

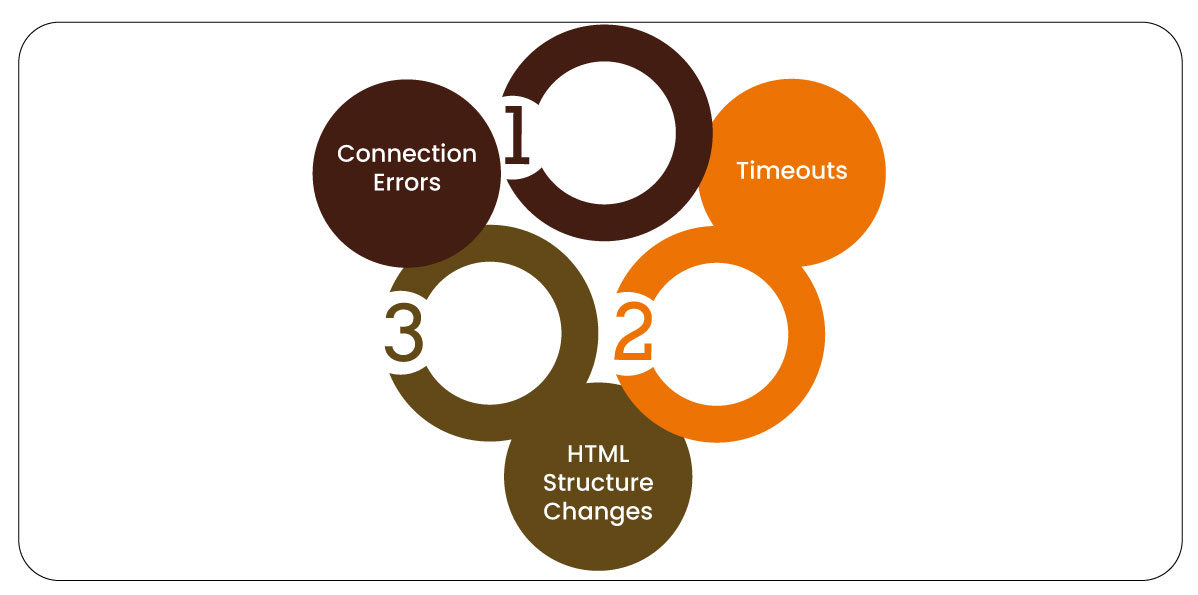

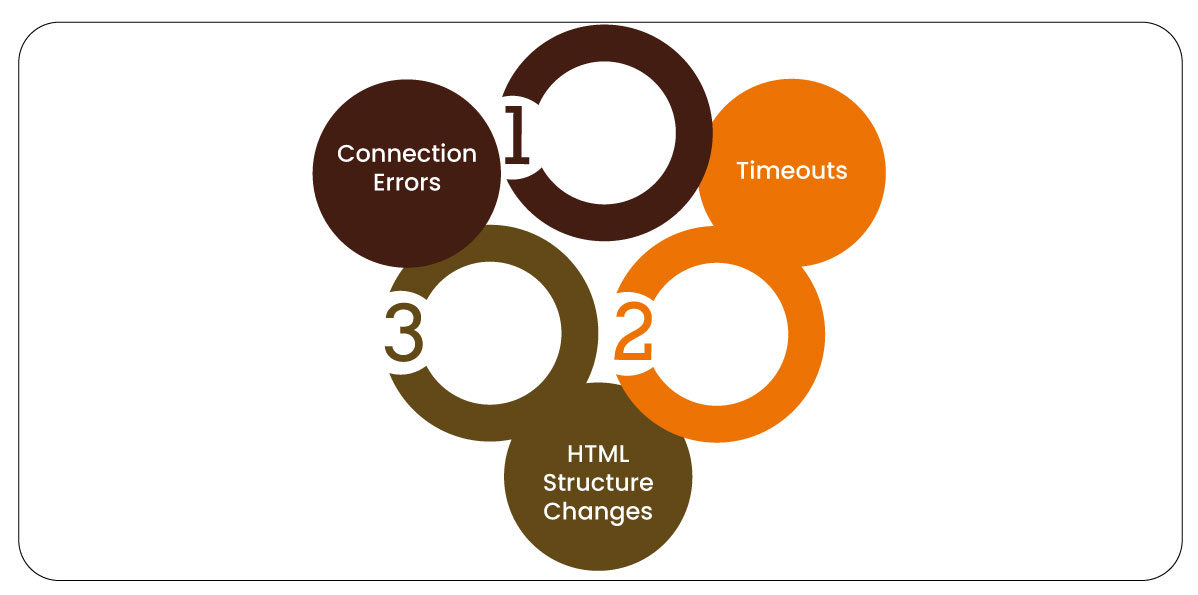

Managing Web Scraping Errors

Web scraping often involves dealing with a variety of errors:

- Connection Errors: Network issues or websites going down.

- Timeouts: The server takes too long to respond.

- HTML Structure Changes: Websites often change their structure, breaking your scraping code.

To handle these errors, you can use begin-rescue blocks in Ruby to catch and manage exceptions:
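One way this could look is a small retry wrapper around any request. The with_retries helper, the list of errors treated as transient, and the delay values are assumptions chosen for the sketch, not a fixed recipe.

```ruby
require 'net/http'

# Run the block, retrying transient network failures with a growing pause.
def with_retries(max_attempts: 3, base_delay: 1.0)
  # Errors worth retrying: DNS failures, refused or reset connections, timeouts.
  transient_errors = [
    SocketError,
    Errno::ECONNREFUSED,
    Errno::ECONNRESET,
    Net::OpenTimeout,
    Net::ReadTimeout
  ]
  attempts = 0
  begin
    attempts += 1
    yield
  rescue *transient_errors => e
    # Give up and re-raise once the attempt budget is spent.
    raise if attempts >= max_attempts

    warn "Attempt #{attempts} failed (#{e.class}); retrying"
    sleep(base_delay * attempts)
    retry
  end
end

# Example call (placeholder URL):
# body = with_retries { Net::HTTP.get(URI('https://example.com/products')) }
```

Connection errors and timeouts are retried because they are often temporary. A changed HTML structure is not, so it is better handled by checking for nil results when extracting data.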

Best Practices for Ethical Web Scraping

When scraping data, it’s important to follow ethical guidelines:

- Respect robots.txt: Always review the website's robots.txt file to see which paths it asks automated clients to avoid.

- Avoid Overloading Servers: Implement rate limiting and use delays between requests to avoid overwhelming the server.

- Check Terms of Service: Ensure that scraping is not prohibited by the website's terms.
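The rate-limiting advice above can be sketched as a fixed pause between consecutive requests. The each_with_delay helper, the delay value, and the URLs are placeholders; an appropriate delay depends on the site.

```ruby
# Yield each URL in turn, pausing between consecutive requests.
def each_with_delay(urls, delay_seconds: 1.0)
  urls.each_with_index do |url, index|
    # No pause before the first request, only between requests.
    sleep(delay_seconds) if index.positive?
    yield url
  end
end

# Placeholder URLs; replace the block body with a real fetch.
pages = %w[
  https://example.com/products?page=1
  https://example.com/products?page=2
  https://example.com/products?page=3
]

visited = []
each_with_delay(pages, delay_seconds: 0.1) do |url|
  visited << url
  puts "Fetching #{url}"
end
```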

Automating Scraping Tasks

To automate the scraping process, you can use cron jobs on Linux or Task Scheduler on Windows to run your scraper at specified intervals.

For instance, to run your Ruby script every hour, you can add a cron job like this:

0 * * * * /usr/bin/ruby /path/to/your/script.rb

Conclusion

Ruby is a powerful and flexible language for web scraping, with tools like Nokogiri and Mechanize that simplify the process of extracting data from websites. By integrating with platforms like Product Data Scrape, you can gather valuable e-commerce data to stay ahead in a competitive market. However, it’s important to follow ethical scraping practices to ensure that your web scraping activities are both legal and responsible.
