Introduction to Web Scraping

What is Web Scraping?

Web scraping refers to the process of extracting data from websites. It involves downloading the web page's HTML content, parsing it, and extracting specific pieces of data, which can then be used for analysis, reporting, or further processing. For instance, businesses scrape product prices, reviews, and ratings to track market trends.

Legal Considerations in Web Scraping

Web scraping can violate a website's terms of service (ToS), especially when it involves excessive request volumes or bypassing restrictions such as CAPTCHAs. Review the ToS before scraping a website, and comply with applicable regulations such as the GDPR whenever the data includes personal information.

Key Challenges in Web Scraping

- Dynamic Content: Many modern websites load content through JavaScript after the initial page load, so the raw HTML response may not contain the data you need.

- CAPTCHA and Anti-bot Measures: Websites may use CAPTCHAs or other anti-scraping technologies to block bots.

- HTML Structure Variability: Page layouts and markup can change without notice, so scraping code needs regular maintenance.

Why Use Java for Web Scraping?

Advantages of Java in Web Scraping

- Platform Independence: Java can run on any platform that supports the Java Virtual Machine (JVM), making it highly portable.

- Robust Libraries: Java offers numerous libraries for web scraping, such as Jsoup, Selenium, and Apache HttpClient.

- Multithreading: Java supports multithreading, which can help optimize scraping tasks by running multiple tasks concurrently.

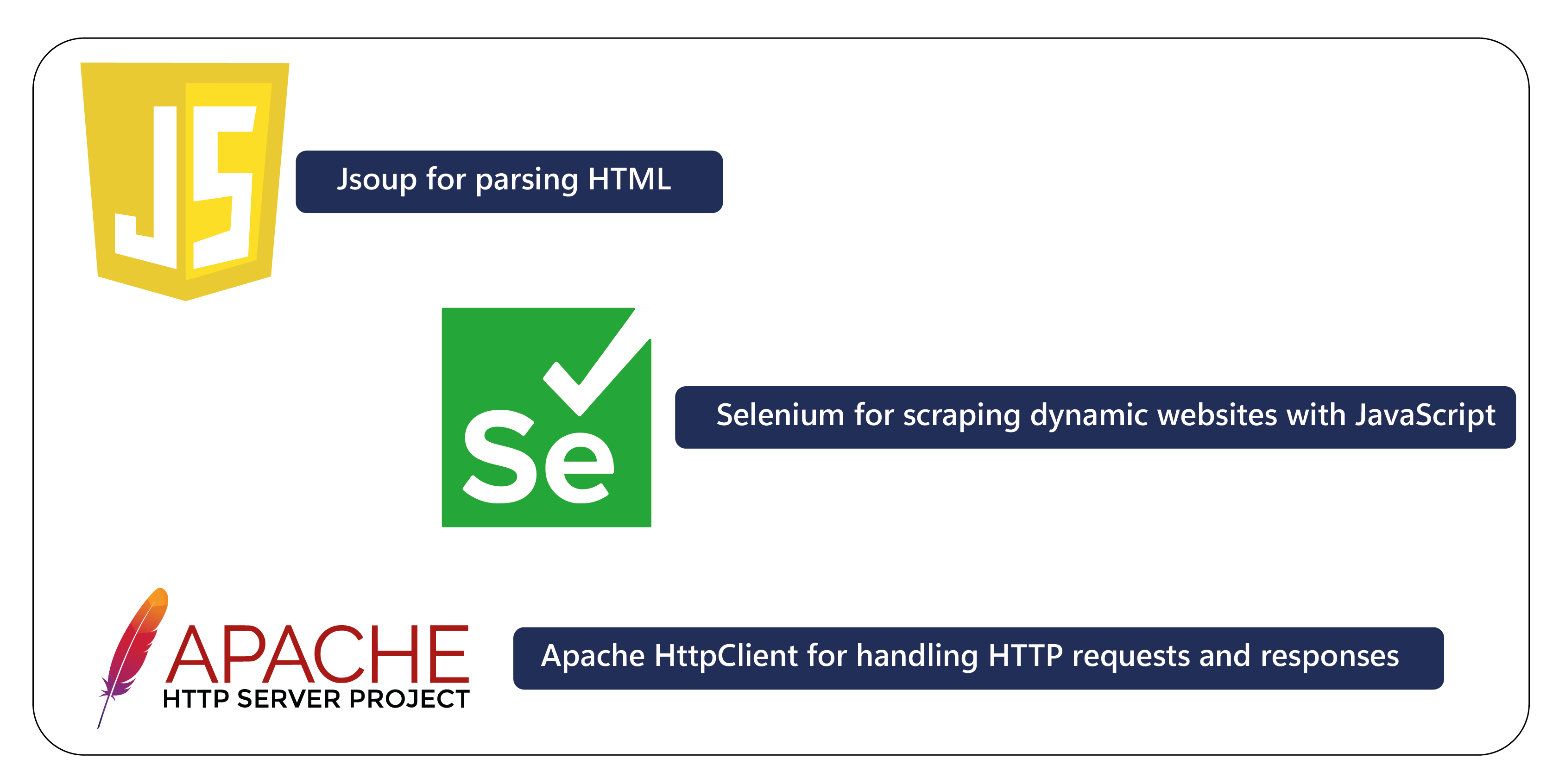

Java’s Ecosystem for Web Scraping

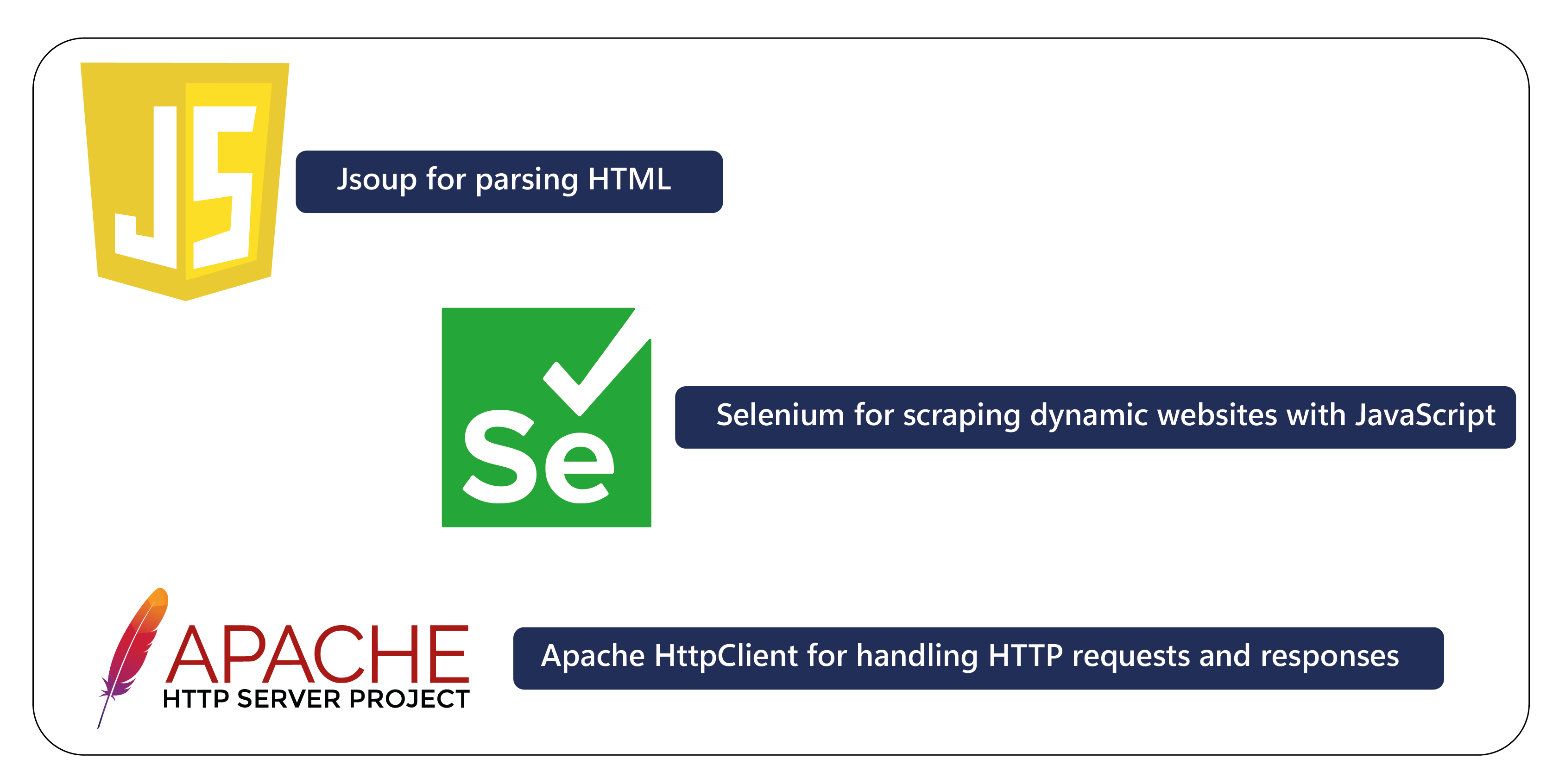

Java provides a vast ecosystem of libraries and frameworks that allow developers to build robust web scraping tools. Some popular libraries include:

- Jsoup for parsing HTML

- Selenium for scraping dynamic websites with JavaScript

- Apache HttpClient for handling HTTP requests and responses

Setting Up Your Java Development Environment for Web Scraping

Before you start scraping, you need to set up the Java environment on your machine.

Installing Java Development Kit (JDK)

Download and install a current JDK, either from Oracle's official website or from an OpenJDK distribution.

Set the JAVA_HOME environment variable and confirm that the javac command is on your PATH, for example by running javac -version in your terminal.

Using Maven or Gradle for Dependency Management

Both Maven and Gradle are popular build tools that help manage libraries and dependencies. If you’re using Maven, add dependencies for libraries like Jsoup and Selenium to your pom.xml file.

Popular Java Libraries for Web Scraping

Jsoup: The Go-to Library for HTML Parsing

Jsoup is a simple and fast HTML parser that can parse HTML from files, URLs, or strings and extract or manipulate the data. It is widely used in web scraping for parsing static HTML pages.

Example usage:
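A minimal sketch of the parsing workflow described above, assuming the Jsoup dependency is on the classpath. The class name `JsoupBasics` and the sample HTML are made up for illustration. For a live page, `Jsoup.connect(url).get()` would take the place of `Jsoup.parse(html)`.

```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;

import java.util.ArrayList;
import java.util.List;

final class JsoupBasics {

    // Returns the text of the <title> element.
    static String extractTitle(String html) {
        return Jsoup.parse(html).title();
    }

    // Returns the href attribute of every link in the given HTML.
    static List<String> extractLinks(String html) {
        Document doc = Jsoup.parse(html);
        List<String> links = new ArrayList<>();
        for (Element link : doc.select("a[href]")) {
            links.add(link.attr("href"));
        }
        return links;
    }

    public static void main(String[] args) {
        // Illustrative markup only; not taken from any real site.
        String html = "<html><head><title>Demo Shop</title></head>"
                + "<body><a href=\"/p/1\">First</a><a href=\"/p/2\">Second</a></body></html>";
        System.out.println(extractTitle(html));
        System.out.println(extractLinks(html));
    }
}
```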

Selenium WebDriver: For Scraping Dynamic Websites

Selenium allows you to control a web browser programmatically. It’s particularly useful for scraping websites that rely heavily on JavaScript to render content.

Example usage:

HtmlUnit: A Headless Browser for Java

HtmlUnit is a headless browser: it has no GUI but can still load web pages and execute JavaScript. It is lighter than driving a full browser through Selenium, although its JavaScript support is less complete than a real browser's.

Apache HttpClient: Making HTTP Requests

HttpClient is useful for sending HTTP requests and receiving responses. It can be used when scraping data from APIs or making HTTP requests to websites without requiring a browser.
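The request-and-response pattern can be sketched without extra dependencies. Note that this example uses the JDK's built-in `java.net.http.HttpClient` (Java 11 and later) rather than Apache HttpClient. The Apache library follows the same build-request, send, read-response pattern, but its API differs. The class name, URL, and User-Agent string below are placeholders.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

final class SimpleFetcher {

    // Builds a GET request with a timeout and an explicit User-Agent header.
    static HttpRequest buildRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(10))
                .header("User-Agent", "example-scraper/1.0") // placeholder value
                .GET()
                .build();
    }

    // Sends the request and returns the body; fails on any non-2xx status.
    static String fetch(HttpClient client, String url) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildRequest(url), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) {
            throw new IOException("Unexpected status " + response.statusCode() + " for " + url);
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        String body = fetch(client, "https://example.com/");
        System.out.println("Fetched " + body.length() + " characters");
    }
}
```

The returned body can then be handed to an HTML parser, or to a JSON parser when the endpoint is an API.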

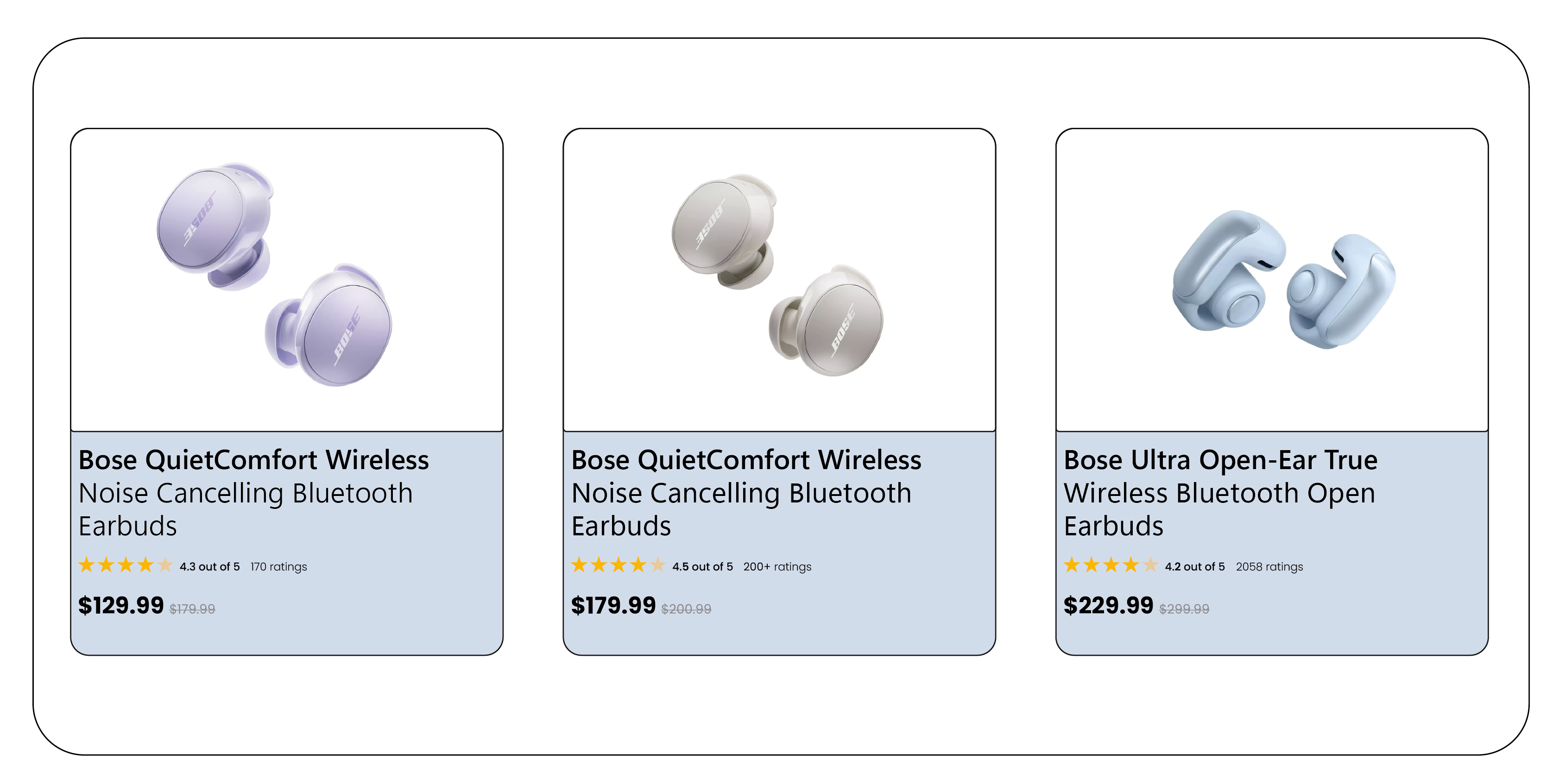

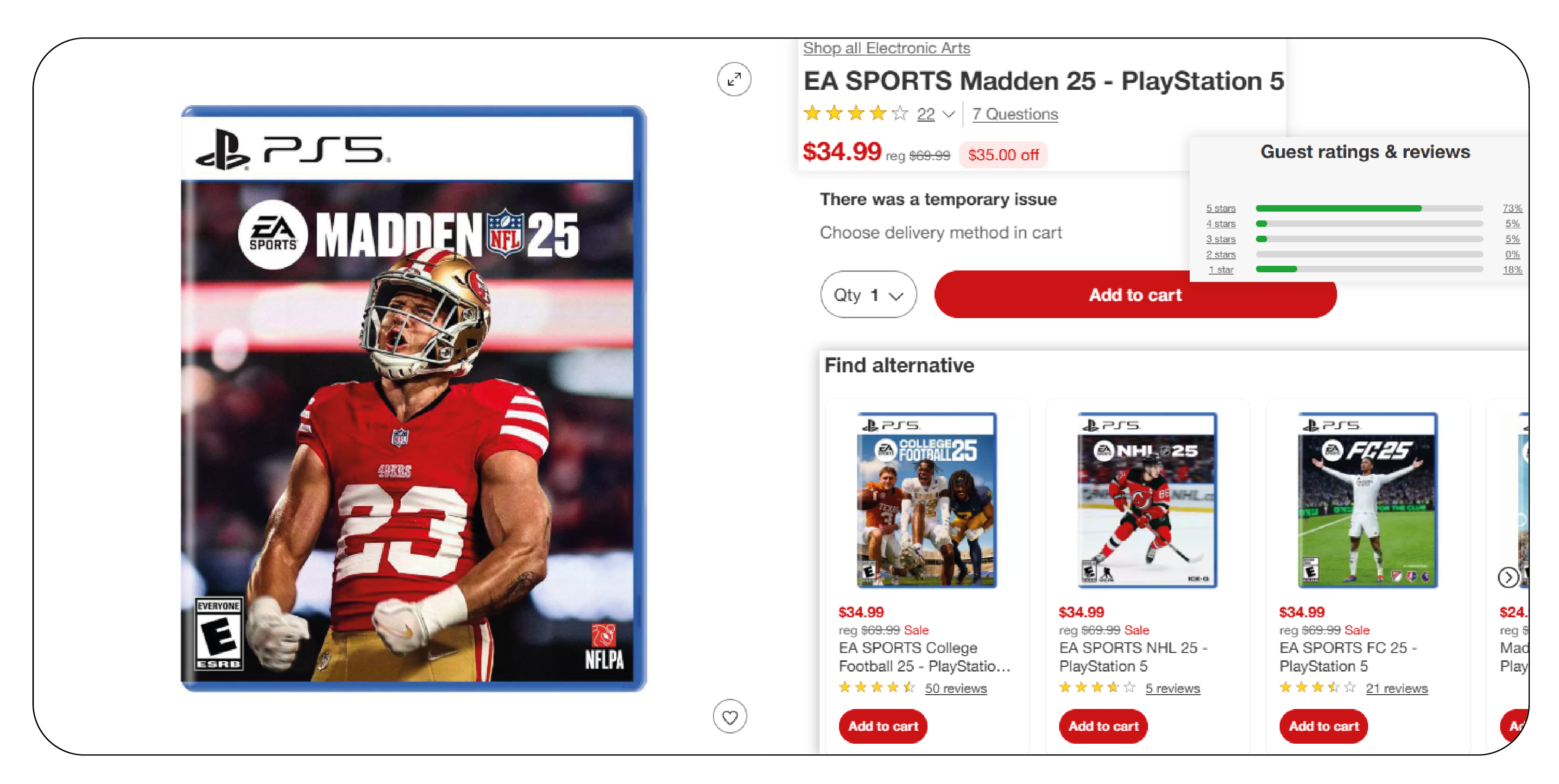

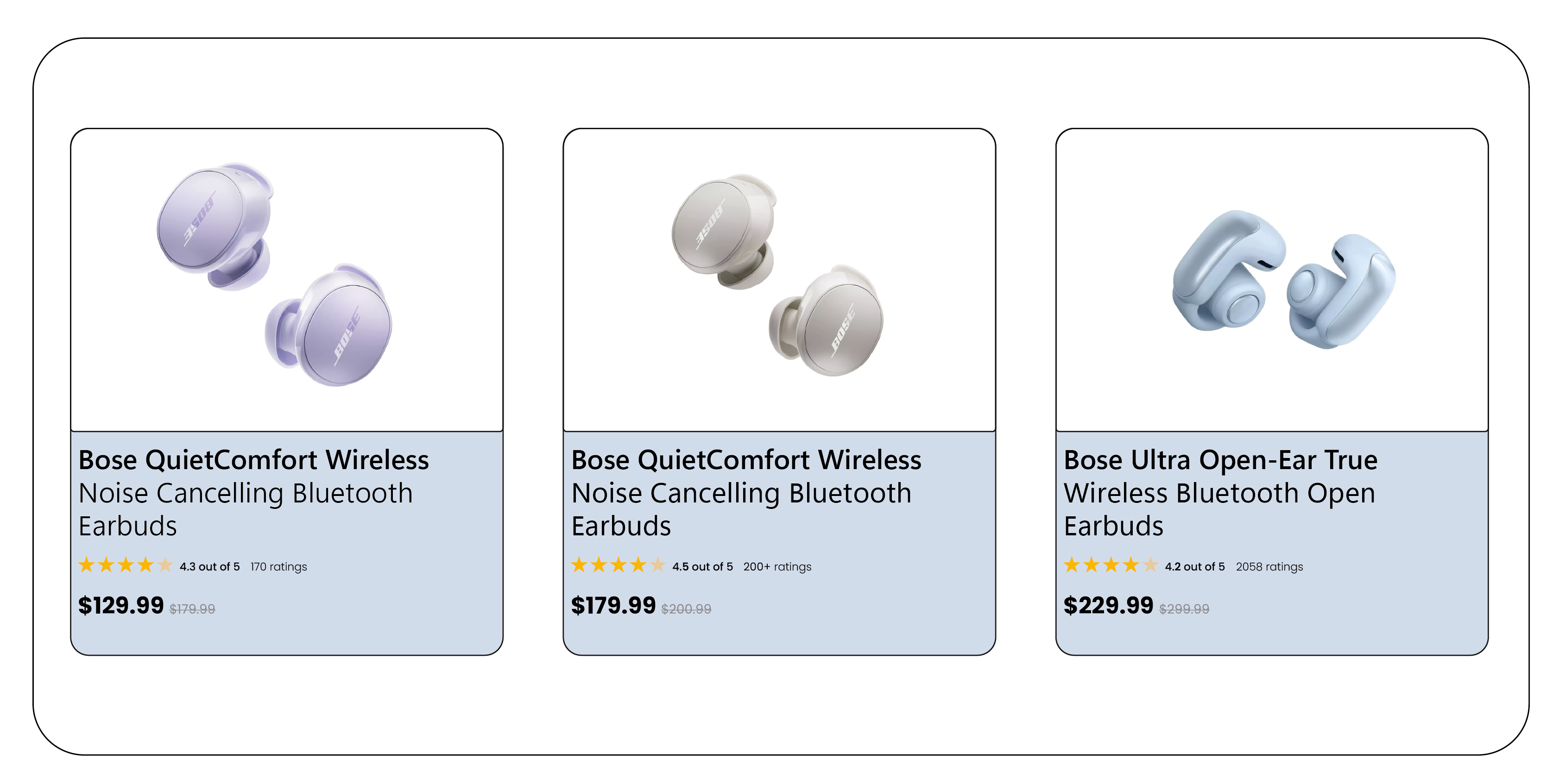

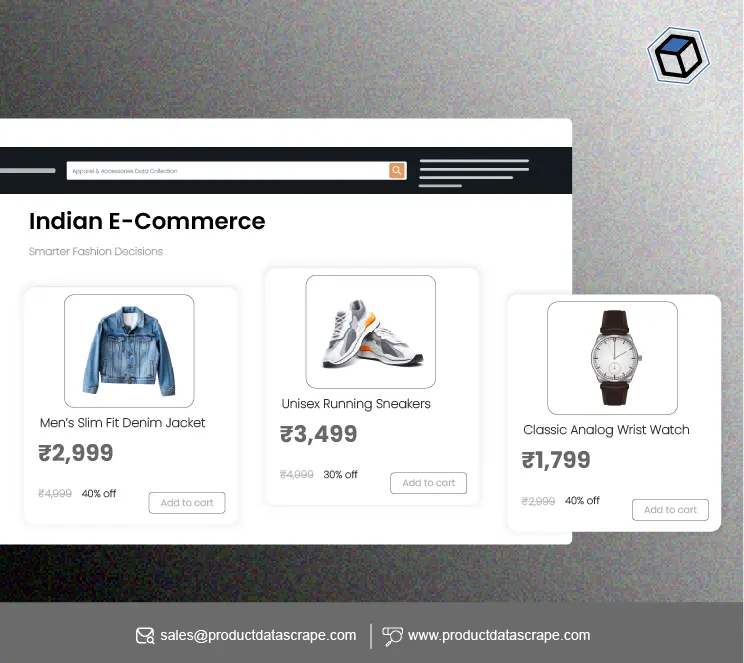

Product Data Scrape: Specialized Scraping for E-Commerce

Product Data Scrape is a specialized library designed for scraping product data from e-commerce websites. It offers built-in methods to extract product names, descriptions, prices, and availability from online stores.

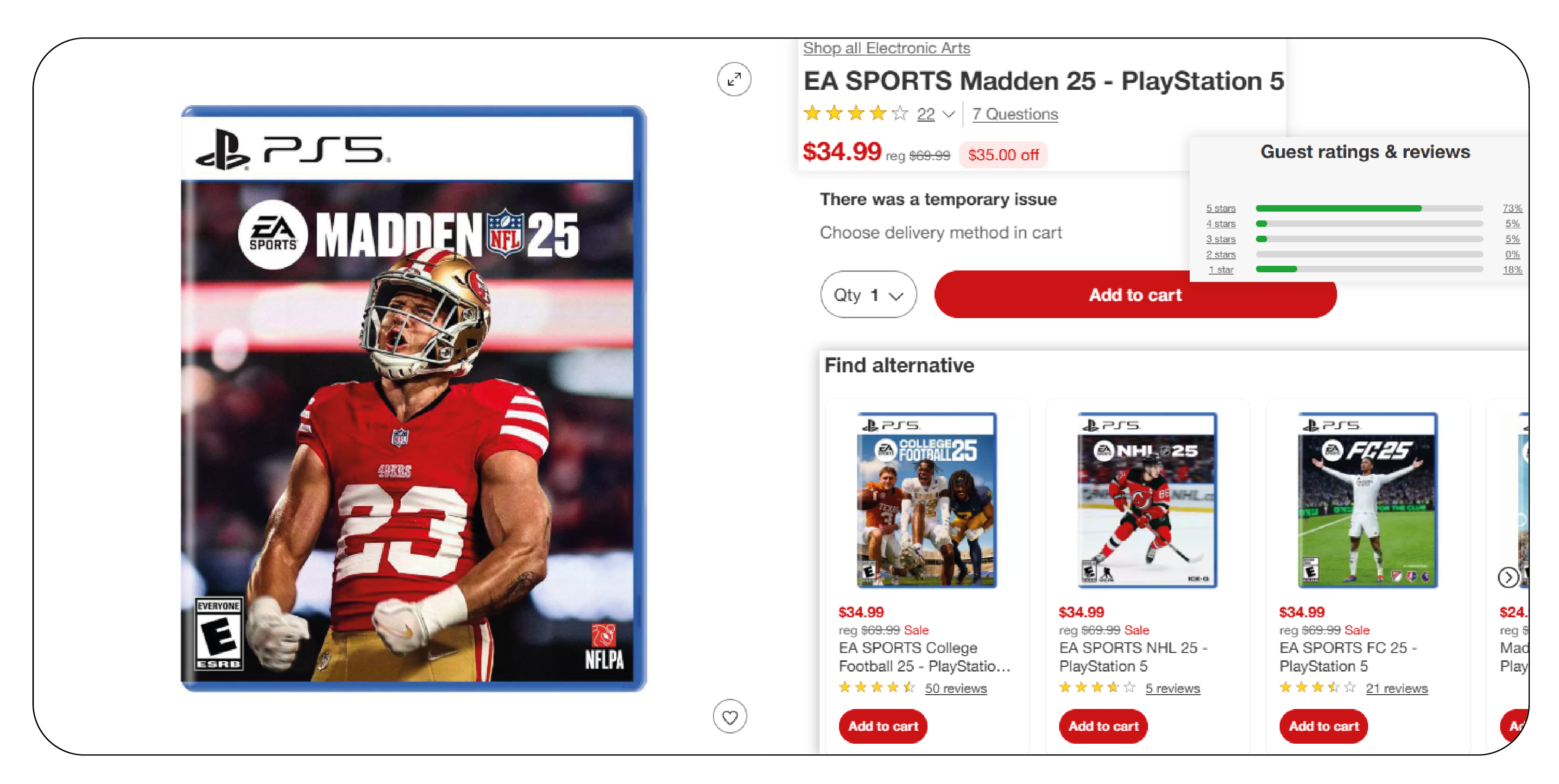

Extracting Product Data Using Web Scraping

When scraping product data, you typically want to extract:

- Product name

- Price

- Description

- Image URLs

- Product reviews and ratings

- Availability

Identifying Product Data on Web Pages

Most e-commerce websites structure their product information within specific HTML tags like div, span, or li. Use CSS selectors or XPath expressions to target these elements and extract data.

Handling Product Listings, Prices, and Descriptions

Example:
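Scraped prices and descriptions usually arrive as raw text and need normalizing before they can be compared or stored. The sketch below is one possible approach using only the JDK. The class name `PriceParser` is hypothetical, and the parser assumes that "," groups thousands and "." marks decimals, which will not hold for every locale.

```java
import java.math.BigDecimal;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class PriceParser {

    // Matches the first number in the text, e.g. 1,299.99 or 15.
    // Assumption: "," groups thousands and "." is the decimal point.
    private static final Pattern NUMBER =
            Pattern.compile("\\d+(?:,\\d{3})*(?:\\.\\d+)?");

    // Returns the numeric price, or empty when the text contains no number.
    static Optional<BigDecimal> parsePrice(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        Matcher m = NUMBER.matcher(raw);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new BigDecimal(m.group().replace(",", "")));
    }

    // Trims the text and collapses runs of whitespace into single spaces.
    static String cleanText(String raw) {
        return raw == null ? "" : raw.strip().replaceAll("\\s+", " ");
    }

    public static void main(String[] args) {
        System.out.println(parsePrice("$1,299.99"));
        System.out.println(parsePrice("Out of stock"));
        System.out.println(cleanText("  Soft \n cotton   shirt "));
    }
}
```

Returning `Optional` makes the missing-price case explicit, so listings without a price are skipped or flagged rather than silently stored as zero.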

Dealing with Pagination

Pagination is common on e-commerce sites. Scraping multiple pages requires navigating through page links and scraping data from each page. You can extract the next page's URL and repeat the scraping process.
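The follow-the-next-link loop might look like the sketch below. `Paginator` and the `nextHrefOf` hook are hypothetical. In a real scraper the hook would fetch the page, extract its data, and return the href of the "next" link. The page cap and the set of seen URLs guard against endless loops.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;
import java.util.function.Function;

final class Paginator {

    // Resolves a possibly relative "next" href against the current page URL.
    static String resolve(String currentUrl, String nextHref) {
        return URI.create(currentUrl).resolve(nextHref).toString();
    }

    // Follows next-page links from startUrl and returns the URLs visited, in order.
    // Stops when there is no next link, a URL repeats, or maxPages is reached.
    static List<String> crawl(String startUrl, int maxPages,
                              Function<String, Optional<String>> nextHrefOf) {
        List<String> visited = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        String url = startUrl;
        while (url != null && visited.size() < maxPages && seen.add(url)) {
            visited.add(url);
            final String current = url;
            // Hypothetical hook: scrape the current page and report its next link.
            url = nextHrefOf.apply(current)
                    .map(href -> resolve(current, href))
                    .orElse(null);
        }
        return visited;
    }
}
```

One caveat: `java.net.URI` implements the older RFC 2396 resolution rules, so a query-only href such as `?page=2` can resolve differently than it would in a browser. It is worth testing against the site's actual links.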

Practical Example: Scraping Product Data from an E-Commerce Website

Let’s look at a simple example of scraping product names and prices using Jsoup.
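The following is a minimal sketch rather than a definitive implementation. It assumes the Jsoup dependency is available and that each product sits in a `div.product` element containing `.product-name` and `.product-price` children. Those class names, like the sample HTML, are invented for illustration and will differ on any real site.

```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;

import java.util.LinkedHashMap;
import java.util.Map;

final class ProductScraper {

    // Maps product name -> price text for every "div.product" card, in page order.
    static Map<String, String> extractProducts(Document doc) {
        Map<String, String> products = new LinkedHashMap<>();
        for (Element card : doc.select("div.product")) {
            Element name = card.selectFirst(".product-name");
            Element price = card.selectFirst(".product-price");
            if (name == null || price == null) {
                continue; // skip cards that are missing a name or a price
            }
            products.put(name.text(), price.text());
        }
        return products;
    }

    public static void main(String[] args) {
        // Inline sample markup; for a live page, something like
        // Jsoup.connect(url).userAgent("example-scraper/1.0").timeout(10_000).get()
        // would produce the Document instead.
        String html = "<div class=\"product\">"
                + "<span class=\"product-name\">Ceramic Mug</span>"
                + "<span class=\"product-price\">$8.50</span></div>"
                + "<div class=\"product\">"
                + "<span class=\"product-name\">Desk Lamp</span>"
                + "<span class=\"product-price\">$24.00</span></div>";
        Document doc = Jsoup.parse(html);
        extractProducts(doc).forEach((name, price) -> System.out.println(name + " -> " + price));
    }
}
```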

Best Practices for Web Scraping in Java

- Respecting Website’s Terms of Service: Always check the website's terms of service before scraping.

- Using User-Agent Headers: Set a User-Agent header on every request so that the traffic resembles an ordinary browser and is less likely to be blocked.

- Handling Rate Limits: Use delays between requests to avoid overwhelming the server.

- Error Handling: Always handle exceptions and edge cases where data might be missing.
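The rate-limiting and error-handling points above can be combined in a small retry helper. This is a sketch under assumptions: `PoliteRetry` is a hypothetical name, the task is expected to signal failure with an unchecked exception, and the delay doubles after each failed attempt (exponential backoff).

```java
import java.time.Duration;
import java.util.function.Supplier;

final class PoliteRetry {

    // Runs the task up to maxAttempts times, pausing between attempts for
    // baseDelay, then 2x, then 4x, and so on. Rethrows the last failure.
    static <T> T withRetry(Supplier<T> task, int maxAttempts, Duration baseDelay) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return task.get();
            } catch (RuntimeException e) {
                last = e;
            }
            if (attempt < maxAttempts - 1) {
                pause(baseDelay.multipliedBy(1L << attempt));
            }
        }
        throw last;
    }

    // Sleeps for the given duration; also usable as a fixed delay between requests.
    static void pause(Duration delay) {
        try {
            Thread.sleep(delay.toMillis());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
    }
}
```

A call such as `PoliteRetry.withRetry(() -> fetchPage(url), 3, Duration.ofSeconds(1))` would try a hypothetical `fetchPage` up to three times, waiting one second and then two seconds between attempts.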

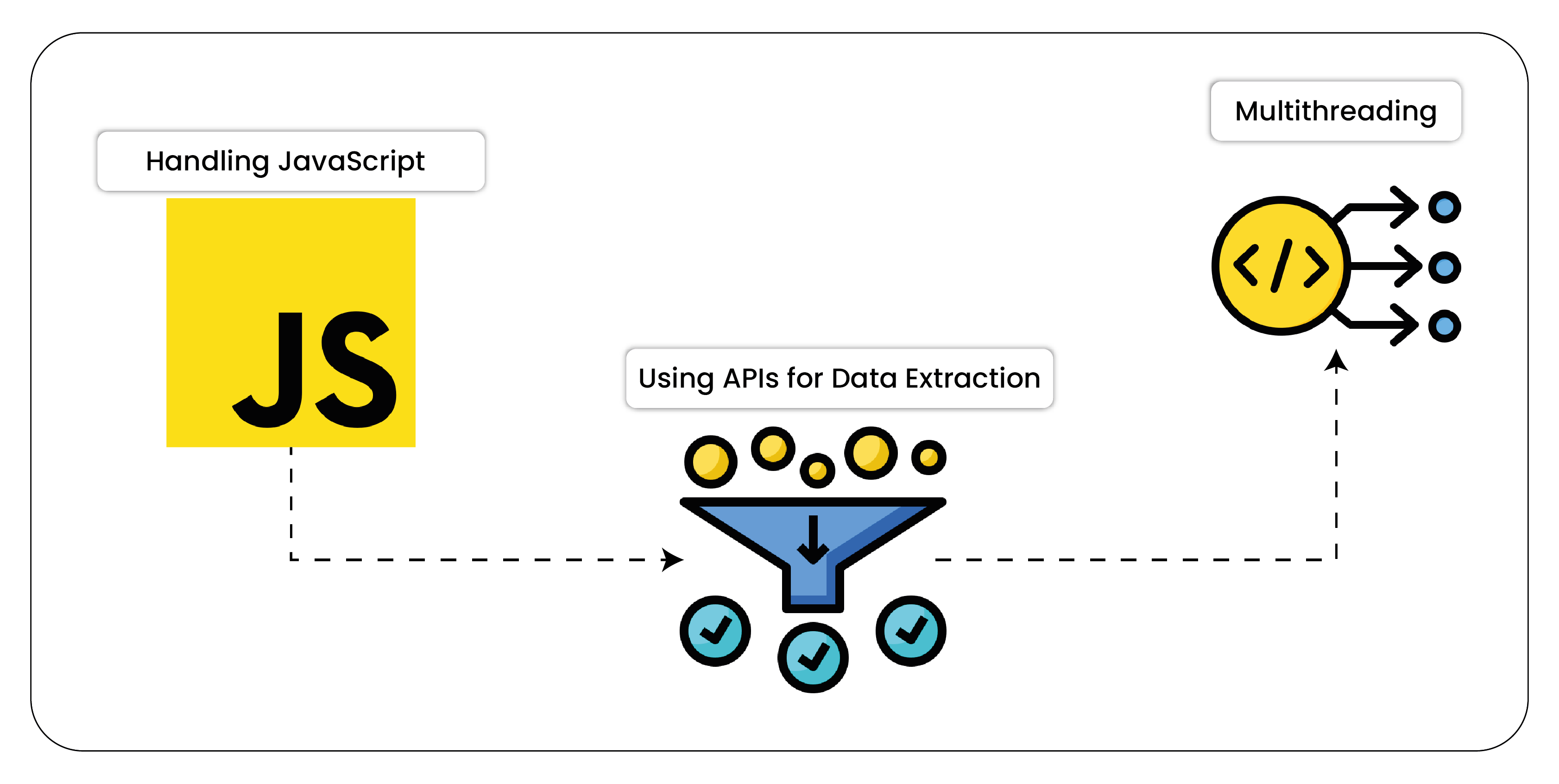

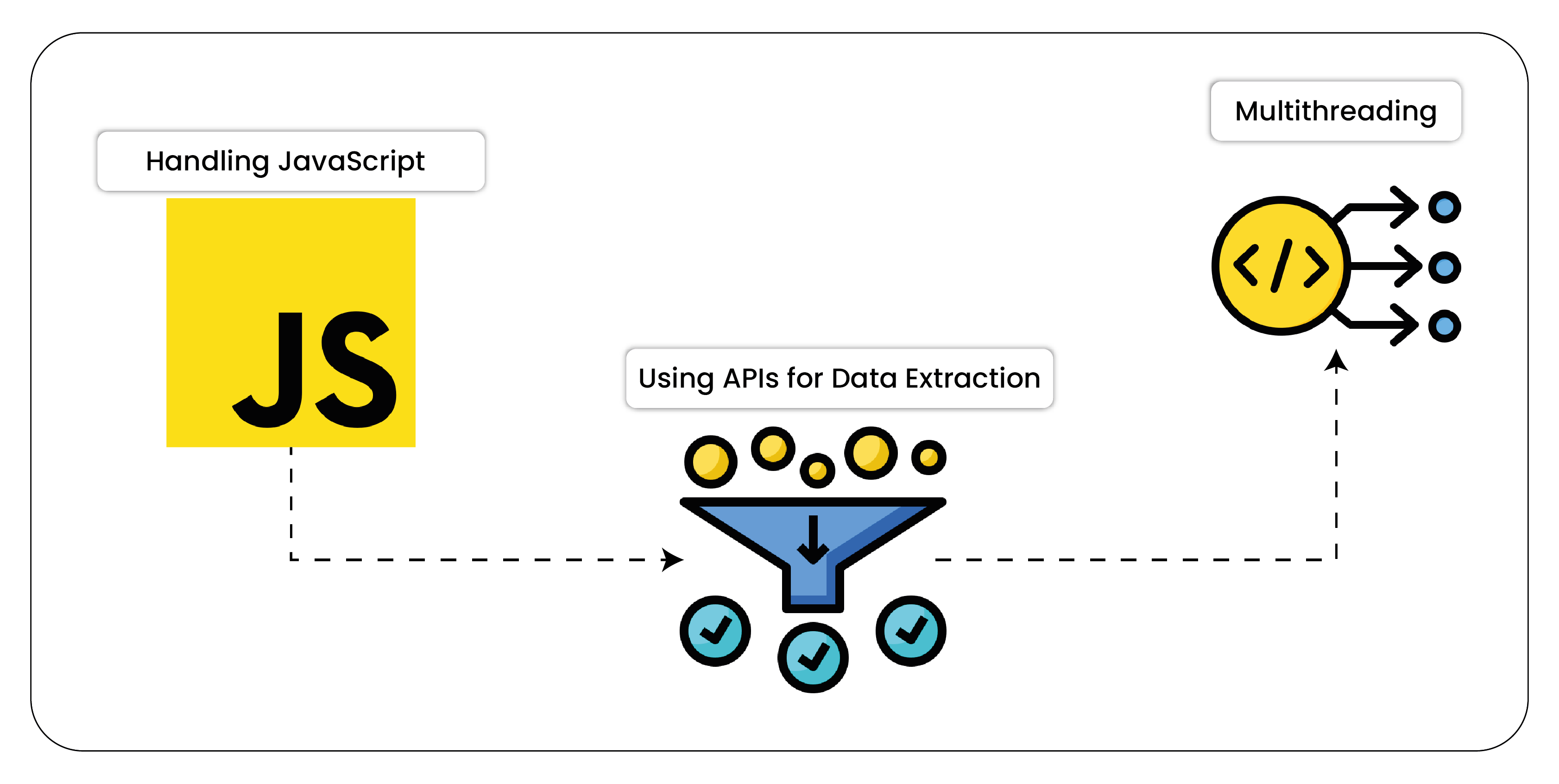

Advanced Topics in Java Web Scraping

- Handling JavaScript: Use Selenium WebDriver to interact with JavaScript-heavy websites.

- Using APIs for Data Extraction: Many websites provide APIs to access data more easily and efficiently than scraping HTML.

- Multithreading: You can speed up the scraping process by using Java’s multithreading capabilities.
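As an illustration of the multithreading point, the sketch below runs one scraping task per URL on a fixed-size thread pool. `ParallelScraper` and the `scrapeOne` function are hypothetical; `scrapeOne` stands in for whatever fetch-and-parse logic a project uses. Keep the pool small so that concurrency does not overwhelm the target server.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

final class ParallelScraper {

    // Applies scrapeOne to every URL on a fixed-size thread pool and returns
    // the results in the same order as the input list.
    static <T> List<T> scrapeAll(List<String> urls, int threads, Function<String, T> scrapeOne) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<T>> futures = new ArrayList<>();
            for (String url : urls) {
                Callable<T> task = () -> scrapeOne.apply(url);
                futures.add(pool.submit(task));
            }
            List<T> results = new ArrayList<>();
            for (Future<T> future : futures) {
                results.add(future.get()); // blocks until that task finishes
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while scraping", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A scraping task failed", e.getCause());
        } finally {
            pool.shutdownNow(); // release the threads whether or not all tasks succeeded
        }
    }
}
```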

Scaling Web Scraping Projects

For large-scale scraping, consider distributing the work across multiple machines or using cloud-based infrastructure. Tools such as Apache Kafka (for queuing URLs and results) and Apache Spark (for processing the collected data) can help manage large data sets.

Legal and Ethical Considerations

While web scraping is a useful tool, ensure that it’s done ethically and legally. Avoid scraping personal data without permission, and respect robots.txt and ToS.
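Respecting robots.txt can be partly automated. The sketch below is deliberately simplified and is not a complete implementation of the robots exclusion rules: it reads only `Disallow` lines in groups addressed to all agents (`User-agent: *`) and ignores `Allow` rules, wildcards, and agent-specific groups. The class name `RobotsRules` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

final class RobotsRules {

    private final List<String> disallowed = new ArrayList<>();

    // Collects Disallow prefixes from groups that apply to all agents.
    // Simplification: Allow rules, wildcards, and named agents are ignored.
    static RobotsRules parse(String robotsTxt) {
        RobotsRules rules = new RobotsRules();
        boolean appliesToAll = false;
        boolean inAgentLines = false; // true while reading consecutive User-agent lines
        for (String line : robotsTxt.split("\\R")) {
            String trimmed = line.replaceFirst("#.*", "").trim(); // drop comments
            int colon = trimmed.indexOf(':');
            if (colon < 0) {
                continue;
            }
            String field = trimmed.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = trimmed.substring(colon + 1).trim();
            if (field.equals("user-agent")) {
                boolean star = value.equals("*");
                appliesToAll = inAgentLines ? (appliesToAll || star) : star;
                inAgentLines = true;
            } else {
                inAgentLines = false;
                if (field.equals("disallow") && appliesToAll && !value.isEmpty()) {
                    rules.disallowed.add(value);
                }
            }
        }
        return rules;
    }

    // True when the path does not start with any collected Disallow prefix.
    boolean isAllowed(String path) {
        return disallowed.stream().noneMatch(path::startsWith);
    }
}
```

A scraper could fetch `/robots.txt` once per host, parse it, and call `isAllowed` before requesting each path. For production use, a library that implements the full rules is the safer choice.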

Conclusion

In 2025, web scraping with Java remains a practical way for businesses to gather data from the web. As machine learning, AI, and anti-bot measures continue to advance, scraping techniques will need to become more sophisticated as well. Understanding the legal, technical, and ethical challenges is essential for building a sustainable scraping solution.
