Introduction to Web Scraping in PHP

Web scraping is a technique that developers and businesses use to extract data from websites for purposes such as product monitoring, competitive analysis, research, and data aggregation. PHP, a widely used server-side language, offers several tools and libraries for the job.

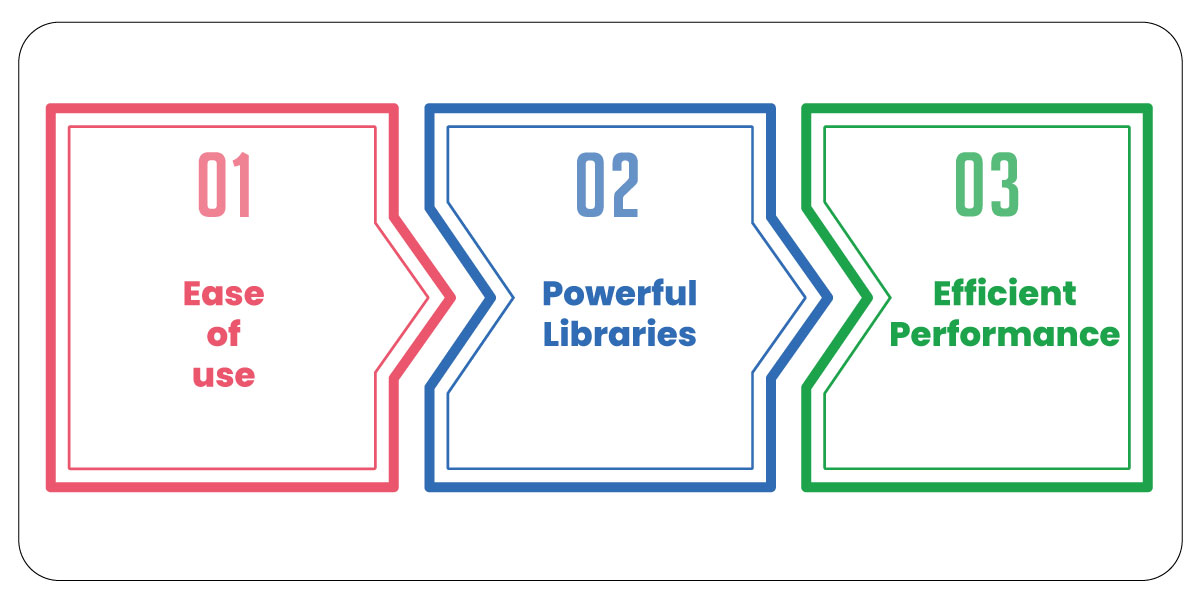

Why Use PHP for Web Scraping?

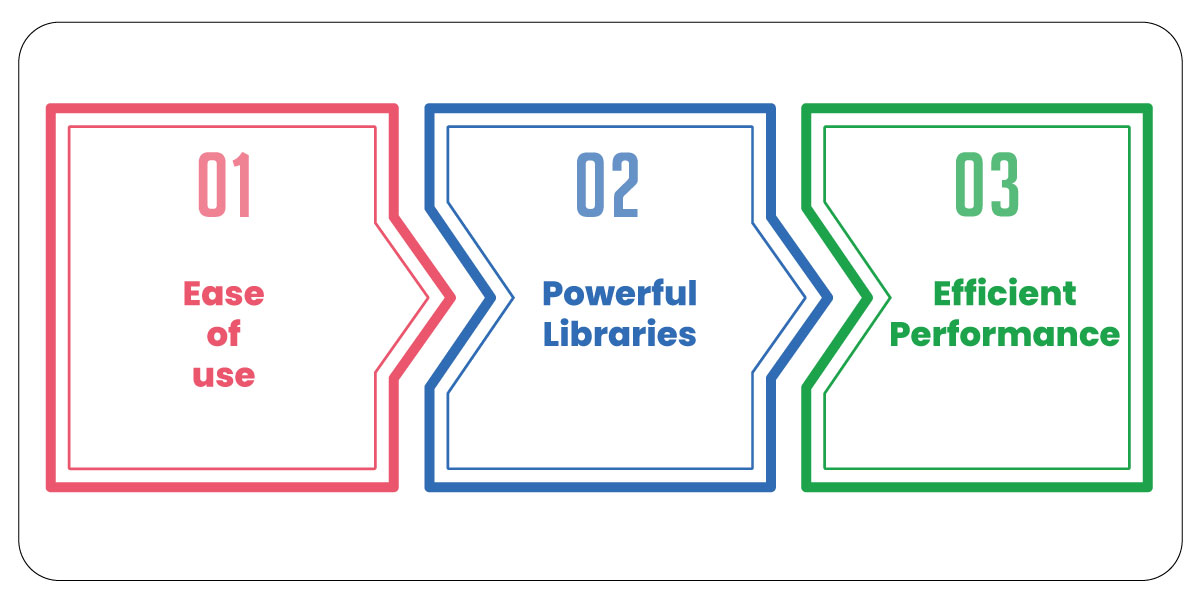

PHP has several advantages for web scraping:

- Ease of use: PHP is known for its simplicity and wide support for various libraries, making it easy to integrate into existing applications.

- Powerful Libraries: PHP offers the cURL extension and libraries like Goutte, which can be used for effective scraping.

- Efficient Performance: PHP can handle large amounts of data extraction and automate tasks like checking product prices or inventory on eCommerce websites.

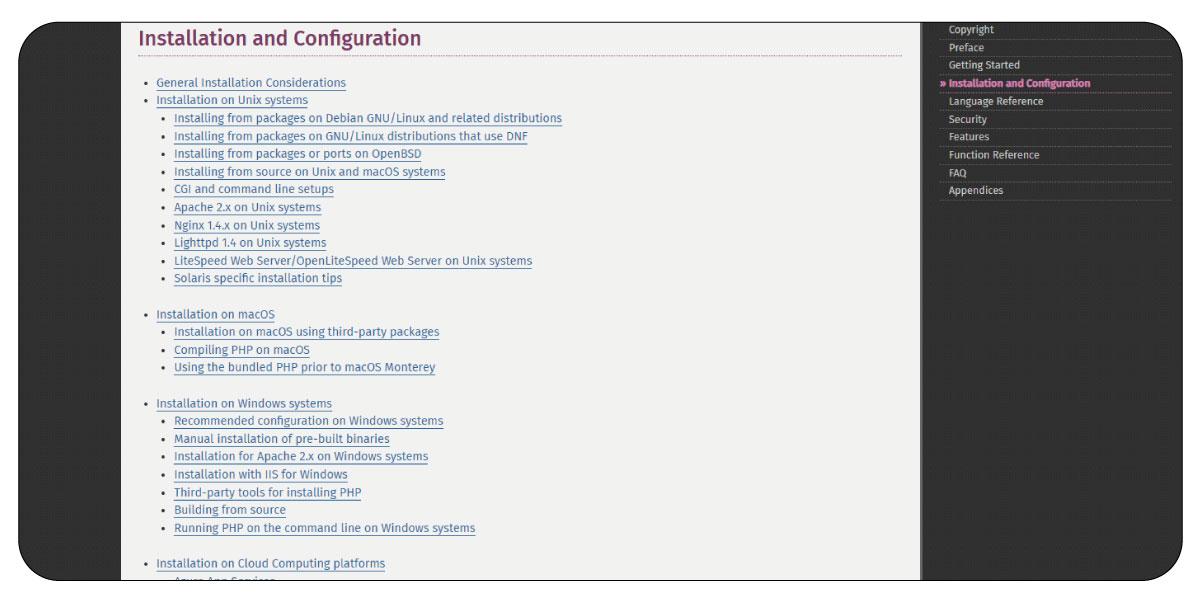

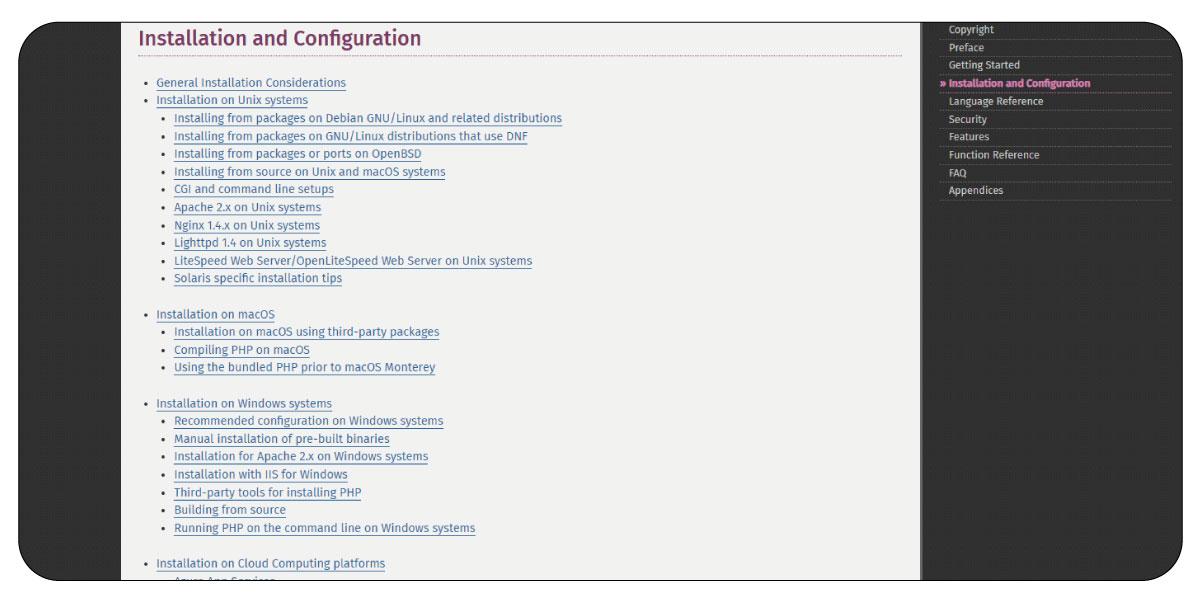

Setting Up Your PHP Environment for Scraping

Before diving into the code, ensure that your PHP environment is ready for web scraping:

1. Install PHP: Make sure you have the latest version of PHP installed.

2. Install Composer: Composer is a dependency manager for PHP, used to install libraries.

3. Install Necessary Libraries:

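   - Goutte (a simple web scraping library)

   - cURL (for making HTTP requests)

   - Symfony DomCrawler (for extracting data from HTML documents)

cURL is a PHP extension (ext-curl) that is enabled through your PHP installation rather than installed with Composer. You can install the other dependencies, along with Symfony's HTTP client, using Composer:

```bash
composer require fabpot/goutte
composer require symfony/dom-crawler
composer require symfony/http-client
```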

Basic Web Scraping with PHP Using Goutte

Step 1: Create a Simple PHP Scraper

Let's start with a simple PHP scraper that fetches the content of a webpage.

```php
<?php

require 'vendor/autoload.php';

use Goutte\Client;

// Initialize the Goutte client
$client = new Client();

// The URL to scrape
$url = 'https://example.com/products';

// Fetch the page; request() returns a Crawler for the response body
$crawler = $client->request('GET', $url);

// Check whether the request was successful (HTTP 200)
if ($client->getResponse()->getStatusCode() === 200) {
    echo "Page fetched successfully!";
} else {
    echo "Failed to fetch page.";
}
```

In this example:

- Goutte\Client is used to initiate the request to a URL.

- The request() method returns a Crawler object. Its filter() method, used in the next step, allows us to target specific elements, such as product titles, using CSS selectors.

Step 2: Extracting Product Data

Now, let's scrape detailed product information, such as names, prices, and images.

```php
// Extract product names, prices, and image URLs.
// Note: text() and attr() throw an InvalidArgumentException
// when the selector matches nothing inside the product node.
$crawler->filter('.product')->each(function ($node) {
    $productName = $node->filter('.product-title')->text();
    $productPrice = $node->filter('.product-price')->text();
    $productImage = $node->filter('.product-image img')->attr('src');

    echo "Product: " . $productName . "\n";
    echo "Price: " . $productPrice . "\n";
    echo "Image URL: " . $productImage . "\n\n";
});
```

This example extracts:

- Product name

- Product price

- Product image URL

Step 3: Handling Pagination

Most eCommerce websites have multiple pages of products. To handle pagination, we need to modify the scraper to navigate through multiple pages.

```php
// Loop through pages until there's no "Next" link
$page = 1;

while (true) {
    $url = 'https://example.com/products?page=' . $page;
    $crawler = $client->request('GET', $url);

    // Extract product data
    $crawler->filter('.product')->each(function ($node) {
        $productName = $node->filter('.product-title')->text();
        $productPrice = $node->filter('.product-price')->text();
        $productImage = $node->filter('.product-image img')->attr('src');

        echo "Product: " . $productName . "\n";
        echo "Price: " . $productPrice . "\n";
        echo "Image URL: " . $productImage . "\n\n";
    });

    // Check if there is a "Next" page link
    $nextPageLink = $crawler->filter('.pagination .next')->count();

    if ($nextPageLink > 0) {
        $page++;
    } else {
        break; // Exit the loop if no next page is found
    }
}
```

In this case, the scraper will loop through all pages of products until it reaches the last page.

Handling Dynamic Content with cURL

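Some websites use JavaScript to load data dynamically. Goutte does not execute JavaScript, so it only sees the initial HTML and may not be enough in such cases. PHP's cURL does not execute JavaScript either, but it gives you full control over headers and lets you request the AJAX endpoint that the page itself calls.

Once cURL has fetched the HTML content behind a dynamically loaded page, you can parse it with DOMDocument and DOMXPath.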
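A minimal sketch of this approach follows. It assumes the markup uses the same `.product`, `.product-title`, `.product-price`, and `.product-image` classes as the earlier examples. The function names `fetchHtml`, `classPredicate`, and `extractProducts` are illustrative, and the `X-Requested-With` header is only expected by some endpoints. The demo at the end parses an inline HTML sample so that it runs without network access.

```php
<?php

/**
 * Fetch a URL with cURL and return the response body.
 * Throws on transport errors or non-2xx status codes.
 */
function fetchHtml(string $url): string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true, // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true, // follow redirects
        CURLOPT_TIMEOUT        => 15,   // give up after 15 seconds
        // Assumption: some AJAX endpoints expect this header; many do not
        CURLOPT_HTTPHEADER     => ['X-Requested-With: XMLHttpRequest'],
    ]);

    $body   = curl_exec($ch);
    $status = curl_getinfo($ch, CURLINFO_HTTP_CODE);
    $error  = curl_error($ch);

    if ($body === false) {
        throw new RuntimeException("cURL error: $error");
    }
    if ($status < 200 || $status >= 300) {
        throw new RuntimeException("Unexpected HTTP status: $status");
    }

    return $body;
}

/** Build an XPath predicate that matches one whole CSS class name. */
function classPredicate(string $class): string
{
    return sprintf('contains(concat(" ", normalize-space(@class), " "), " %s ")', $class);
}

/** Parse product blocks out of an HTML string with DOMDocument and DOMXPath. */
function extractProducts(string $html): array
{
    if (trim($html) === '') {
        return [];
    }

    $doc = new DOMDocument();
    // Real-world HTML is rarely valid, so collect parser warnings quietly
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    $products = [];

    foreach ($xpath->query('//*[' . classPredicate('product') . ']') as $node) {
        // string(...) yields the first match's text, or '' when nothing matches
        $products[] = [
            'name'  => trim($xpath->evaluate('string(.//*[' . classPredicate('product-title') . '])', $node)),
            'price' => trim($xpath->evaluate('string(.//*[' . classPredicate('product-price') . '])', $node)),
            'image' => trim($xpath->evaluate('string(.//*[' . classPredicate('product-image') . ']//img/@src)', $node)),
        ];
    }

    return $products;
}

// Offline demo on a made-up HTML sample
$sampleHtml = '<div class="product">'
    . '<h2 class="product-title">Widget</h2>'
    . '<span class="product-price">$9.99</span>'
    . '<div class="product-image"><img src="/img/widget.png"></div>'
    . '</div>';

print_r(extractProducts($sampleHtml));
```

For a live page, the two functions combine as `extractProducts(fetchHtml($url))`. If the endpoint returns JSON rather than HTML, `json_decode()` on the response body would replace the DOM parsing step.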

Dealing with Anti-Scraping Techniques

Many websites employ anti-scraping measures to prevent automated data extraction. Here are some techniques to deal with them:

1. User-Agent Spoofing: Change your user-agent header to mimic a real browser.

2. IP Rotation: Use proxy servers or VPNs to rotate IPs and avoid detection.

3. CAPTCHA Handling: Solve CAPTCHAs using services like 2Captcha or AntiCaptcha if needed.

4. Rate Limiting: Avoid overwhelming the server with too many requests in a short period. Introduce delays between requests.

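For example, to send a browser-like User-Agent header with cURL:

```php
// $ch is a cURL handle created earlier with curl_init()
curl_setopt($ch, CURLOPT_HTTPHEADER, [
    'User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
]);
```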
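The rate-limiting point can be sketched in a similar way. The helper below, with the hypothetical name `fetchPolitely`, pauses for a random interval between requests. The 1 to 3 second default range is an assumption, not a recommendation from any particular site, and the fetch step is passed in as a callable so the helper works with either Goutte or cURL.

```php
<?php

/**
 * Call $fetch for each URL, pausing a random interval between requests.
 *
 * @param string[] $urls
 * @param callable $fetch      function (string $url): mixed
 * @param float    $minSeconds shortest pause between requests
 * @param float    $maxSeconds longest pause between requests
 * @return array   results keyed by URL
 */
function fetchPolitely(array $urls, callable $fetch, float $minSeconds = 1.0, float $maxSeconds = 3.0): array
{
    // Work in microseconds and make sure the range is valid for random_int()
    $minMicros = max(0, (int) round($minSeconds * 1000000));
    $maxMicros = max($minMicros, (int) round($maxSeconds * 1000000));

    $urls = array_values($urls);
    $last = count($urls) - 1;
    $results = [];

    foreach ($urls as $i => $url) {
        $results[$url] = $fetch($url);

        // No need to wait after the final request
        if ($i < $last) {
            $delay = random_int($minMicros, $maxMicros);
            sleep(intdiv($delay, 1000000)); // whole seconds
            usleep($delay % 1000000);       // remaining fraction of a second
        }
    }

    return $results;
}
```

Any callable that accepts a URL will do, for instance a closure that wraps `$client->request('GET', $url)` from the Goutte examples.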

Storing and Analyzing Scraped Data

Once you've scraped product data, you may need to store it in a database or analyze it further.

Store Data in a MySQL Database
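One way to store the scraped rows is through PDO with a prepared statement. The sketch below assumes a `products` table with `name`, `price`, and `image_url` columns. The table, the column names, the function name `saveProducts`, and the connection details in the comments are all hypothetical and would need to match your own schema.

```php
<?php

/**
 * Insert scraped products using a prepared statement inside a transaction.
 * Expects the connection to use PDO::ERRMODE_EXCEPTION so failures are thrown.
 *
 * @param PDO   $pdo      an open PDO connection
 * @param array $products rows with 'name', 'price', and 'image_url' keys
 * @return int  number of rows inserted
 */
function saveProducts(PDO $pdo, array $products): int
{
    $stmt = $pdo->prepare(
        'INSERT INTO products (name, price, image_url) VALUES (:name, :price, :image_url)'
    );

    $pdo->beginTransaction();
    try {
        foreach ($products as $product) {
            // Bound parameters keep scraped text from being interpreted as SQL
            $stmt->execute([
                ':name'      => $product['name'],
                ':price'     => $product['price'],
                ':image_url' => $product['image_url'],
            ]);
        }
        $pdo->commit();
    } catch (Throwable $e) {
        // Undo the partial batch, then let the caller handle the error
        $pdo->rollBack();
        throw $e;
    }

    return count($products);
}

// Example wiring (hypothetical host, database, and credentials):
//
// $pdo = new PDO(
//     'mysql:host=localhost;dbname=scraper;charset=utf8mb4',
//     'db_user',
//     'db_password',
//     [PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION]
// );
// saveProducts($pdo, [
//     ['name' => 'Widget', 'price' => 9.99, 'image_url' => '/img/widget.png'],
// ]);
```

Wrapping the batch in a transaction means a failure partway through leaves no half-written page of products behind.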

Analyze Scraped Data

After storing the data, you can perform analysis on the prices, trends, or availability of the products over time.
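As a starting point for price analysis, the sketch below turns scraped price strings into numbers and computes a simple summary. It assumes US-style formatting, with "," as the thousands separator and "." as the decimal point. The function names `parsePrice` and `summarizePrices` and the sample values are illustrative.

```php
<?php

/**
 * Convert a scraped price string such as "$1,299.99" to a float.
 * Returns null when no usable number is found.
 */
function parsePrice(string $text): ?float
{
    // Drop everything except digits and the decimal point
    $clean = preg_replace('/[^0-9.]/', '', $text);

    return is_numeric($clean) ? (float) $clean : null;
}

/**
 * Summarize a list of price strings, skipping values that cannot be parsed.
 * Returns null when no price could be parsed at all.
 */
function summarizePrices(array $priceTexts): ?array
{
    $prices = [];
    foreach ($priceTexts as $text) {
        $price = parsePrice($text);
        if ($price !== null) {
            $prices[] = $price;
        }
    }

    if ($prices === []) {
        return null;
    }

    return [
        'count'   => count($prices),
        'min'     => min($prices),
        'max'     => max($prices),
        'average' => array_sum($prices) / count($prices),
    ];
}

// Made-up sample values, including one entry that has no price
print_r(summarizePrices(['$10.00', '$20.00', 'N/A', '$1,000.00']));
```

Running the same summary on each scrape and keeping the results with a timestamp gives a basic view of how prices move over time.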

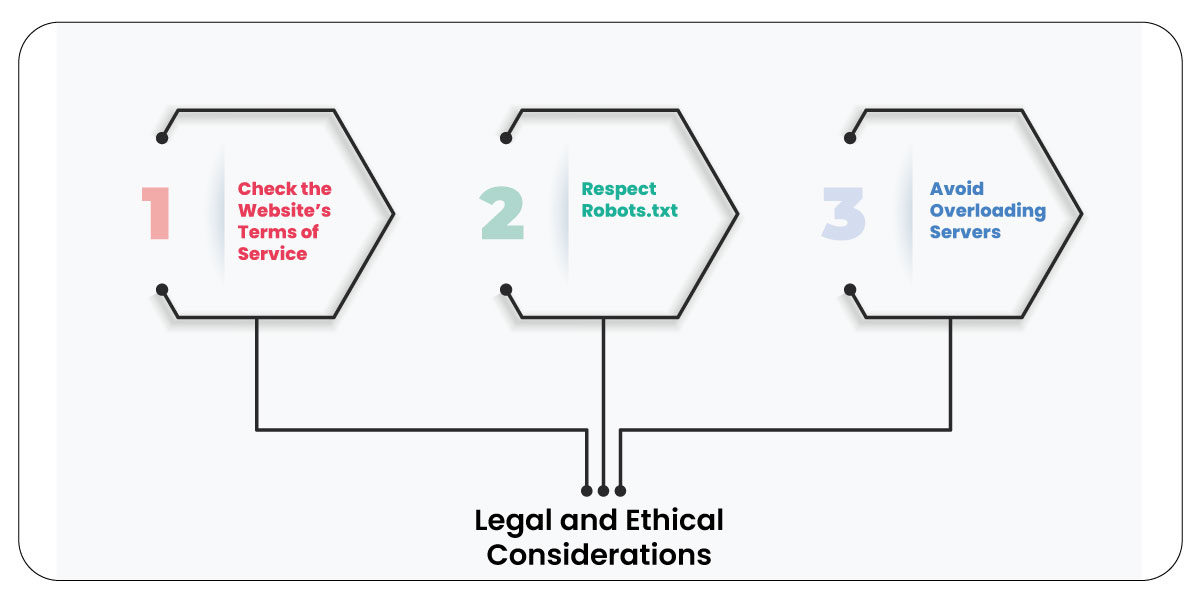

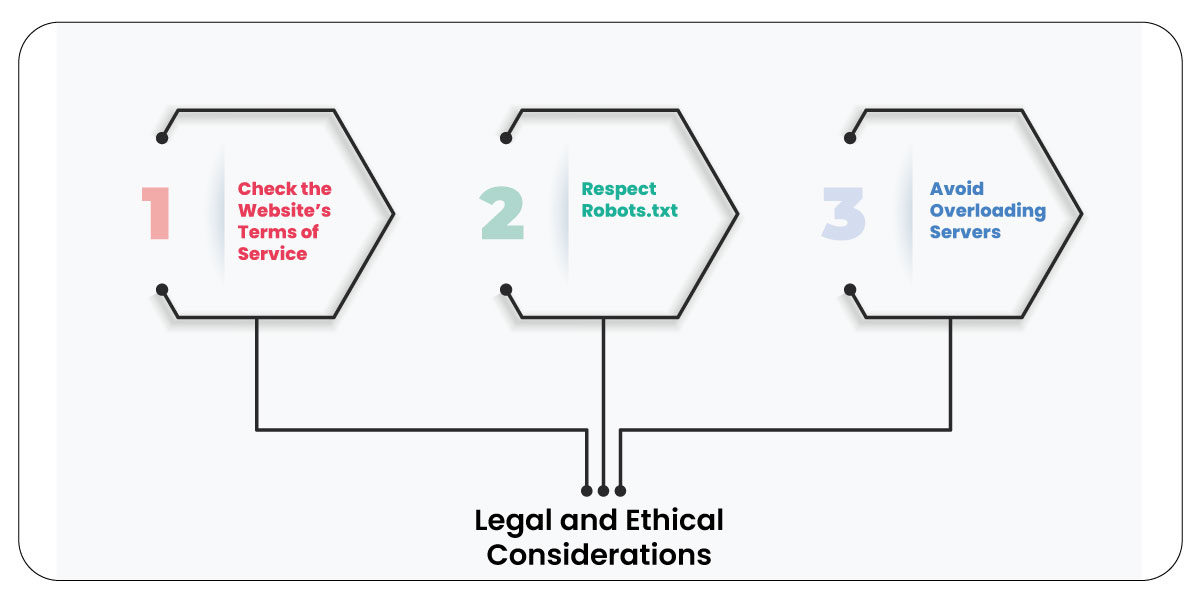

Legal and Ethical Considerations

Web scraping can sometimes be a gray area legally. It’s important to:

- Check the Website’s Terms of Service: Ensure you are not violating the site’s policies.

- Respect Robots.txt: Follow the guidelines in a website’s robots.txt file.

- Avoid Overloading Servers: Scrape responsibly and respect rate limits to avoid disrupting the website’s performance.
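As an illustration of the robots.txt point, here is a deliberately simplified check. It handles `User-agent`, `Allow`, and `Disallow` lines with plain path prefixes and the usual longest-match rule, but it does not support the `*` and `$` wildcards or partial user-agent matching, so treat it as a sketch rather than a complete parser. The function name `isAllowedByRobots` and the sample file are hypothetical.

```php
<?php

/**
 * Decide whether $path may be fetched according to the given robots.txt text.
 * Simplified: exact user-agent tokens and plain prefix rules only.
 */
function isAllowedByRobots(string $robotsTxt, string $path, string $userAgent = '*'): bool
{
    $groups = [];        // lowercase user-agent token => list of [isAllow, prefix]
    $currentAgents = [];
    $inRules = false;    // true once the current group has seen a rule line

    foreach (preg_split('/\r\n|\r|\n/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line)); // strip comments
        if ($line === '' || strpos($line, ':') === false) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);

        if ($field === 'user-agent') {
            if ($inRules) { // a User-agent line after rules starts a new group
                $currentAgents = [];
                $inRules = false;
            }
            $token = strtolower($value);
            $currentAgents[] = $token;
            $groups[$token] = $groups[$token] ?? [];
        } elseif ($field === 'allow' || $field === 'disallow') {
            $inRules = true;
            if ($value === '') {
                continue; // an empty rule imposes no restriction
            }
            foreach ($currentAgents as $agent) {
                $groups[$agent][] = [$field === 'allow', $value];
            }
        }
    }

    // Prefer the group for this agent, then fall back to the "*" group
    $rules = $groups[strtolower($userAgent)] ?? $groups['*'] ?? [];

    $allowed = true;     // no matching rule means the path is allowed
    $bestLength = -1;
    foreach ($rules as [$isAllow, $prefix]) {
        $length = strlen($prefix);
        if (strncmp($path, $prefix, $length) !== 0) {
            continue;
        }
        // Longest match wins; on a tie, Allow takes precedence
        if ($length > $bestLength || ($length === $bestLength && $isAllow)) {
            $bestLength = $length;
            $allowed = $isAllow;
        }
    }

    return $allowed;
}

// Made-up robots.txt content for demonstration
$sampleRobots = "User-agent: *\nDisallow: /admin/\nAllow: /admin/public/\n";

var_dump(isAllowedByRobots($sampleRobots, '/products'));
var_dump(isAllowedByRobots($sampleRobots, '/admin/settings'));
```

In a scraper, this check would run once per URL before the request is sent, using the robots.txt file fetched from the site's root.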

Conclusion

Web scraping in PHP, especially for product data, is a powerful tool for businesses and developers to gather insights. With the right tools, such as Goutte, cURL, and Symfony’s DomCrawler, PHP makes it easy to extract data from websites. By following best practices and respecting legal considerations, you can successfully implement a product data scraping solution.
