Introduction

In the booming world of online grocery shopping, Dunzo has established itself as a prominent player, offering customers the convenience of having groceries, essentials, and food delivered directly to their doorstep. As the market grows, businesses and analysts are eager to collect data from platforms like Dunzo to track product availability, pricing trends, and consumer preferences. Web scraping is one of the most efficient ways to gather this information. This guide shows how to scrape grocery details from Dunzo using Python and popular libraries such as BeautifulSoup and Scrapy. Whether you're a developer gathering data for analysis or a business looking for competitive insights, the step-by-step approach below will help you automate extraction and collect data for informed decision-making in the online grocery space.

Why Scrape Grocery Details from Dunzo?

Before diving into the process, it's worth understanding why scraping real-time grocery data can be valuable:

- Price Comparison: Scraping grocery data from Dunzo lets you compare prices across platforms and see how your pricing stacks up against competitors. With up-to-date product prices, you can check that your rates stay competitive, whether for individual products or bundles. Regular Dunzo price scraping also lets you track pricing trends and adjust your pricing strategy accordingly.

- Product Availability Tracking: Scraping product availability from Dunzo is useful for businesses managing inventory. By tracking the availability of popular products or specific brands, you can keep high-demand items in stock and avoid lost sales. Regular availability scraping also helps businesses forecast demand and optimize stock levels based on consumer trends, which is especially important for grocery stores offering quick commerce services.

- Market Insights: Data extracted from Dunzo can highlight consumer preferences through product popularity, discounts, and categories. These insights allow companies to tailor their product offerings to the demands of their target market. Analyzing this data can help create personalized shopping experiences and design better promotional strategies.

- Competitive Edge: Continuously monitoring competitors' pricing and product offerings on Dunzo helps businesses stay ahead of the curve. Scraped product data provides key information on pricing trends, product availability, and the sales strategies competitors use. This information allows businesses to adapt and refine their own strategies to maintain a competitive advantage in the dynamic grocery market.

Now that we've established the benefits, let's look at how you can start scraping grocery data from Dunzo.

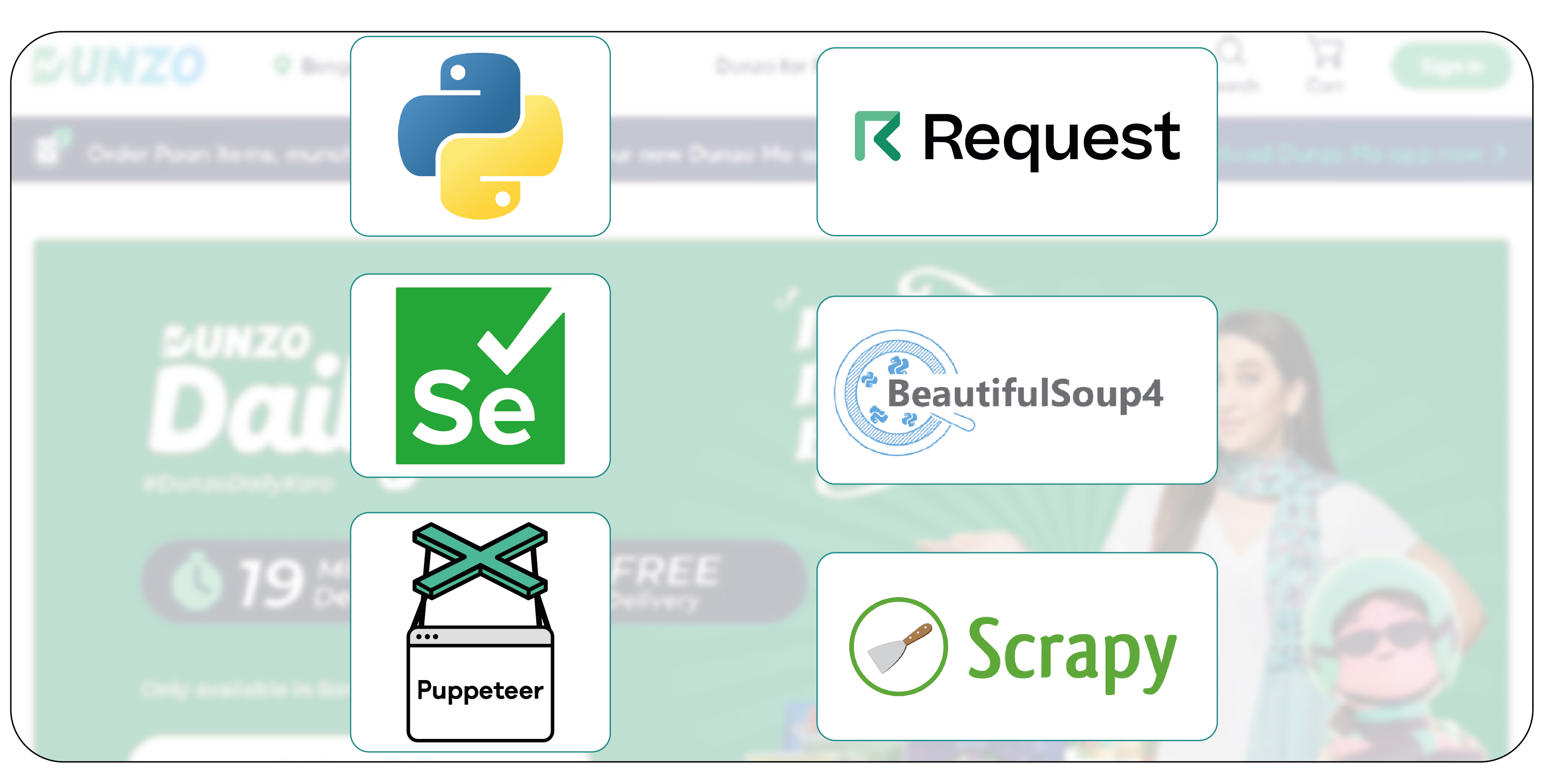

Tools You'll Need

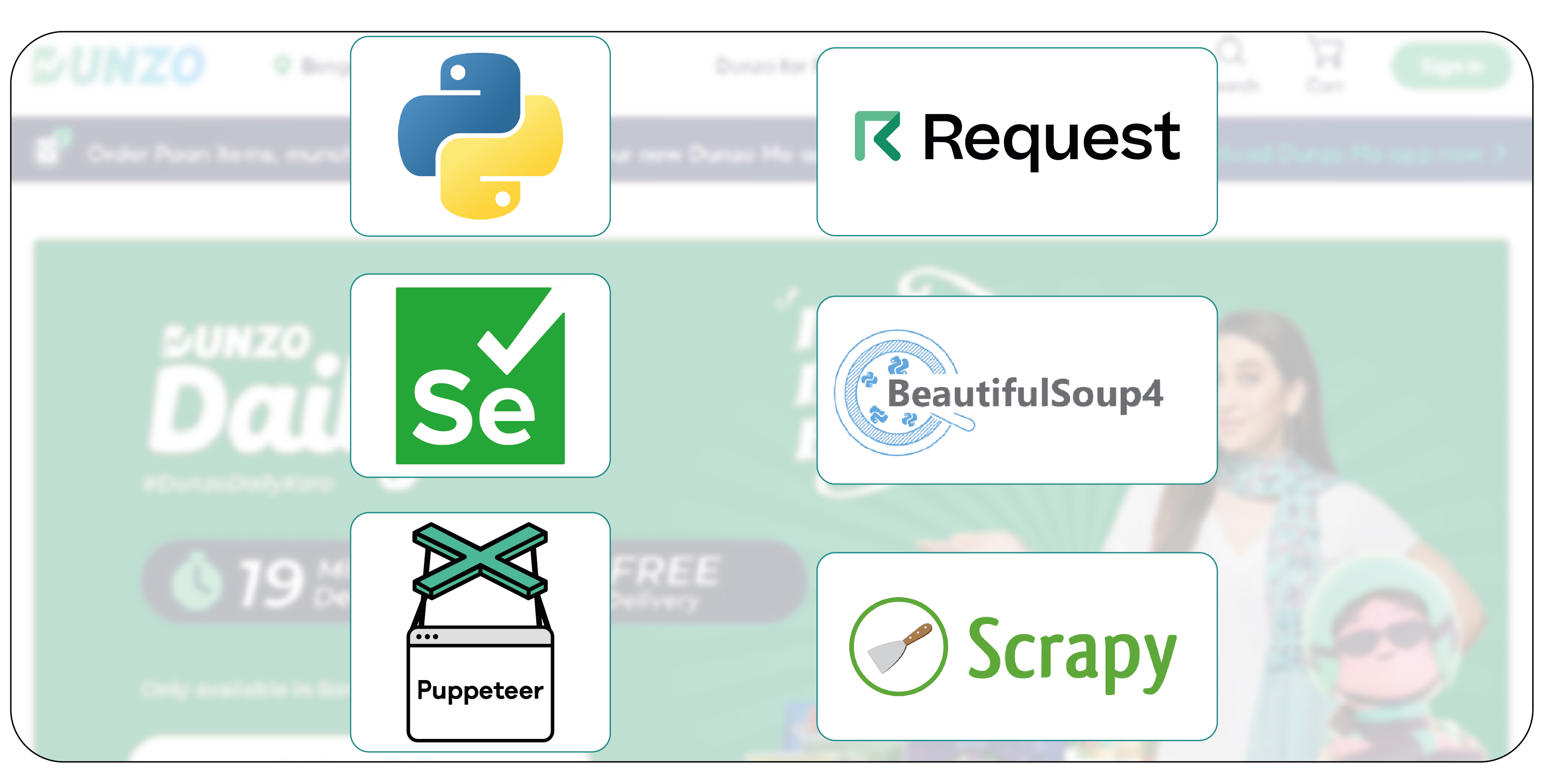

To begin scraping Dunzo grocery data, you'll need the following tools:

- Python: A versatile programming language that's widely used for web scraping and data extraction tasks.

- BeautifulSoup: A Python library for parsing HTML and XML documents, making it easy to extract specific data from web pages.

- Scrapy: A Python framework designed for large-scale web scraping and crawling projects.

- Requests: A Python library that simplifies making HTTP requests, which is necessary for retrieving web pages for scraping.

- Selenium: If the app's content is dynamically loaded (using JavaScript), Selenium can simulate browser actions such as scrolling and clicking.

- Pandas: Useful for storing and processing data in dataframes, especially when dealing with large datasets.

Step-by-Step Guide to Scrape Grocery Details from the Dunzo App

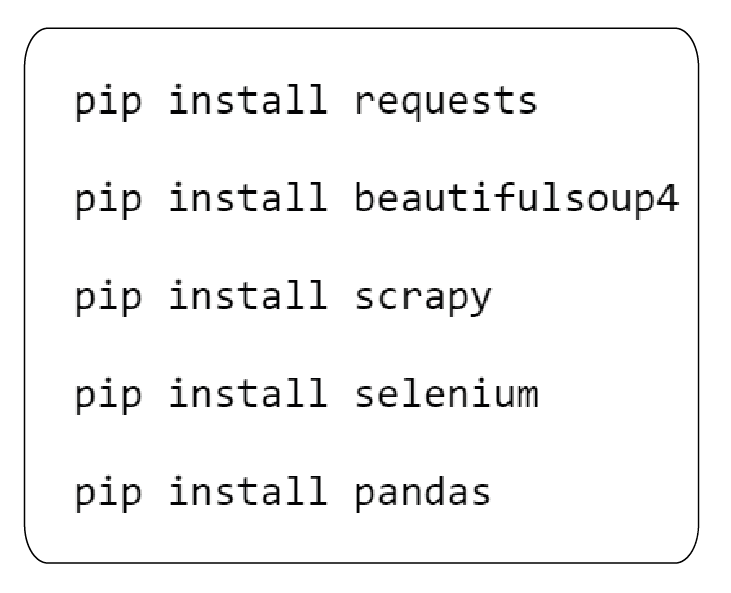

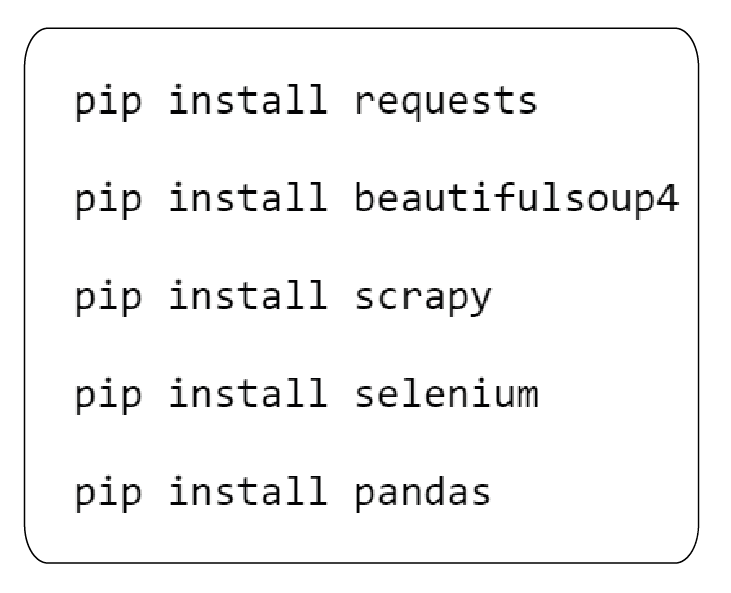

Step 1: Install Required Libraries

Before beginning, make sure you have Python installed on your system. Then, install the necessary libraries using pip:

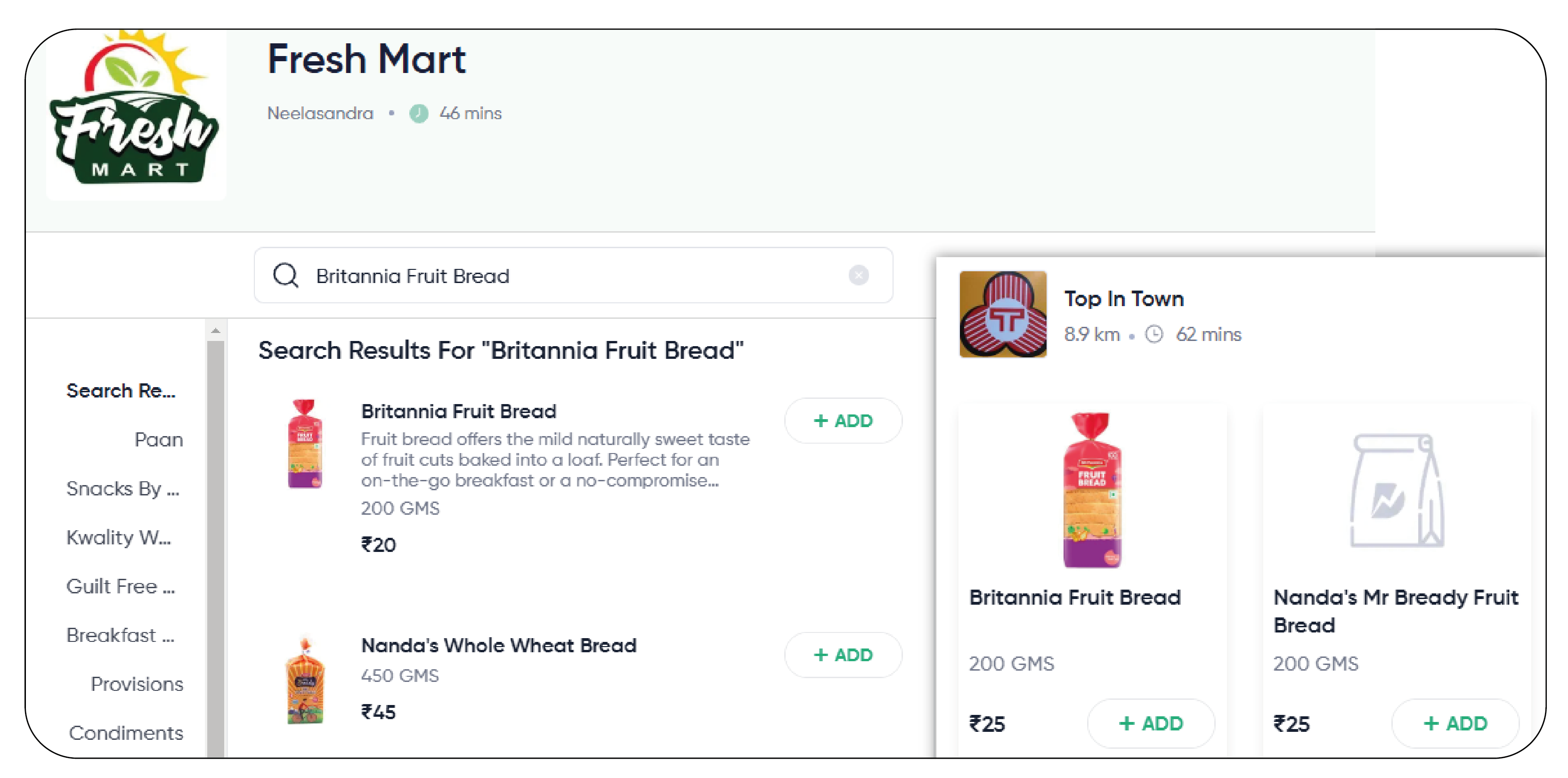

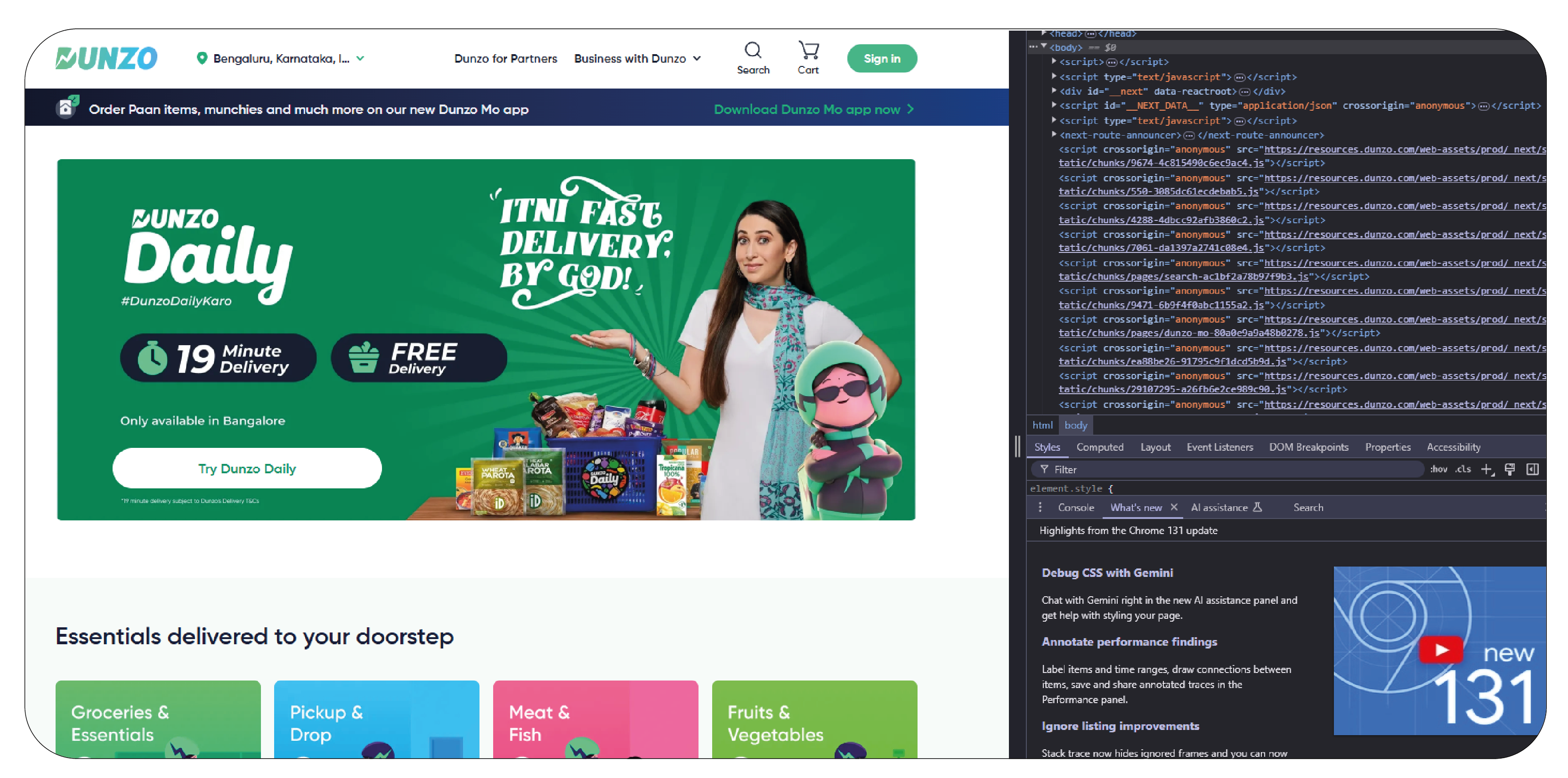

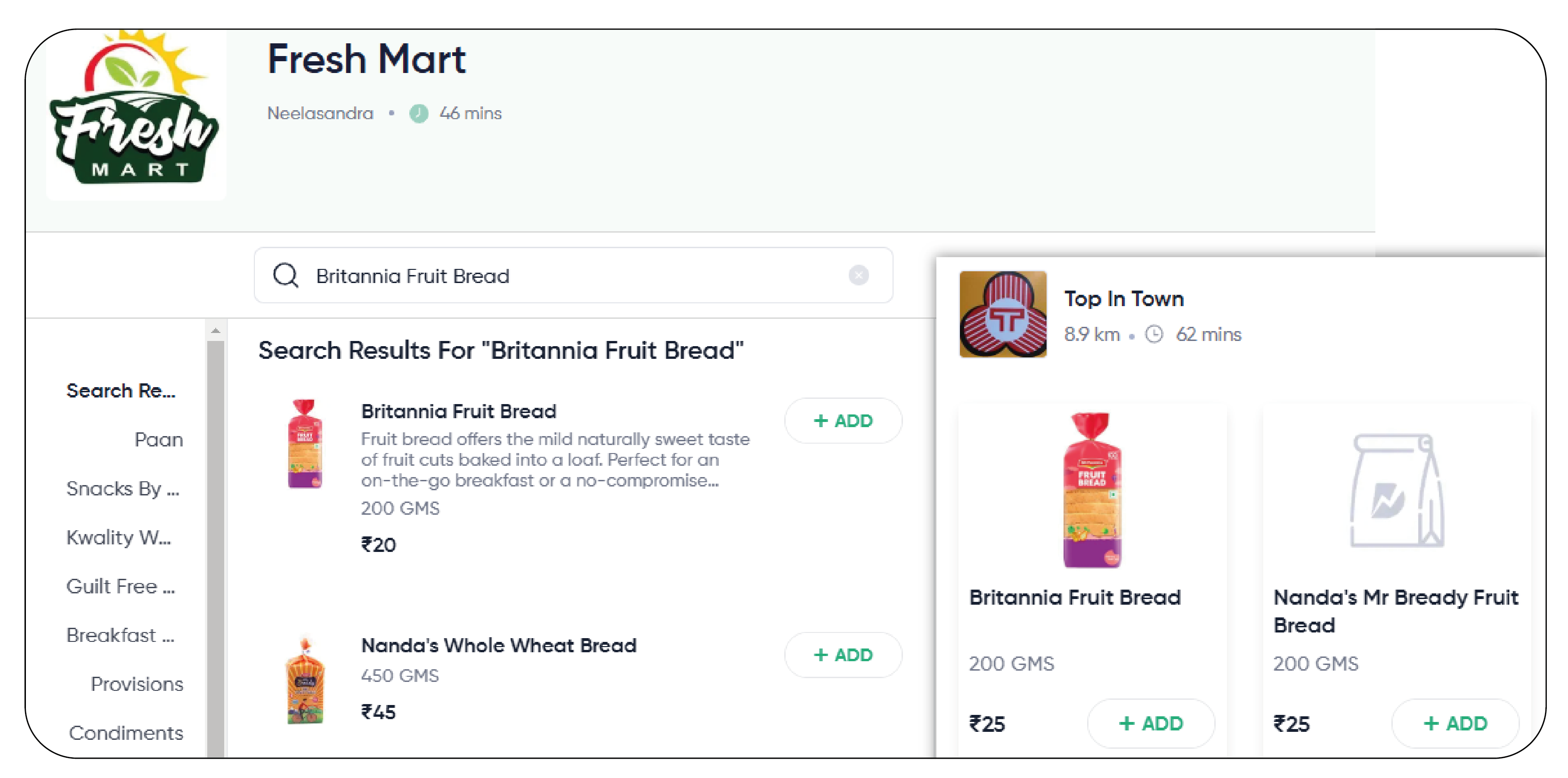

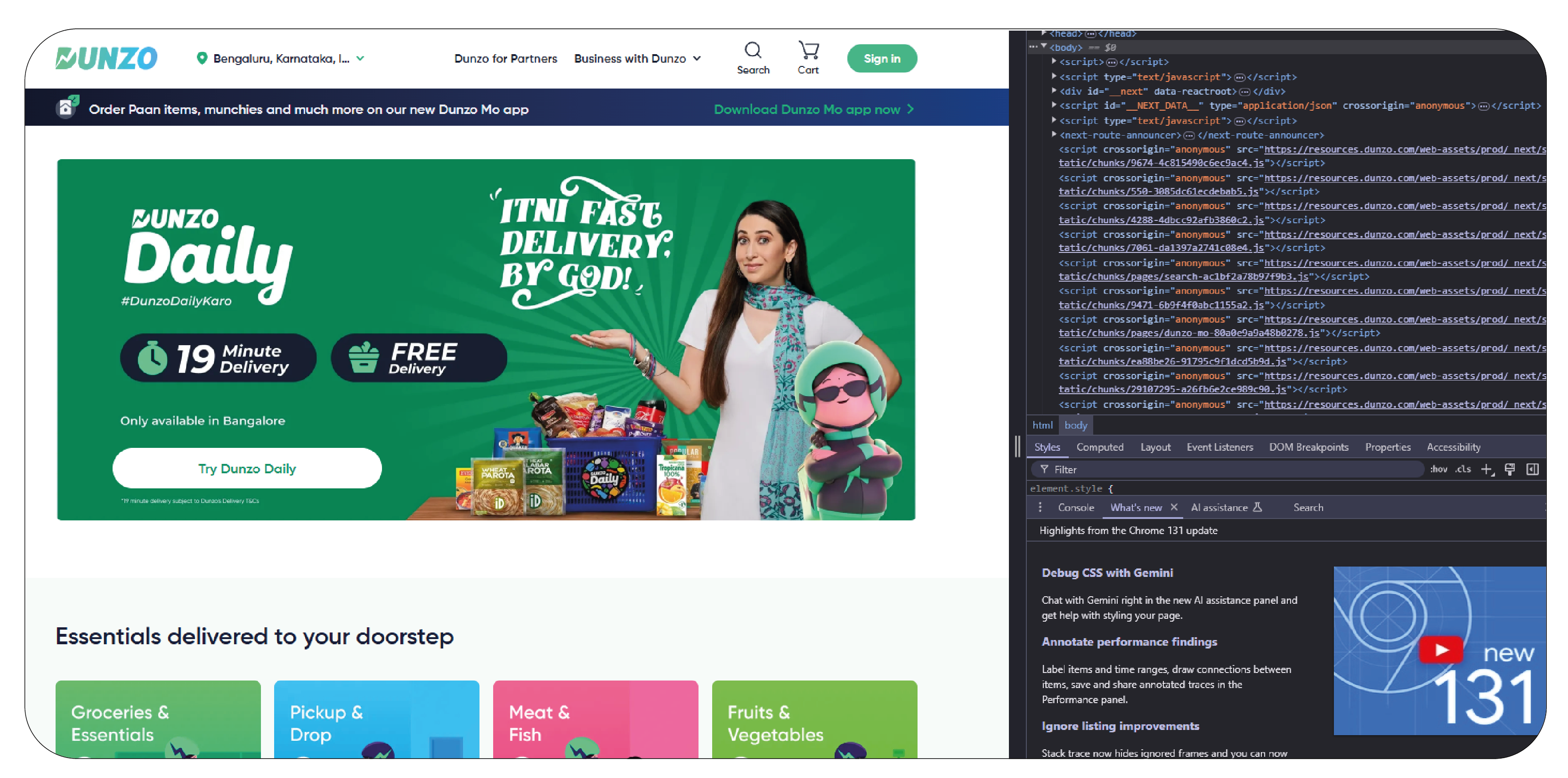
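The libraries listed in the tools section can typically be installed in one go with `pip install requests beautifulsoup4 scrapy selenium pandas`. As a quick sanity check afterwards, the following minimal sketch reports which of them Python cannot find. The helper name `missing_modules` is made up for this illustration; note that BeautifulSoup is installed as `beautifulsoup4` but imported as `bs4`.

```python
from importlib.util import find_spec

# Import names (not pip package names) for the libraries used in this guide.
# BeautifulSoup is installed as "beautifulsoup4" but imported as "bs4".
REQUIRED_MODULES = ["requests", "bs4", "scrapy", "selenium", "pandas"]


def missing_modules(module_names):
    """Return the names from module_names that Python cannot locate."""
    return [name for name in module_names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Not installed yet:", ", ".join(missing))
    else:
        print("All required libraries are available.")
```

If anything is reported as missing, rerun the pip command for that package before moving on.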

Step 2: Inspect the Website

The first step in scraping grocery data from a site is to inspect the page structure. This allows you to identify the HTML elements containing the data you need.

- Open the Dunzo website in your browser, right-click on the page, and choose "Inspect" (in Google Chrome) to open the developer tools.

- Use the Elements tab to navigate through the HTML structure of the page.

- Find the product details you want to scrape, such as product name, price, description, and category. Look for unique identifiers like class or id attributes to help locate the data.

- You may also want to monitor network requests to understand how the app dynamically loads content.

For dynamically loaded content (e.g., through JavaScript), you may need to use Selenium or Scrapy with browser automation.

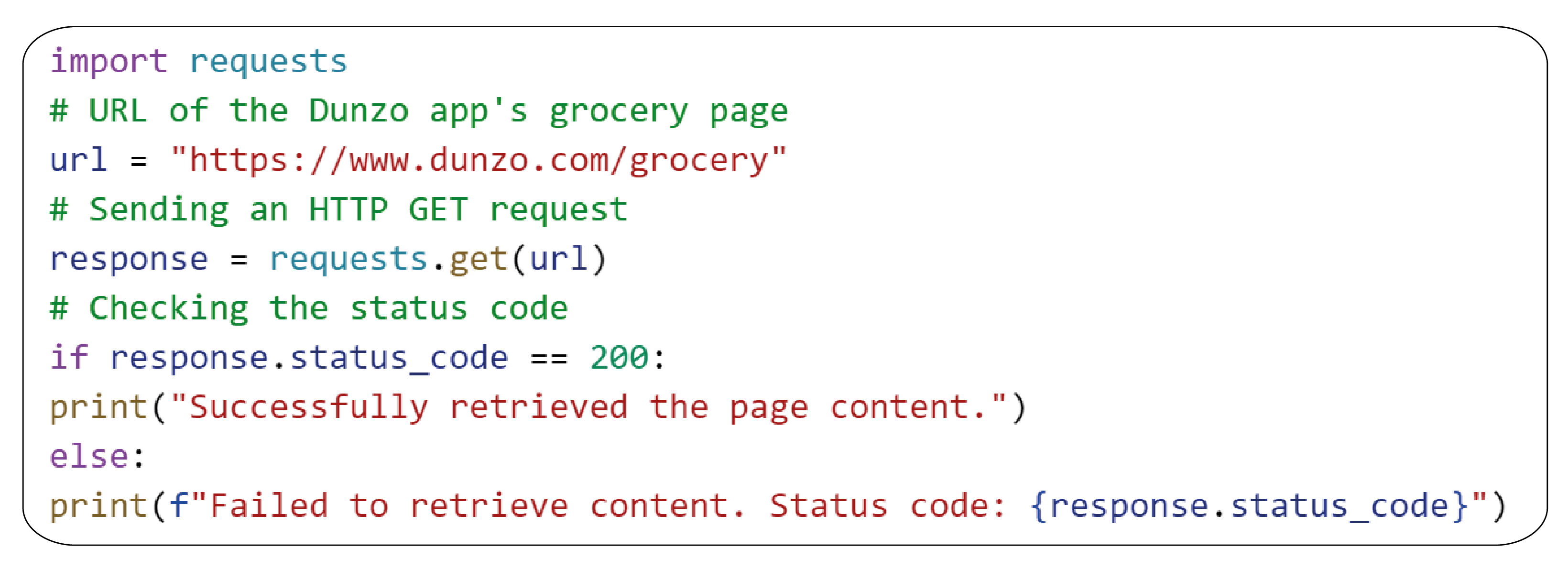

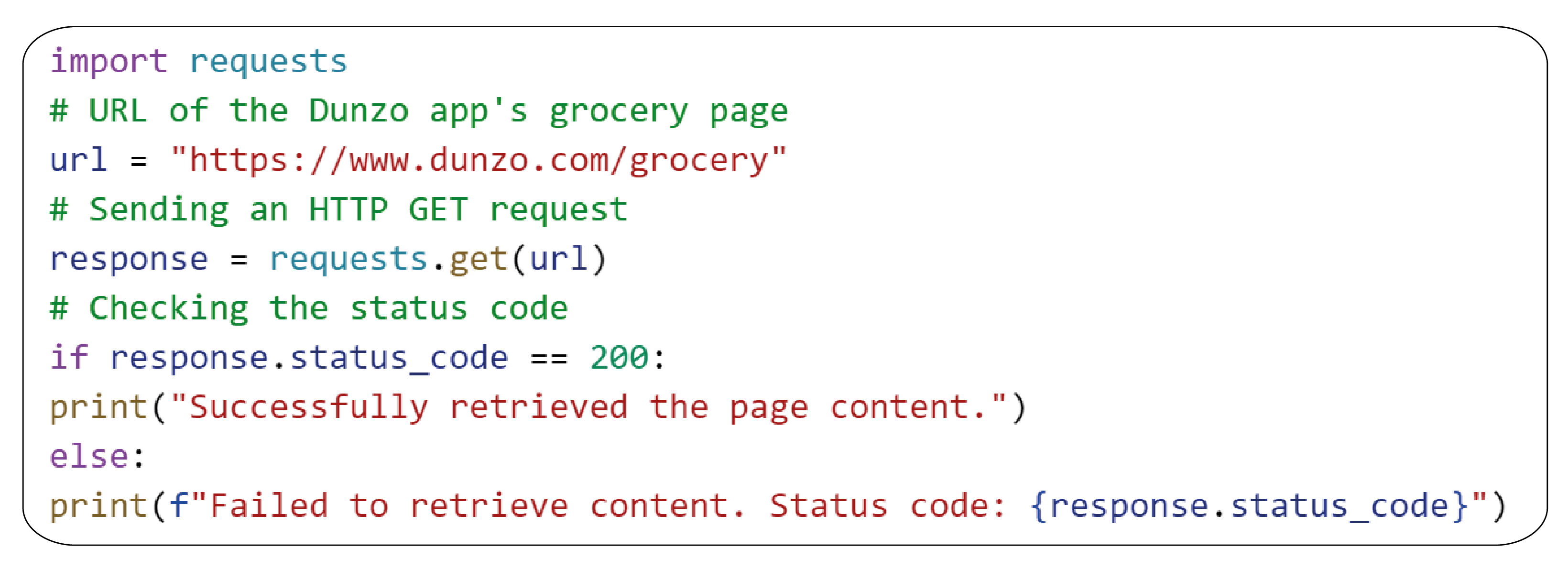

Step 3: Send Requests to the App's Server

Once you've identified the target data, the next step is to send a request to the server and retrieve the page's HTML content.

Here's an example of how to use the requests library in Python to send a request:

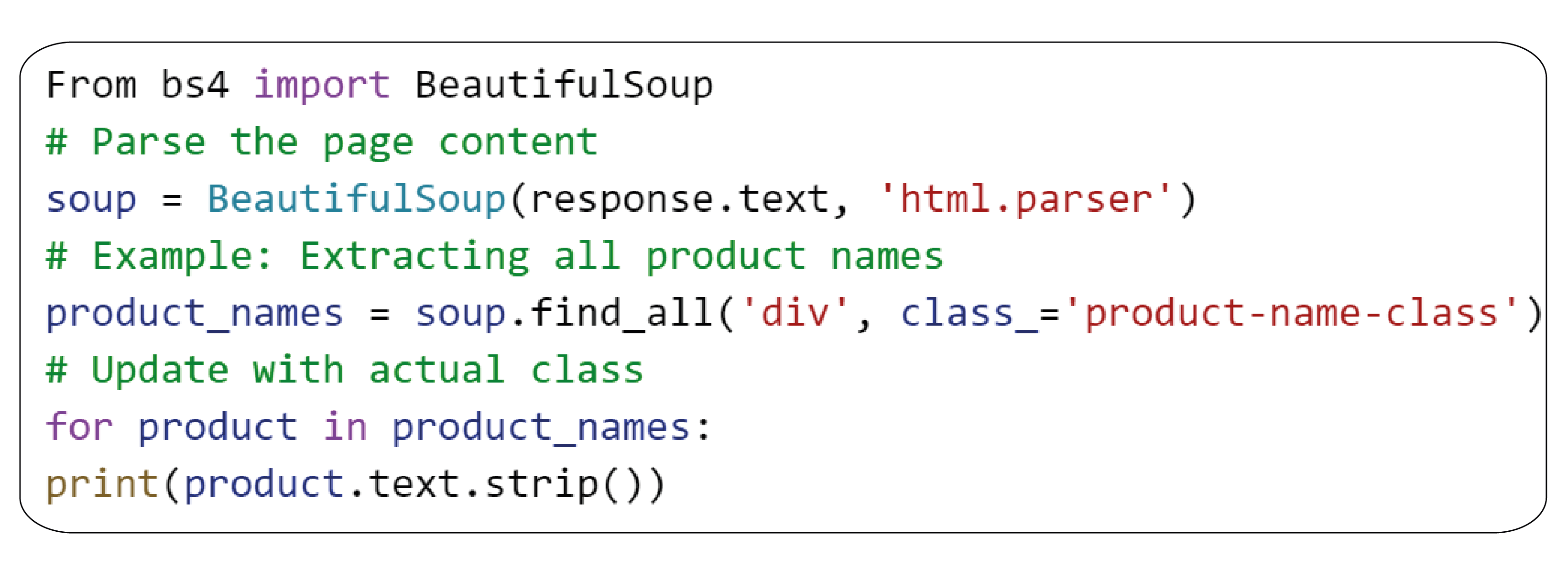

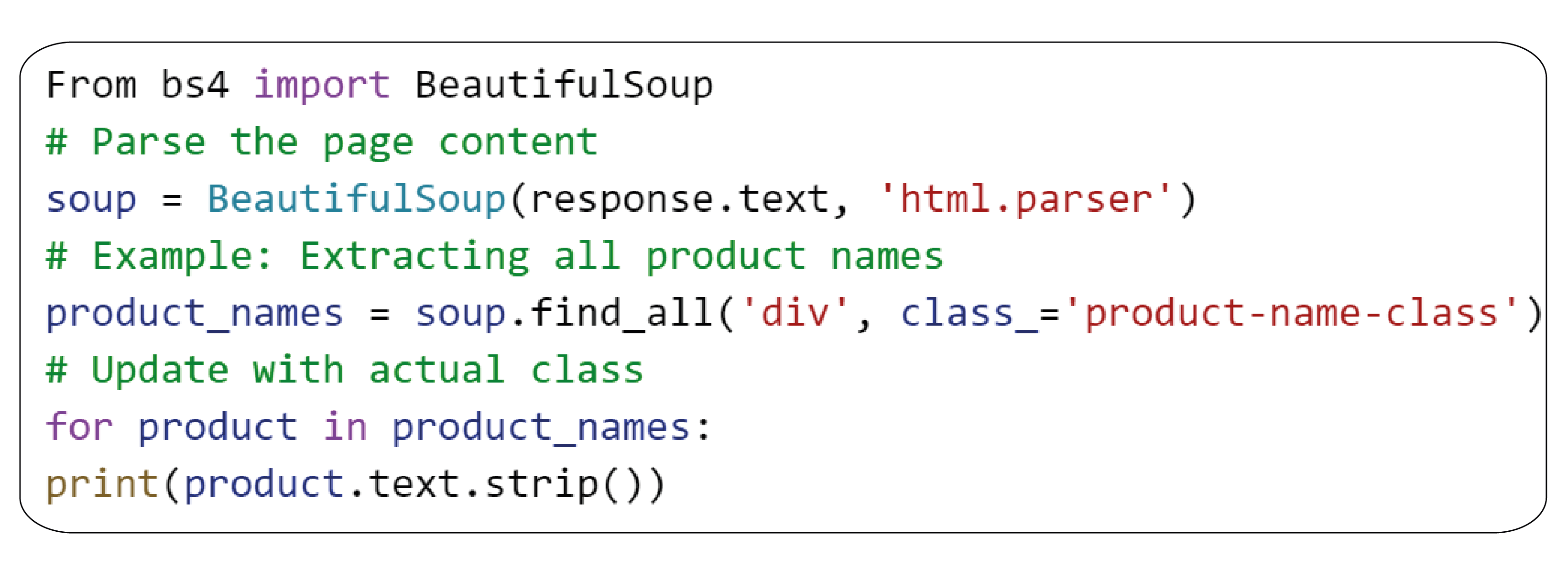
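As a rough sketch of this step, the snippet below wraps a `requests` call in a small function. The URL, the `User-Agent` string, and the function name `fetch_page` are placeholders chosen for illustration, not values taken from Dunzo; substitute the page you identified during inspection.

```python
import requests

# Hypothetical URL for illustration; replace it with the page you inspected.
EXAMPLE_URL = "https://www.example.com/groceries"

# A descriptive User-Agent. This value is an assumption, not a Dunzo requirement.
HEADERS = {"User-Agent": "grocery-research-bot/0.1 (contact: you@example.com)"}


def fetch_page(url, session=None, timeout=10):
    """Fetch a page and return its HTML text, raising on HTTP error codes."""
    http = session if session is not None else requests.Session()
    response = http.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # raises requests.HTTPError on 4xx/5xx responses
    return response.text
```

Calling `fetch_page(EXAMPLE_URL)` returns the raw HTML as a string. Passing in a shared `requests.Session` reuses the underlying connection across many requests, and the explicit timeout keeps a stalled server from hanging the script.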

Step 4: Parse the HTML Content

Once you've fetched the page content, the next step is parsing the HTML to extract relevant data.

BeautifulSoup can parse the fetched HTML and extract the grocery details. Based on the tags and classes you identified during the inspection, you can extract multiple attributes, such as product names, prices, and availability.

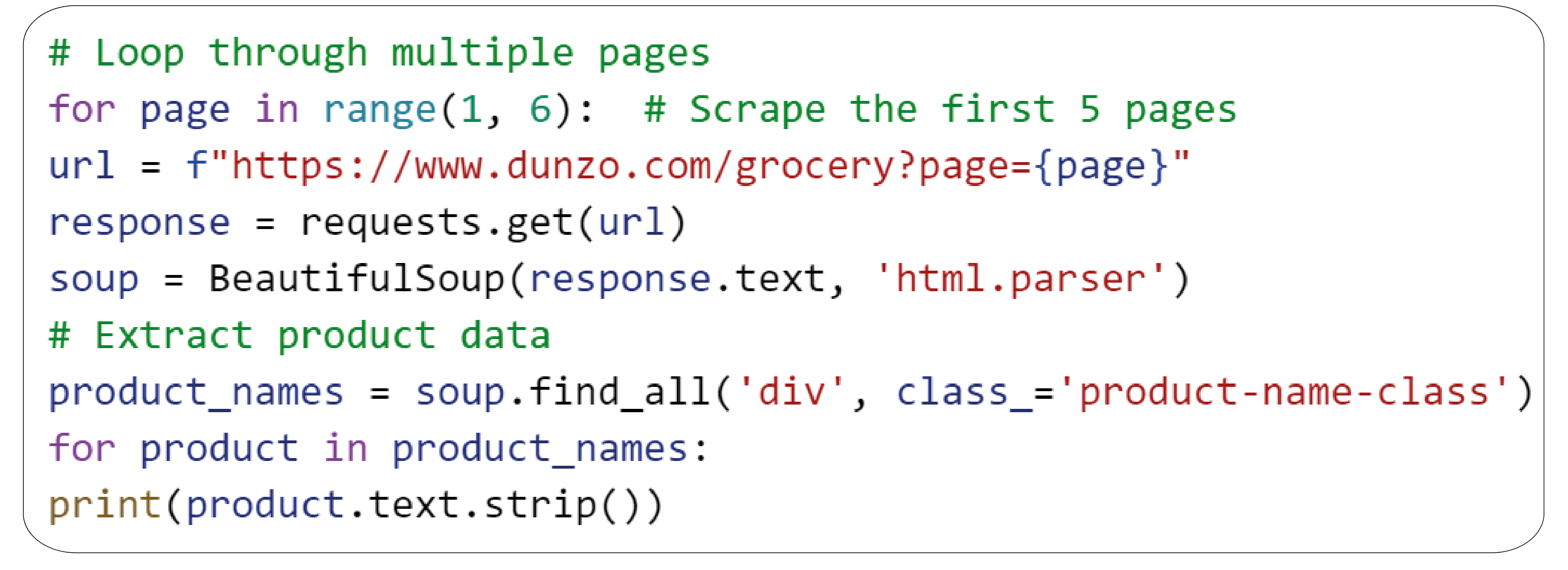

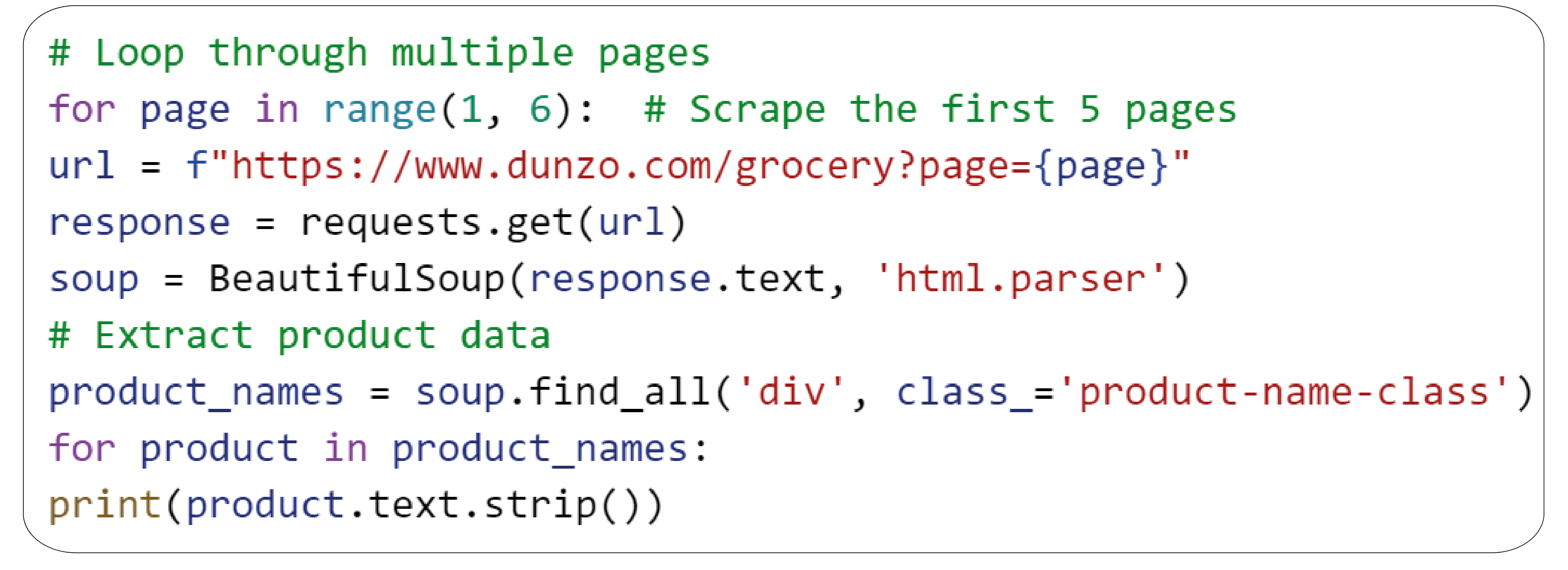
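To make the parsing step concrete, here is a minimal sketch that runs BeautifulSoup against a small made-up HTML snippet. The class names (`product-card`, `product-name`, `product-price`, `product-stock`) and the sample products are invented for illustration; the real page will use whatever tags and classes you found while inspecting it.

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration only; real class names will differ.
SAMPLE_HTML = """
<div class="product-card">
  <h3 class="product-name">Basmati Rice 1 kg</h3>
  <span class="product-price">Rs 120</span>
  <span class="product-stock">In stock</span>
</div>
<div class="product-card">
  <h3 class="product-name">Toor Dal 500 g</h3>
  <span class="product-price">Rs 85</span>
</div>
"""


def _text_or_none(parent, selector):
    """Return the stripped text of the first match, or None if it is absent."""
    node = parent.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_products(html):
    """Extract name, price, and availability from each product card."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.product-card"):
        products.append({
            "name": _text_or_none(card, ".product-name"),
            "price": _text_or_none(card, ".product-price"),
            "availability": _text_or_none(card, ".product-stock"),
        })
    return products
```

Returning `None` for a missing field, instead of raising, keeps one incomplete product card from aborting the whole run.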

Step 5: Handle Pagination

Many websites, including Dunzo, display products across multiple pages. To handle pagination, you'll need to loop through the page numbers and scrape data from each page.

Here's an example:
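The sketch below shows one way to write such a loop. It assumes the listing exposes pages through a `?page=N` query parameter and that an empty page marks the end of the results; both are assumptions to verify against the real site. The `fetch_html` and `parse_products` arguments are caller-supplied functions (for example, a requests-based fetcher and a BeautifulSoup-based parser).

```python
import time


def scrape_all_pages(fetch_html, parse_products, base_url,
                     max_pages=50, delay_seconds=1.0):
    """Walk ?page=1, ?page=2, ... until a page yields no products."""
    all_products = []
    for page in range(1, max_pages + 1):
        # Hypothetical URL scheme; adjust to the pagination the site really uses.
        html = fetch_html(f"{base_url}?page={page}")
        products = parse_products(html)
        if not products:
            break  # an empty page is treated as the end of the listing
        all_products.extend(products)
        time.sleep(delay_seconds)  # pause between requests to limit server load
    return all_products
```

The `max_pages` cap is a safety net so the loop cannot run indefinitely if the site keeps returning results for out-of-range page numbers.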

Step 6: Extract Dynamic Content with Selenium (if required)

If the content is loaded dynamically via JavaScript, you must render the page using Selenium. Selenium allows you to automate browser actions like clicking on pagination buttons or scrolling to load more content.

Example with Selenium:


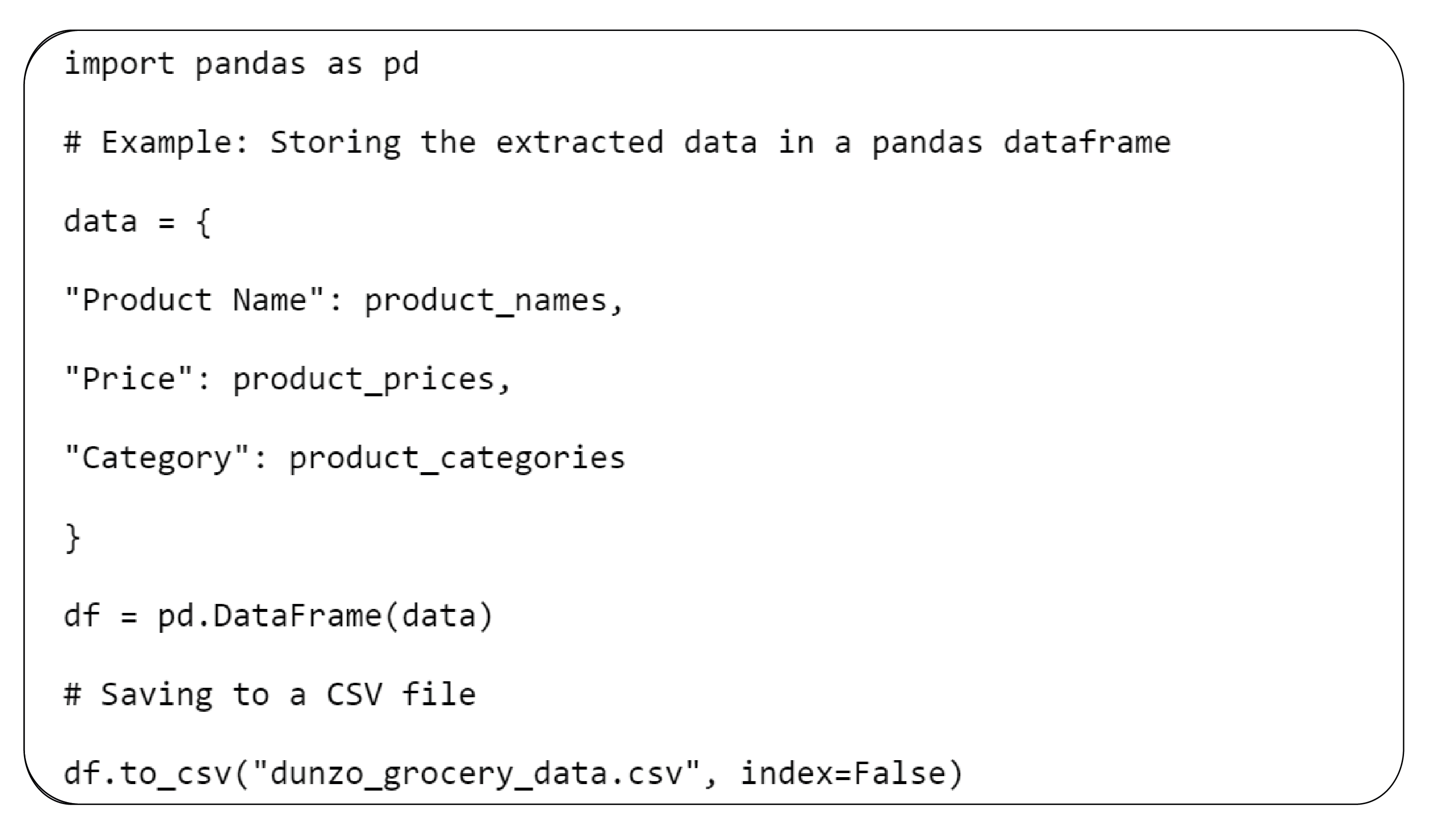

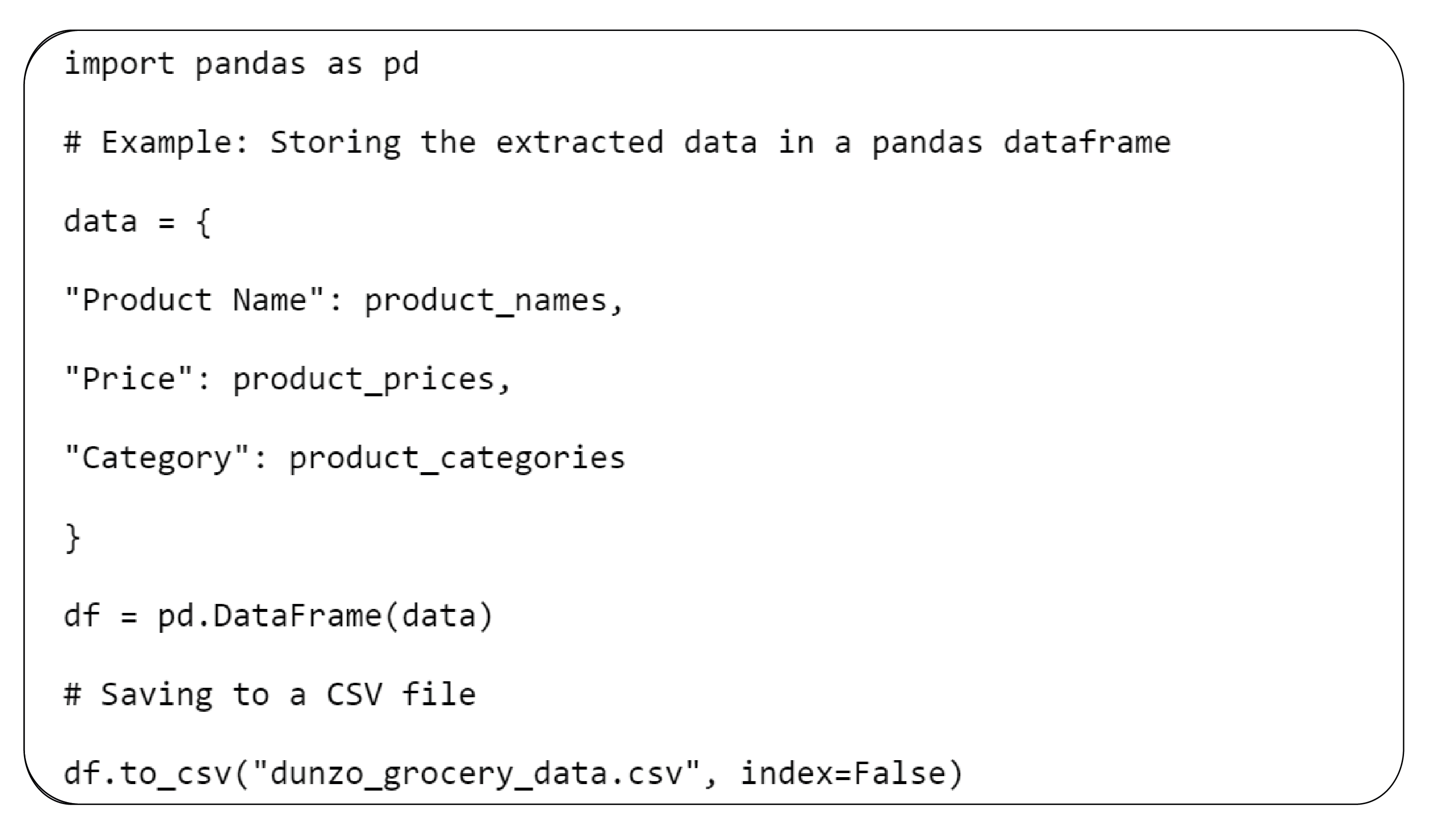
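As a hedged sketch of the Selenium approach, the code below scrolls a page until its height stops growing and then returns the rendered HTML. It assumes Selenium 4 with a local Chrome installation; the function names are illustrative, and whether scrolling (rather than clicking a "load more" button) triggers new content depends on how the page is built.

```python
import time


def scroll_until_stable(driver, pause_seconds=2.0, max_rounds=20):
    """Scroll to the bottom repeatedly until the page height stops growing."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    for _ in range(max_rounds):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause_seconds)  # give lazy-loaded content time to appear
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new was loaded, so stop scrolling
        last_height = new_height
    return last_height


def fetch_rendered_html(url):
    """Open url in headless Chrome, scroll to load content, return the HTML."""
    # Imported here so scroll_until_stable stays usable without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless=new")  # headless mode in recent Chrome versions
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        scroll_until_stable(driver)
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even on errors
```

The HTML returned by `fetch_rendered_html` can then be passed to BeautifulSoup in the same way as a page fetched with requests.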

Step 7: Store the Data

After scraping the necessary data, you'll likely want to store it in a structured format like CSV or Excel. Pandas is an excellent tool for this.
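A minimal sketch of the storage step, assuming each scraped product is a dictionary with `name`, `price`, and `availability` keys (these field names are illustrative and should match whatever your parser produces):

```python
import pandas as pd


def save_products(products, csv_path):
    """Write a list of product dicts to a CSV file and return the DataFrame."""
    # Fixing the column list keeps the CSV layout stable between runs;
    # keys missing from a product become empty cells.
    df = pd.DataFrame(products, columns=["name", "price", "availability"])
    df.to_csv(csv_path, index=False, encoding="utf-8")
    return df
```

For Excel output, `df.to_excel("products.xlsx", index=False)` works similarly, though it needs an additional engine such as `openpyxl` installed.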

Step 8: Automate the Scraping Process

To automate the scraping process, you can schedule your script to run regularly using tools like cron (Linux) or Task Scheduler (Windows). This is particularly useful if you need to collect fresh data frequently.
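When a script runs on a schedule, it helps to give each run its own output file so earlier data is not overwritten. The sketch below builds a timestamped CSV path and runs one scrape-and-save cycle; the `data` folder, the file name pattern, and the `scrape` and `save` callables are all assumptions for illustration. On Linux, a crontab entry such as `0 6 * * * python3 /path/to/scrape_dunzo.py` (a hypothetical path and schedule) would run the script daily at 06:00.

```python
from datetime import datetime
from pathlib import Path

OUTPUT_DIR = Path("data")  # hypothetical output folder


def output_path_for(run_time, output_dir=OUTPUT_DIR):
    """Build a timestamped CSV path so scheduled runs never overwrite each other."""
    return output_dir / f"dunzo_products_{run_time:%Y%m%d_%H%M%S}.csv"


def run_once(scrape, save, now=None):
    """Run one scrape-and-save cycle; scrape and save are supplied by the caller."""
    run_time = now or datetime.now()
    path = output_path_for(run_time)
    path.parent.mkdir(parents=True, exist_ok=True)  # create the folder if needed
    save(scrape(), path)
    return path
```

Here `scrape` is any function that returns the list of products and `save` is any function that writes them to the given path.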

Legal and Ethical Considerations

Before scraping any website, including the Dunzo app, it's crucial to consider the legal and ethical implications:

- Terms of Service: Review the website's terms of service to ensure that scraping is allowed. Some sites explicitly prohibit scraping.

- Respectful Scraping: Avoid overloading the website's servers by making too many requests quickly. Use techniques like rate-limiting and respecting the robots.txt file.

- Data Privacy: Ensure that sensitive customer data is not collected or misused while scraping.
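The robots.txt and rate-limiting points above can be checked in code with Python's standard `urllib.robotparser` module. The sketch below parses a made-up robots.txt; the rules, the user agent name, and the helper names are illustrative and do not reflect Dunzo's actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "grocery-research-bot"  # hypothetical user agent name

# Made-up rules for illustration; fetch the real file from the site's /robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_robots_parser(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent=USER_AGENT):
    """Return True if robots.txt permits this user agent to fetch url."""
    return parser.can_fetch(user_agent, url)


def polite_delay(parser, default_seconds=1.0, user_agent=USER_AGENT):
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_seconds
```

A scraper can call `is_allowed` before each request and sleep for `polite_delay` seconds between requests. Passing robots.txt is not the same as having permission: the site's terms of service still apply.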

Conclusion

Scraping grocery details from Dunzo offers valuable insights for businesses and individuals, enabling them to optimize strategies and make informed decisions. By following this step-by-step guide, you can extract Dunzo grocery data to track product pricing, availability, and trends. Tools like Python, BeautifulSoup, Scrapy, and Selenium let you automate the extraction, saving time and effort while collecting large volumes of data. The resulting dataset can inform business strategy, whether you're analyzing competitive pricing, monitoring stock levels, or identifying market trends. For those in the quick commerce sector, it also provides timely insight into product offerings and customer demand, allowing businesses to adjust their operations accordingly. Always ensure compliance with legal guidelines to maintain ethical standards and avoid potential issues while scraping.

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.
