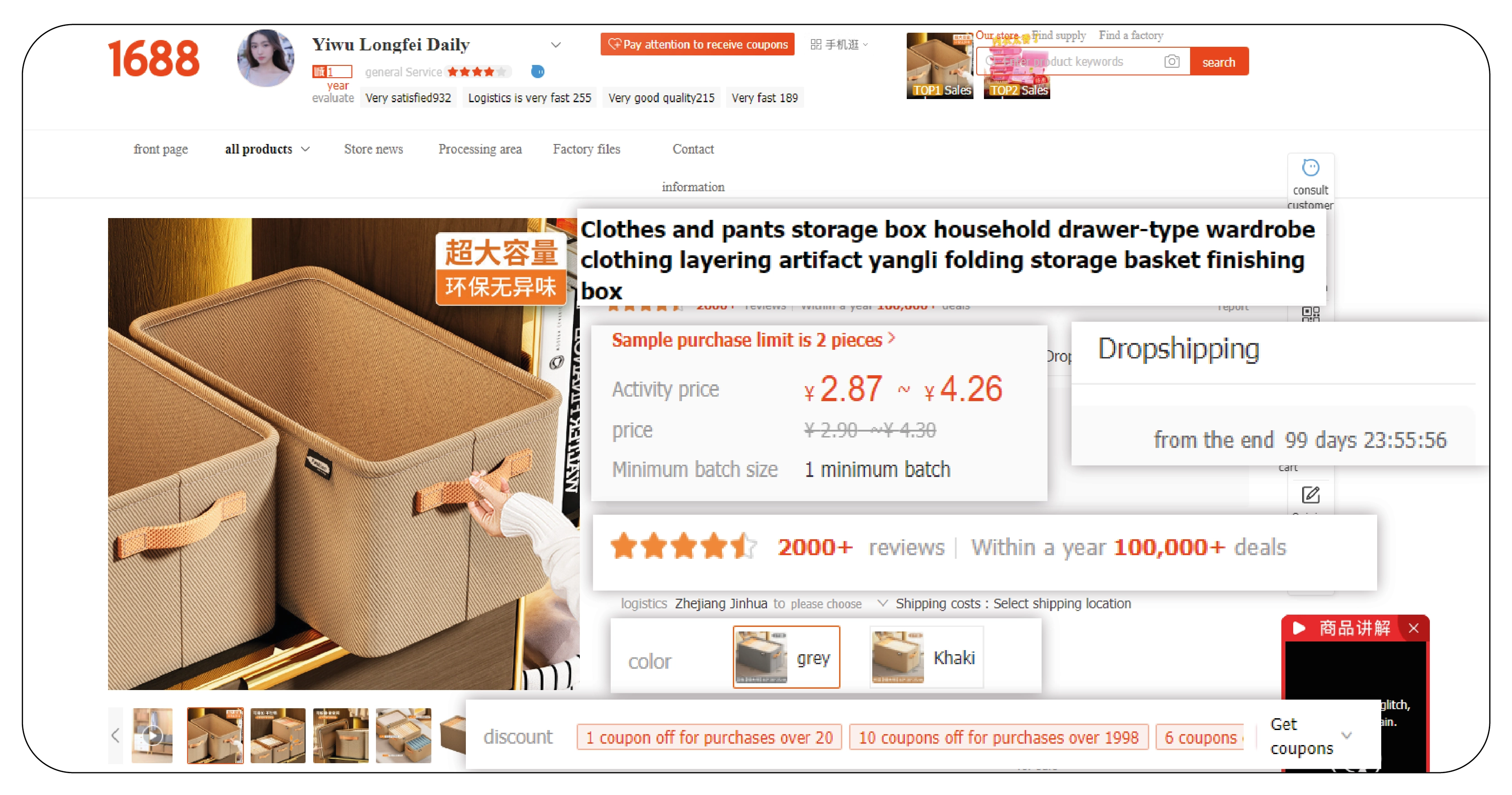

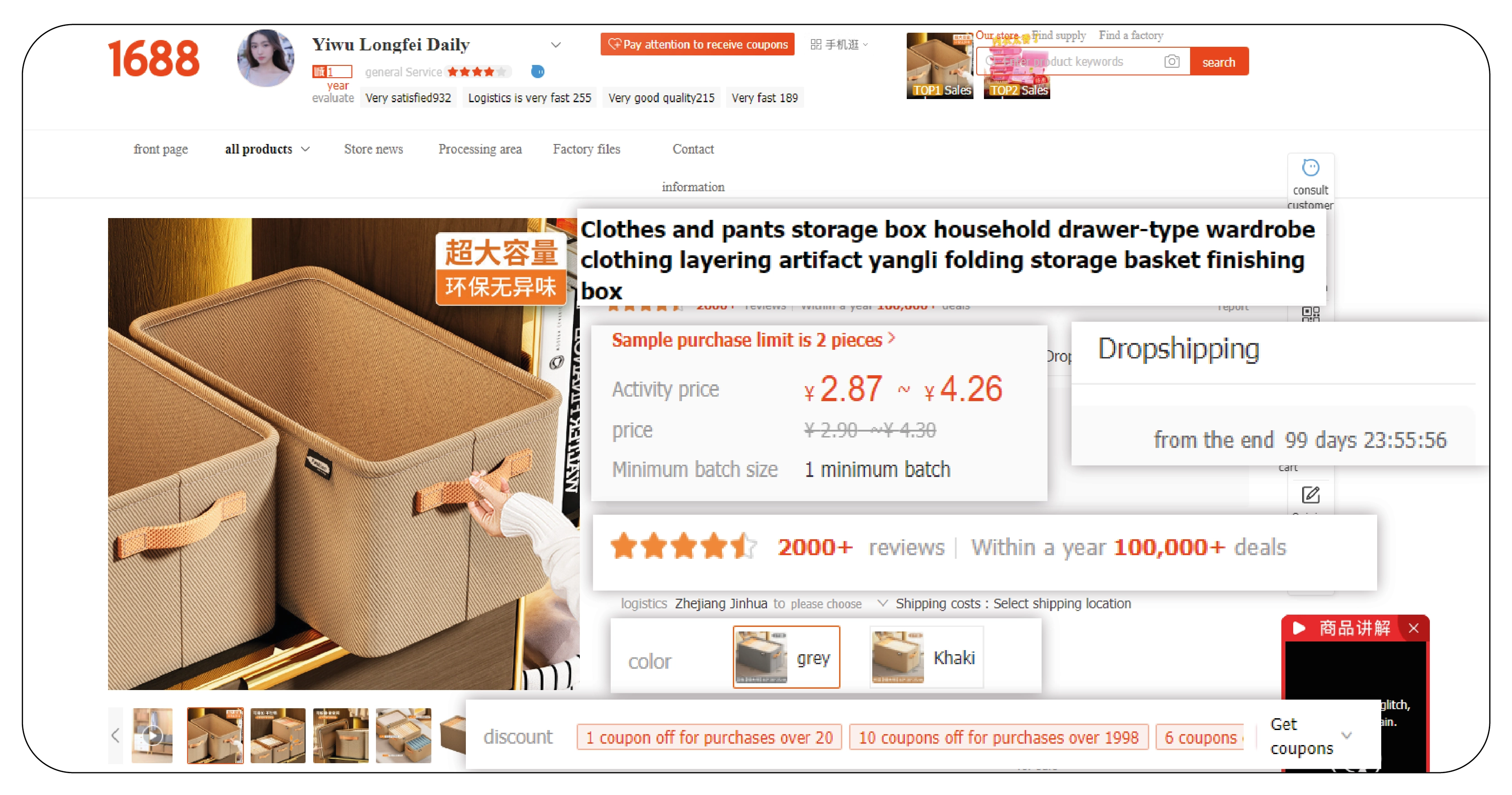

In the fast-paced world of e-commerce, efficiency matters, particularly when sourcing products from platforms like 1688. As online trade grows, businesses look for streamlined purchasing processes that conserve time and resources. This is where 1688 web scraping and auto checkout tools come in: they automate essential sourcing tasks. By scraping 1688, businesses can collect relevant data quickly, enabling informed decisions and agile responses to market changes. Auto checkout, in turn, expedites the final stage of procurement by eliminating manual steps and reducing transaction time. Together, the two save time and improve operational efficiency, giving businesses a competitive edge in sourcing.

Autofilling Cart: One of the critical features of a 1688 web scraping and auto checkout tool is its ability to efficiently autofill the cart based on keywords or specific product IDs. This flexibility empowers users to source products swiftly according to their preferences and requirements. Whether searching for products based on specific keywords or targeting particular items using product IDs, the tool ensures a seamless and hassle-free experience. Businesses can expedite the sourcing process and focus on other essential aspects of their operations by automating the cart-filling process.

Checkout Automation: Once the desired products are successfully added to the cart, the next crucial step is the checkout process. Manual checkout can be time-consuming and prone to errors, leading to delays and inefficiencies. With auto checkout functionality, the 1688 web scraping tool automates the entire process, saving invaluable time and reducing operational steps. By automatically completing the checkout process, businesses can eliminate the need for manual intervention, ensuring a smooth and efficient transaction experience.
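To make the two features above more concrete, here is a minimal Python sketch of cart filling by keyword or product ID, followed by a pre-checkout summary. It works on already-scraped records and does not touch the 1688 website. The `Product` fields, the sample catalog, the ID format, and the budget check are all assumptions made for illustration, not part of any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    product_id: str    # hypothetical ID format
    title: str
    unit_price: float  # assumed to be in CNY and already scraped


def select_for_cart(catalog, keywords=(), product_ids=()):
    """Return products whose ID is listed or whose title contains any keyword."""
    wanted_ids = set(product_ids)
    lowered = [k.lower() for k in keywords]
    selected = []
    for product in catalog:
        title = product.title.lower()
        if product.product_id in wanted_ids or any(k in title for k in lowered):
            selected.append(product)
    return selected


def build_order(selected, quantities, budget):
    """Compute the order total and flag whether it stays within the budget."""
    # Quantity defaults to 1 when no quantity is given for a product.
    lines = [(p.product_id, quantities.get(p.product_id, 1), p.unit_price) for p in selected]
    total = sum(qty * price for _, qty, price in lines)
    return {"lines": lines, "total": round(total, 2), "within_budget": total <= budget}


# Sample data, invented for this example.
catalog = [
    Product("1001", "Stainless steel water bottle 500ml", 12.5),
    Product("1002", "Bamboo cutting board", 18.0),
    Product("1003", "Insulated water bottle 750ml", 15.0),
]

cart = select_for_cart(catalog, keywords=["water bottle"], product_ids=["1002"])
order = build_order(cart, {"1001": 10, "1003": 4}, budget=250.0)
print(order["total"], order["within_budget"])
```

A summary step like `build_order` gives a natural point for a person to review the order before any automated checkout is attempted.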

Types of Data Collected from 1688 Web Scraping

Web scraping from a platform like 1688 can yield various types of data depending on the project's specific requirements. Here are some common types of data that might be collected:

Product Information: This includes details like product name, description, images, price, dimensions, weight, materials, and specifications.

Supplier Information: Information about the suppliers, such as company name, location, contact details, rating, and reviews.

Prices and Discounts: Data on product pricing, any discounts or promotions being offered, and pricing trends over time.

Inventory and Availability: Information on product availability, stock levels, and any out-of-stock items.

Shipping Information: Details regarding shipping options, costs, estimated delivery times, and shipping policies.

Reviews and Ratings: Customer reviews, ratings, and feedback on products and suppliers.

Product Categories and Attributes: Categorization of products into different categories and subcategories, as well as any attributes associated with each product.

Trending and Popular Products: Trending or popular products identified from sales volume, search frequency, and user engagement.

Market Analysis Data: Insights into market trends, competitor analysis, pricing strategies, and demand patterns.

Historical Data: Historical data on product prices, inventory levels, and other relevant metrics for analytical purposes.

Images and Multimedia: Downloading images and multimedia content associated with products for visual analysis or application use.

Product Variations: Information on different variations of products, such as sizes, colors, and configurations.

Supplier Performance Metrics: Metrics related to supplier performance, such as response time to inquiries, fulfillment rates, and overall reliability.

Keywords and Search Terms: Keywords and search terms that customers use to find products, which can be helpful for SEO or marketing.

Custom Data: Any other specific data points relevant to the project requirements, such as unique product features, user-generated content, or additional metadata.
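Several of the data types listed above could be held in one structured record. The sketch below shows one possible shape using Python dataclasses. The field names, defaults, and sample values are assumptions chosen for illustration and do not reflect any official 1688 schema.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class Supplier:
    company_name: str
    location: str
    rating: Optional[float] = None  # None when no rating was found


@dataclass
class ProductRecord:
    product_id: str
    name: str
    price: float
    currency: str = "CNY"           # assumed default currency
    in_stock: bool = True
    variations: list = field(default_factory=list)
    supplier: Optional[Supplier] = None


# Sample values, invented for this example.
record = ProductRecord(
    product_id="1001",
    name="Stainless steel water bottle",
    price=12.5,
    variations=["500ml", "750ml"],
    supplier=Supplier("Example Trading Co.", "Yiwu", rating=4.7),
)

# asdict() converts nested dataclasses too, so the record serializes in one call.
as_json = json.dumps(asdict(record), ensure_ascii=False, sort_keys=True)
print(as_json)
```

Keeping supplier details in a nested record makes it easy to store them once and reuse them across many products.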

It is essential that any data collected through retail data scraping services complies with legal and ethical standards, including the website's terms of service, privacy policies, and applicable data protection regulations.

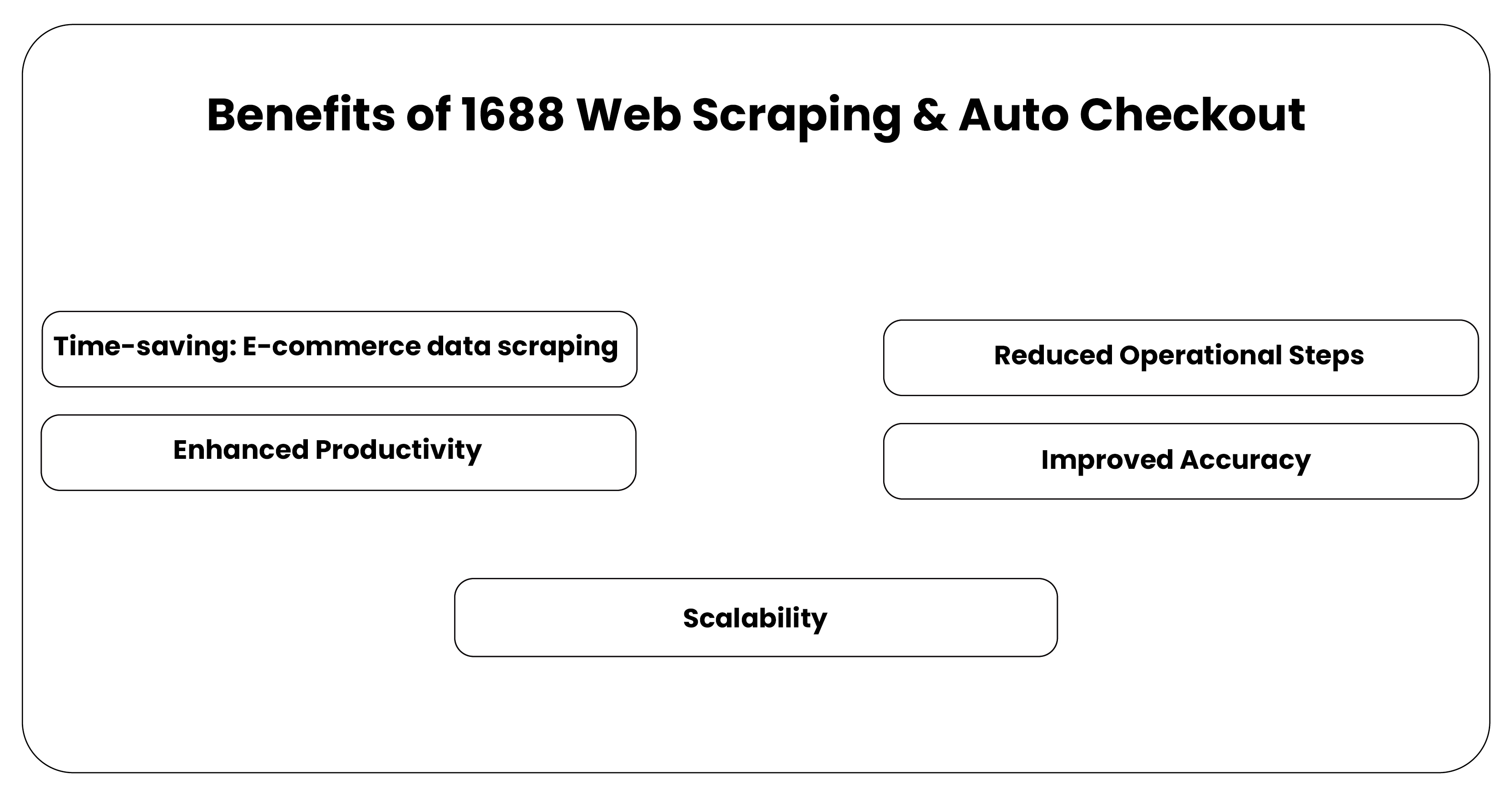

Benefits of 1688 Web Scraping & Auto Checkout

Unlock efficiency and competitiveness in sourcing with 1688 web scraping and auto checkout. Seamlessly automate tasks, save time, and streamline procurement processes for enhanced productivity.

Time-saving: E-commerce data scraping automates cart filling and checkout tasks, saving significant time that manual processes would otherwise consume. This efficiency allows users to redirect their focus towards core business activities, enhancing overall productivity. By swiftly completing repetitive tasks, businesses can use the saved time for strategic endeavors, driving growth and competitiveness in the fast-paced e-commerce landscape.

Reduced Operational Steps: Manual sourcing and purchasing processes involve multiple operational steps, heightening the likelihood of errors and delays. With the integration of 1688 web scraping and auto checkout functionalities, the number of operational steps diminishes significantly. This reduction minimizes the risk of errors, streamlining the entire procurement process and ensuring smoother transactions. By automating these steps with a 1688 data scraper, businesses can mitigate operational complexities and enhance their sourcing endeavors.

Enhanced Productivity: E-commerce data scraping services liberate businesses from laborious, repetitive tasks, allowing them to allocate resources strategically. Heightened productivity empowers businesses to accomplish more in less time, bolstering overall performance and competitiveness. By automating mundane tasks, such as data entry and transaction processing, organizations can leverage their workforce for more value-added activities, driving innovation and growth in e-commerce.

Improved Accuracy: Manual data entry and checkout processes are susceptible to errors, jeopardizing order accuracy and reliability. Implementing auto checkout functionality with an e-commerce data scraper significantly reduces this risk. Automation helps maintain accuracy and reliability throughout the purchasing process, safeguarding against inaccuracies and discrepancies. This heightened accuracy instills confidence in both buyers and suppliers, fostering trust and credibility in e-commerce transactions.

Scalability: As businesses expand and their sourcing requirements evolve, scalability becomes imperative. E-commerce data scraping and auto checkout tools are designed to scale with the business, accommodating growing volumes of sourcing activities without sacrificing performance or efficiency. This scalability ensures seamless operations, even as businesses experience rapid growth and increasing demand. By leveraging scalable solutions, organizations can sustain their momentum and capitalize on emerging opportunities in the dynamic e-commerce landscape.

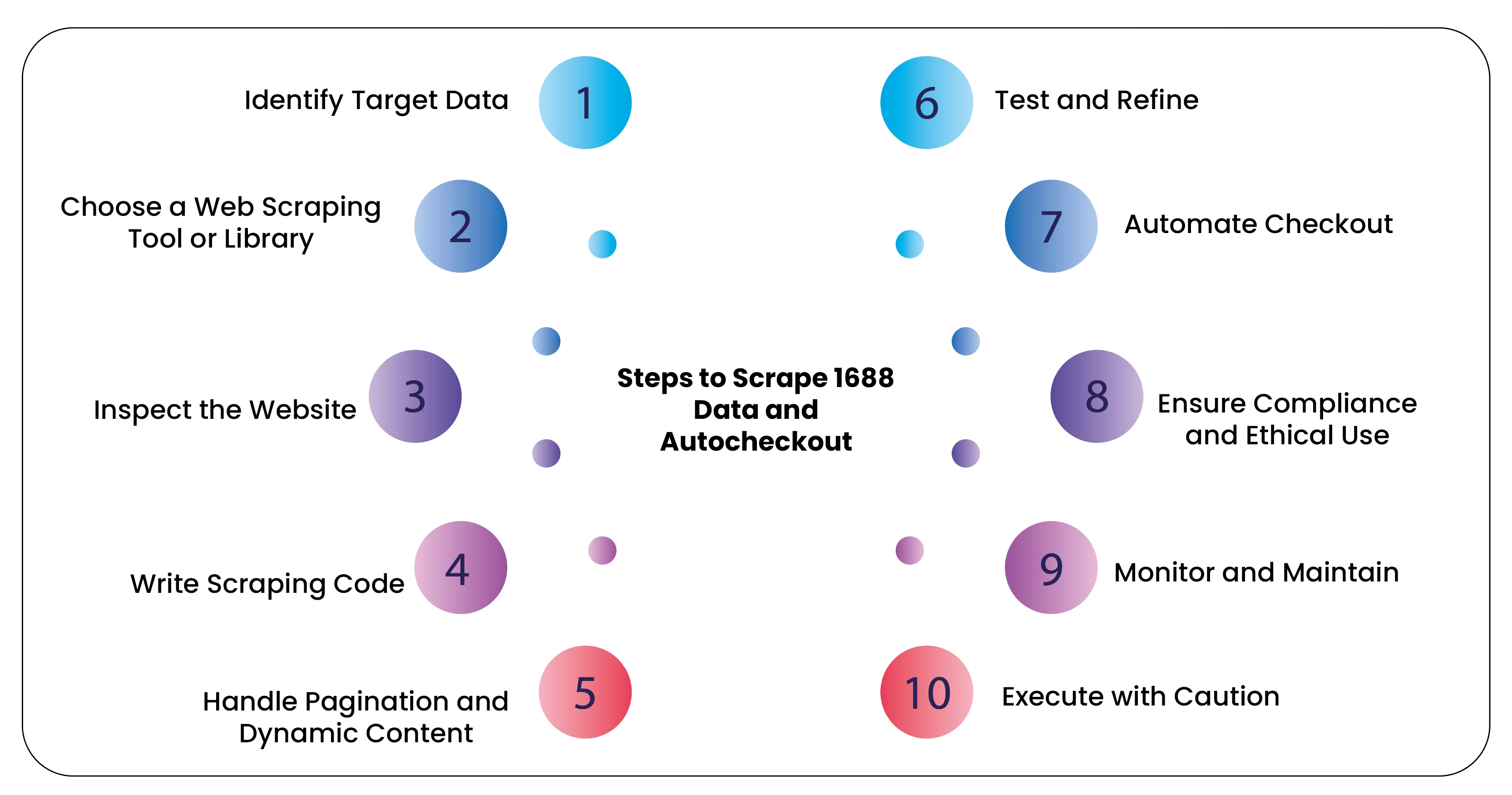

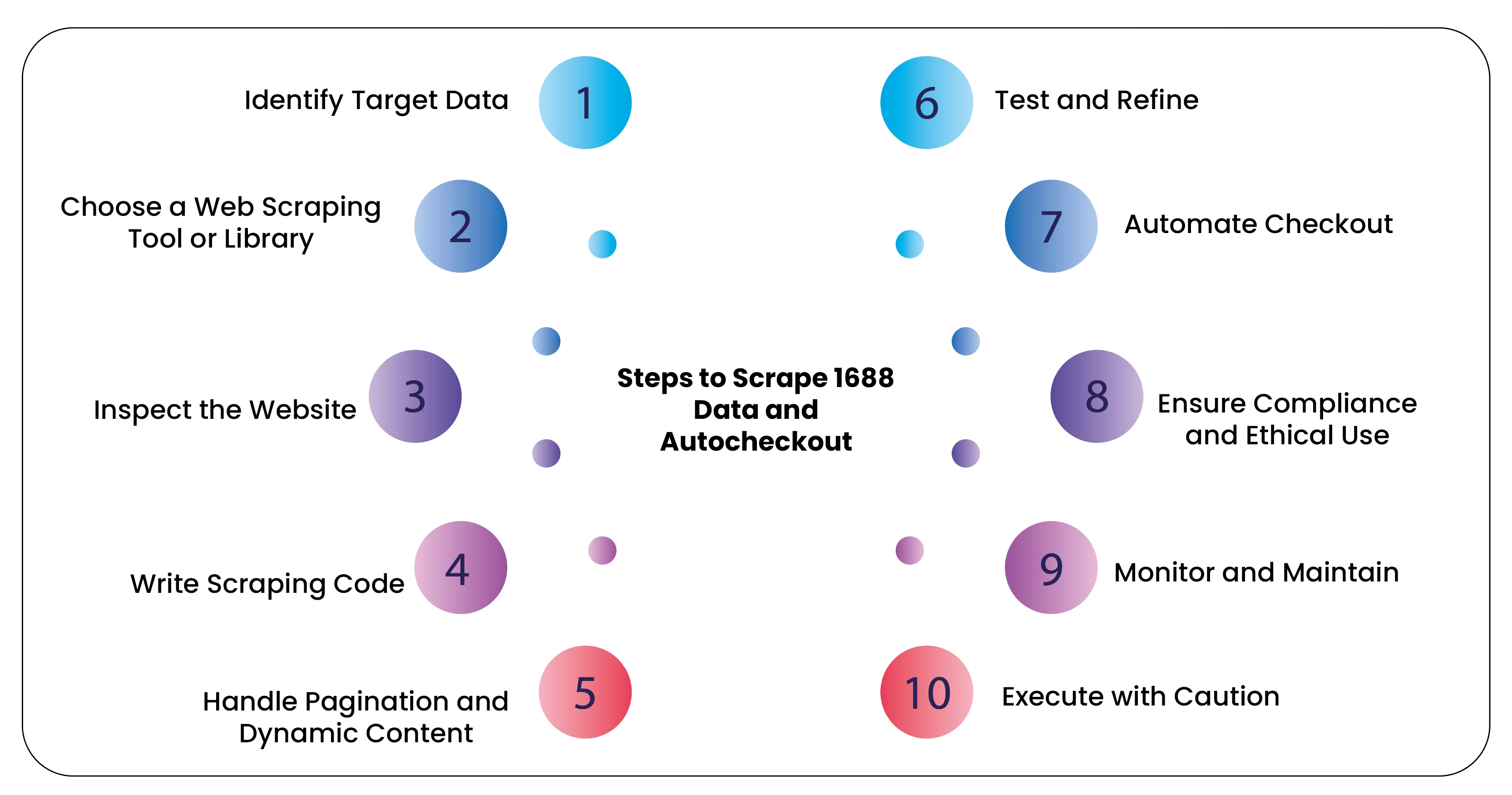

Steps to Scrape 1688 Data and Auto Checkout

Scraping data from a website like 1688 and automating the checkout process involves several steps. However, it's important to note that web scraping and automated checkout may be subject to legal and ethical considerations, including the terms of service of the website and potential violations of security or privacy policies. Be sure to familiarize yourself with these considerations before proceeding. Here's a general outline of the steps involved:

Identify Target Data: Determine the specific data you want to scrape from the 1688 website, such as product information, prices, availability, etc.

Choose a Web Scraping Tool or Library: Select a web scraping tool or library capable of extracting data from web pages. Popular options include BeautifulSoup and Scrapy (for Python), Puppeteer (for JavaScript), or Selenium (which supports several languages).

Inspect the Website: Use your web browser's developer tools to inspect the structure of the 1688 website and identify the HTML elements containing the data you want to scrape. These might include product listings, prices, add-to-cart buttons, etc.

Write Scraping Code: Develop a script using your chosen tool or library to programmatically navigate the 1688 website, extract the desired data from the HTML, and store it in a structured format such as a CSV file or database.

Handle Pagination and Dynamic Content: If the data you want to scrape is spread across multiple pages or includes dynamically loaded content, you'll need to implement logic in your scraping script to handle pagination and dynamically loaded content.

Test and Refine: Test your scraping script on a small subset of data to ensure it works as expected. Make any necessary adjustments or refinements to handle edge cases or unexpected behavior.

Automate Checkout: To automate the checkout process, you'll need to develop additional automation scripts using tools like Selenium or Puppeteer to programmatically interact with the checkout flow on the 1688 website. This involves adding items to the cart, filling out shipping and payment information, and completing the checkout process.

Ensure Compliance and Ethical Use: Before deploying your scraping and automation scripts at scale, ensure they comply with the 1688 website's terms of service and any relevant legal and ethical considerations. Avoid excessive or disruptive scraping behavior that could impact the website's performance or violate its policies.

Monitor and Maintain: Regularly monitor your scraping and automation scripts to ensure they function correctly, as the 1688 website may undergo updates or changes to its structure. Make any necessary adjustments to maintain compatibility and reliability.

Execute with Caution: Finally, if you decide to deploy your scraping and automation scripts in a production environment, do so with caution and respect for the website's resources and policies. Be prepared to adjust your approach to mitigate any negative impacts on the website or its users.
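The extraction, pagination, and storage steps above can be sketched with Python's standard library alone. The example parses two in-memory HTML pages instead of making network requests. The markup, class names, and the `fetch_page` callable are hypothetical: real 1688 pages are structured differently and change over time, so selectors would need to come from inspecting the live site.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical listing pages, invented for this example.
PAGES = {
    1: '<div class="item" data-id="1001"><span class="title">Water bottle</span>'
       '<span class="price">12.50</span></div><a class="next" href="?page=2">next</a>',
    2: '<div class="item" data-id="1002"><span class="title">Cutting board</span>'
       '<span class="price">18.00</span></div>',
}


class ListingParser(HTMLParser):
    """Collect id, title, and price per item, and note whether a next link exists."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self.has_next = False
        self._field = None  # name of the field currently being read, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        css = attrs.get("class", "")
        if tag == "div" and css == "item":
            self.rows.append({"id": attrs.get("data-id", ""), "title": "", "price": ""})
        elif tag == "span" and css in ("title", "price") and self.rows:
            self._field = css
        elif tag == "a" and css == "next":
            self.has_next = True

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] += data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape_all(fetch_page, max_pages=50):
    """Follow pagination until no next link is found or max_pages is reached."""
    rows, page = [], 1
    while page <= max_pages:
        parser = ListingParser()
        parser.feed(fetch_page(page))
        rows.extend(parser.rows)
        if not parser.has_next:
            break
        page += 1
    return rows


rows = scrape_all(lambda n: PAGES[n])

# Store the result in CSV form (written to memory here; a file works the same way).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["id", "title", "price"])
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

Passing the page fetcher in as a function keeps the parsing logic testable on saved pages, which also suits the "Test and Refine" step. The `max_pages` cap is a simple guard against endless pagination loops.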

Remember that automated checkout may be particularly sensitive and potentially violate terms of service or policies. Always ensure your actions are legal, ethical, and respectful of the website's guidelines.
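One way to put this caution into practice is to check a site's robots.txt rules and space out requests. The sketch below uses Python's standard `urllib.robotparser` with a made-up robots.txt. The rules, URLs, and the `example-bot` user agent are assumptions for illustration and do not describe 1688's actual policy. Passing a robots.txt check also does not replace reading the terms of service.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; not the actual robots.txt of any site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def allowed(url, user_agent="example-bot"):
    """Return True if the parsed robots rules permit fetching this URL."""
    return robots.can_fetch(user_agent, url)


# Use the declared crawl delay when present, otherwise fall back to 1 second.
delay = robots.crawl_delay("example-bot") or 1
throttle = Throttle(delay)

for url in ["https://example.com/offer/1001.html", "https://example.com/checkout/confirm"]:
    if allowed(url):
        throttle.wait()
        print("would fetch", url)
    else:
        print("skipping disallowed", url)
```

The clock and sleep functions are injectable so the throttle can be tested without real waiting.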

Conclusion: 1688 web scraping coupled with auto checkout offers a potent means for efficiently acquiring desired products from the platform. However, it necessitates meticulous attention to legal and ethical considerations, respecting 1688's terms of service and ensuring compliance with relevant regulations. By leveraging appropriate scraping tools and crafting robust automation scripts, users can extract valuable data and move through the checkout process with fewer manual steps. Continuous monitoring, error handling, and periodic updates are vital for keeping scripts working and compliant with the platform's guidelines. Ultimately, responsible use of these techniques enables streamlined procurement workflows while upholding integrity and respect for the platform's policies and users' rights.

At Product Data Scrape, ethical principles are central to our operations. Whether it's Competitor Price Monitoring or Mobile App Data Scraping, transparency and integrity define our approach. With offices spanning multiple locations, we offer customized solutions, striving to surpass client expectations and foster success in data analytics.
