Walmart is a global retail corporation known for its chain of hypermarkets, discount department stores, and grocery stores, serving customers worldwide. Founded by Sam Walton in 1962, it is headquartered in Bentonville, Arkansas, United States. Walmart is one of the largest companies in the world by revenue and employs millions of associates globally. Scraping product data from e-commerce sites such as Walmart can give you insight into product offerings and pricing details.

The company offers various products, including groceries, household goods, electronics, clothing, furniture, and more. It operates both physical stores and an e-commerce platform, allowing customers to shop in-store or online.

This tutorial will guide you through automating Walmart store coupon data extraction with LXML and Python. Using web scraping techniques, you will learn how to extract valuable information about the coupons Walmart offers for a particular store location.

We will cover scraping the Walmart.com website using Python and libraries such as LXML and Requests. With these tools, you will be able to navigate the webpage's HTML structure, locate coupon information, and extract the details you need.

By following the steps outlined in this tutorial, you can automate the process of scraping Walmart coupon data, which can be helpful for various purposes, such as price comparison, savings analysis, or simply staying informed about ongoing promotions at your local Walmart store.

Whether you are a bargain hunter, a coupon enthusiast, or someone looking to leverage data for informed shopping decisions, this tutorial will provide the knowledge and skills to scrape coupon details from Walmart.com for a specific Walmart store.

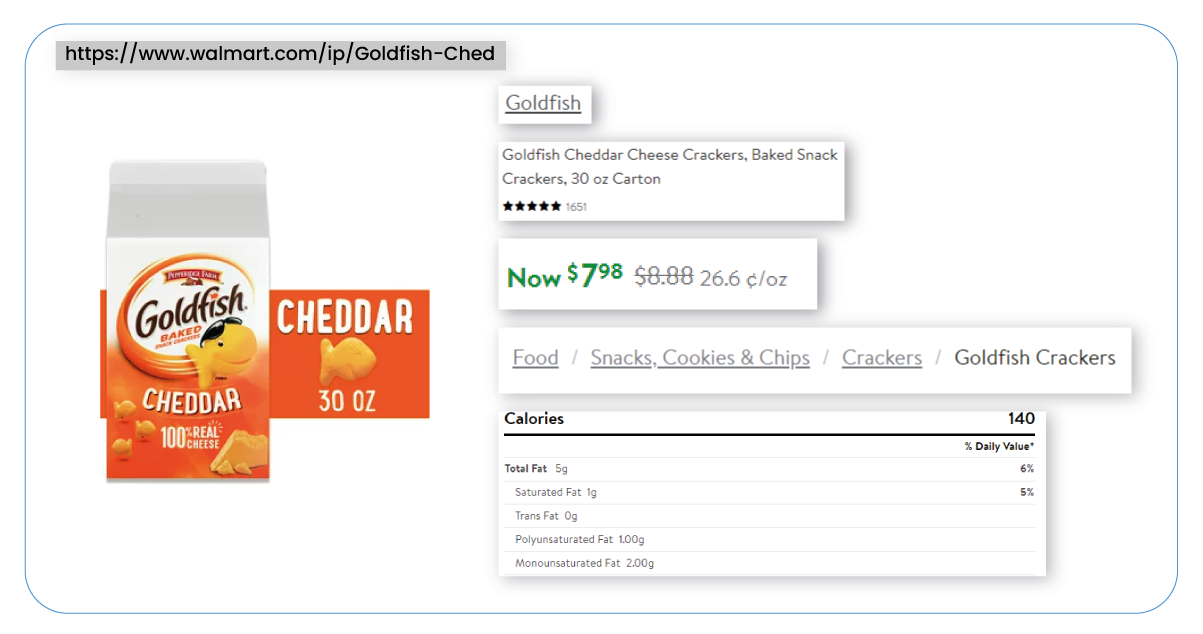

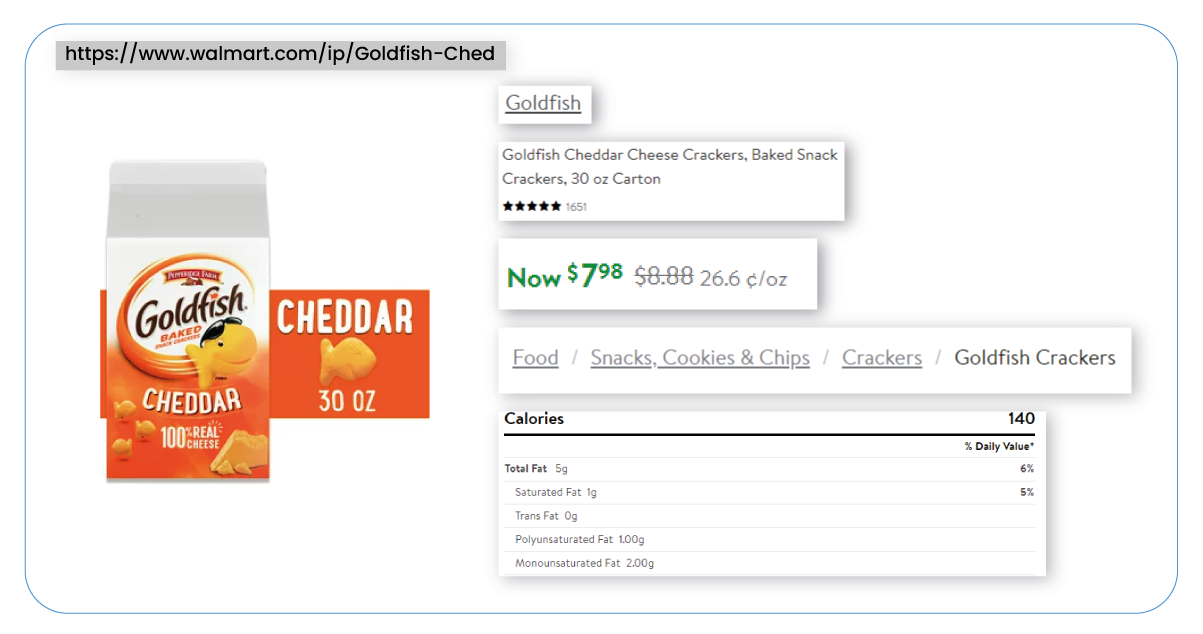

List of Data Fields

- Discounted Price

- Brand

- Category

- Product Description

- Activated Date

- Expired Date

- URL

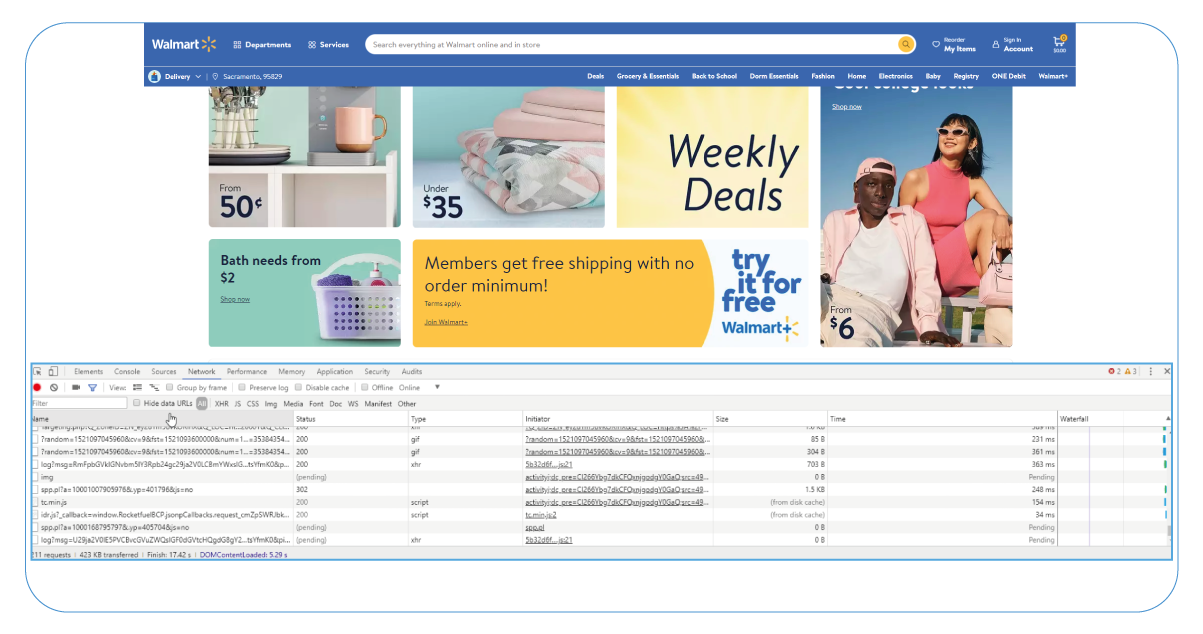

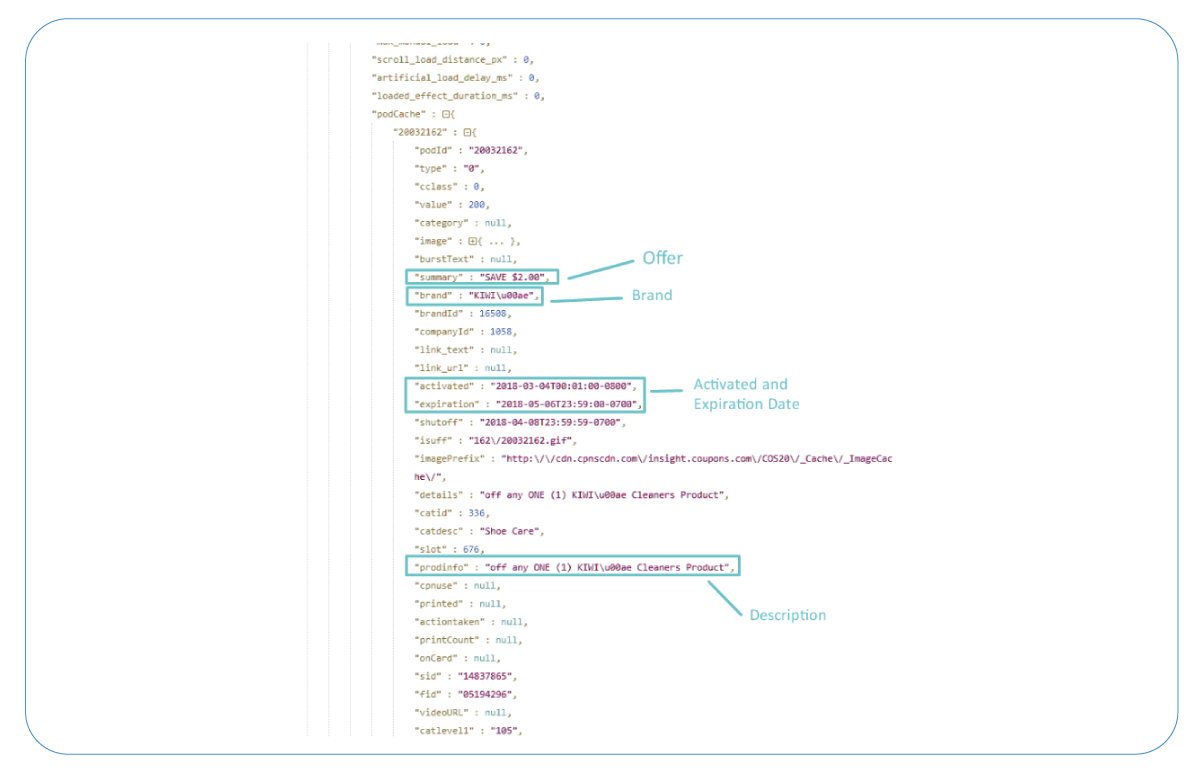

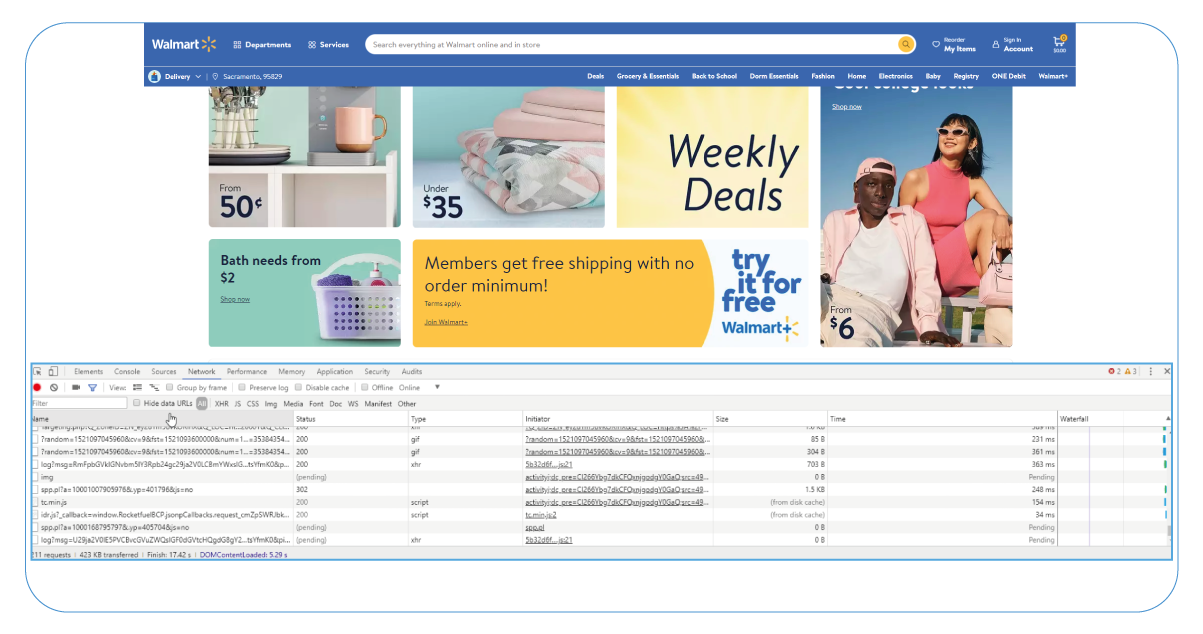

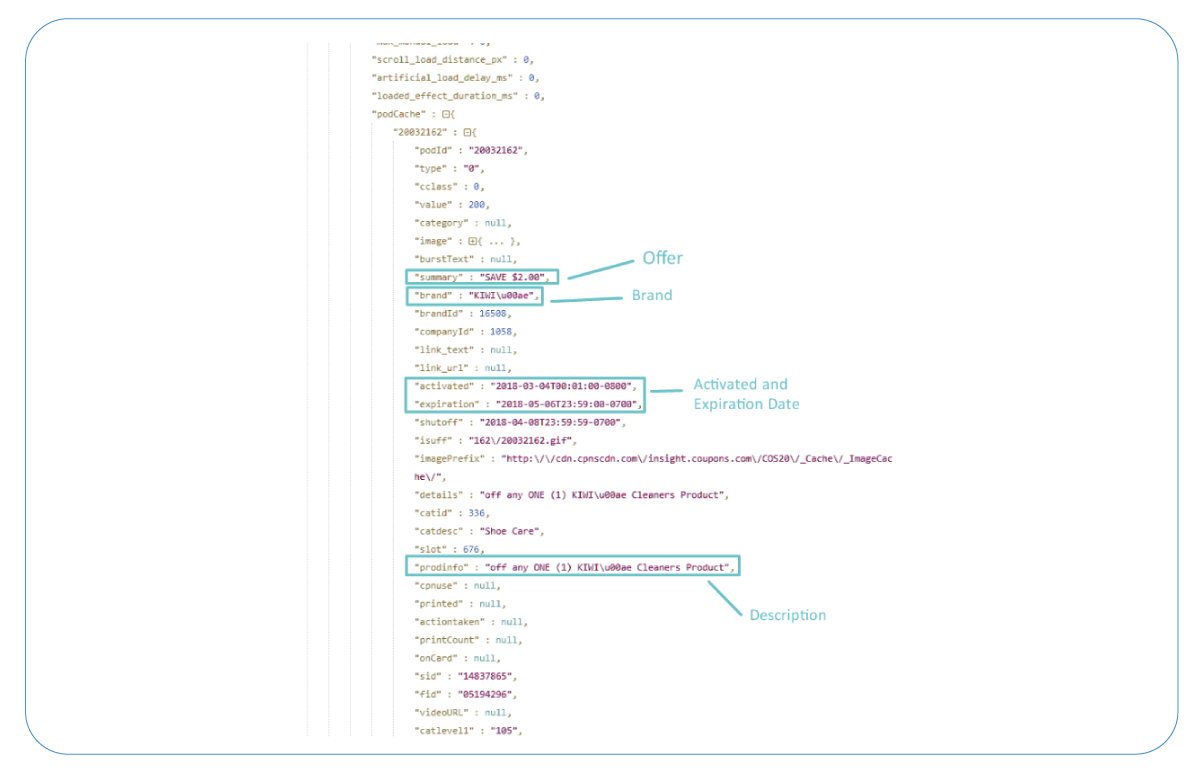

Below is a screenshot of the data we will extract:

To keep the scope of this tutorial simple, we will focus on extracting the annotated coupon details shown in the screenshot. However, you can extend the scraping process to include additional filters, such as specific brands or customized search criteria.

By implementing more advanced techniques, you can enhance the scraper to accommodate various filters and refine your data extraction based on specific requirements. This flexibility allows you to tailor the scraping process to your preferences and extract Walmart coupon information based on brand, category, discount value, or any other desired criteria.
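As a rough illustration of such filtering, the sketch below narrows a list of already-extracted coupons by brand, category, and minimum discount. The dictionary keys (`brand`, `category`, `discount`) and the sample records are hypothetical; the real field names depend on the data the coupon endpoint returns.

```python
def filter_coupons(coupons, brand=None, category=None, min_discount=None):
    """Return the coupons that match every filter that was supplied.

    Each coupon is assumed to be a dict with hypothetical keys
    "brand", "category", and "discount" (a float amount).
    """
    matches = []
    for coupon in coupons:
        # Text filters are compared case-insensitively.
        if brand is not None and coupon.get("brand", "").lower() != brand.lower():
            continue
        if category is not None and coupon.get("category", "").lower() != category.lower():
            continue
        if min_discount is not None and coupon.get("discount", 0.0) < min_discount:
            continue
        matches.append(coupon)
    return matches


# Made-up records, for illustration only.
sample = [
    {"brand": "Acme", "category": "Snacks", "discount": 1.50},
    {"brand": "Acme", "category": "Beverages", "discount": 0.50},
    {"brand": "Globex", "category": "Snacks", "discount": 2.00},
]

acme_only = filter_coupons(sample, brand="acme")
big_snack_deals = filter_coupons(sample, category="Snacks", min_discount=1.75)
```

Filters left as `None` are skipped, so the same function covers a single criterion or any combination of them.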

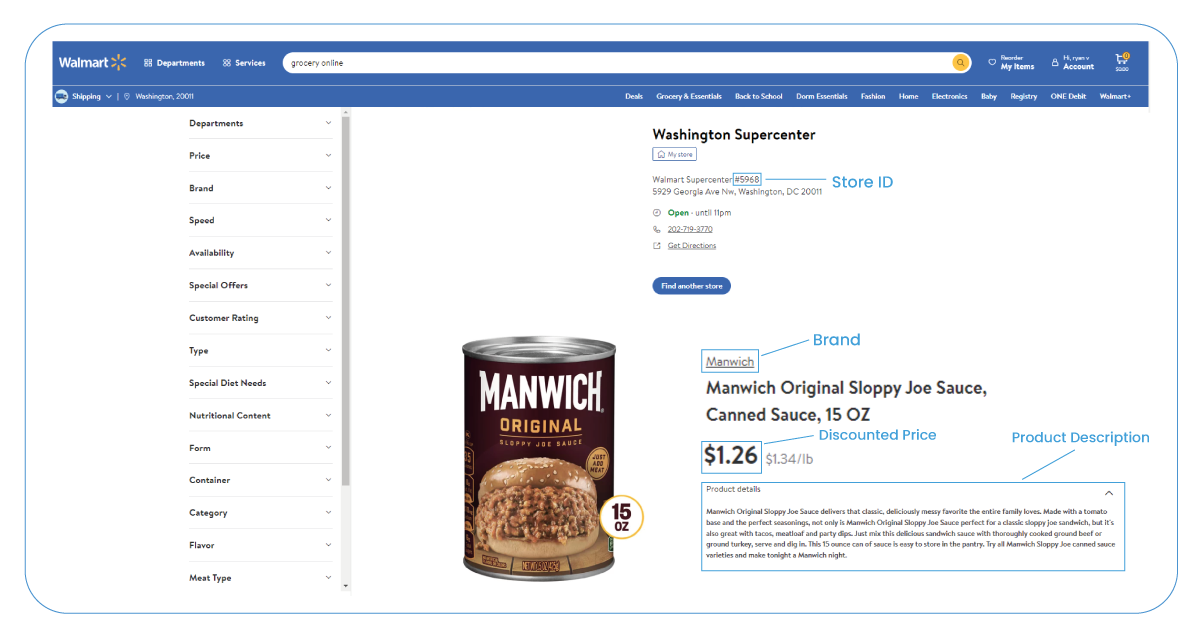

Finding the Data

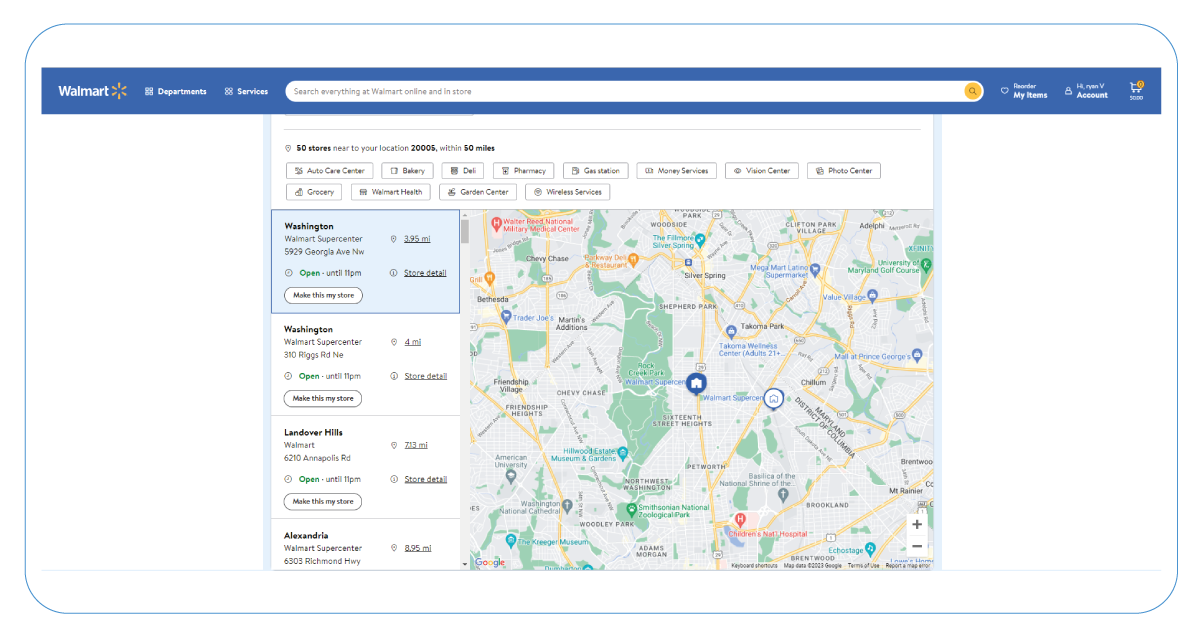

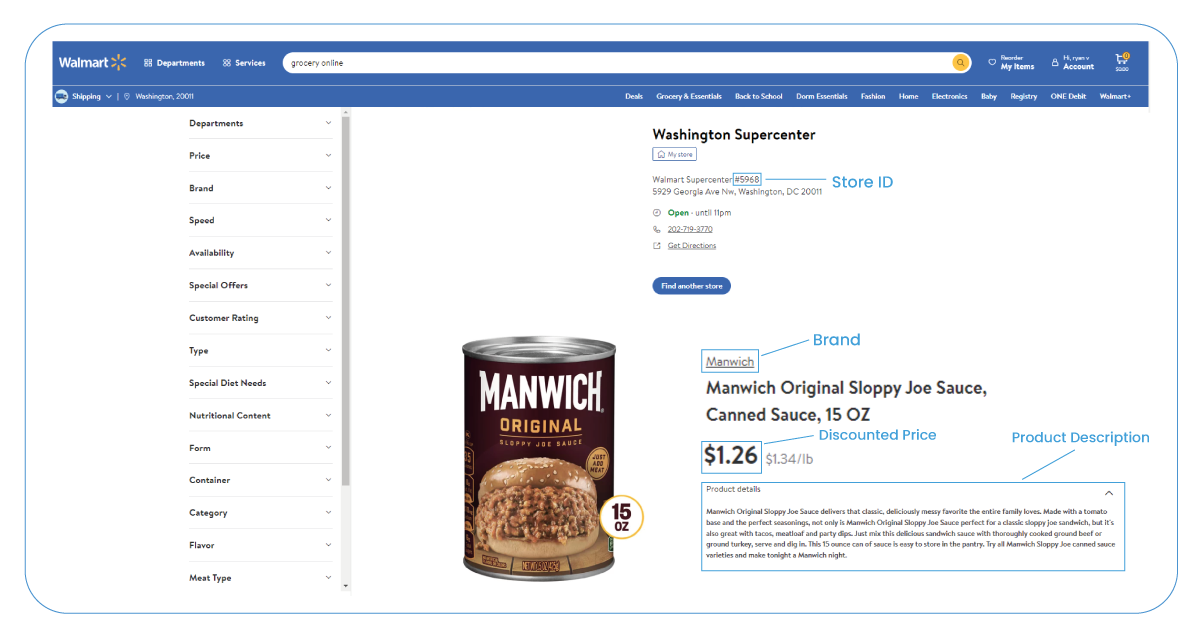

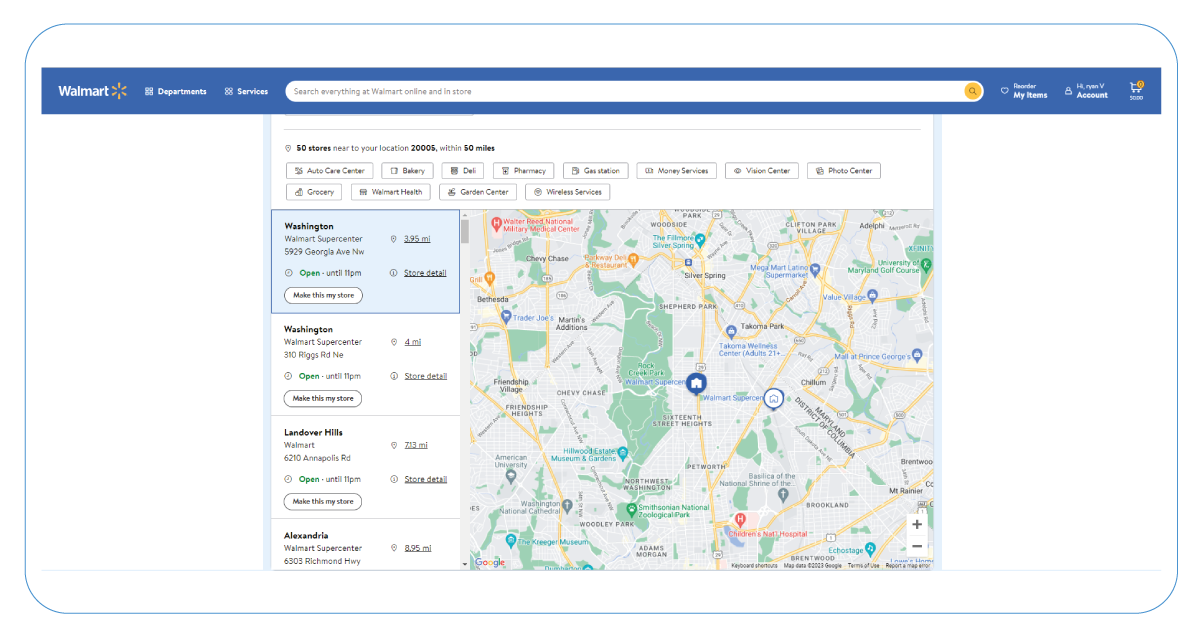

First, open any web browser and navigate to the desired Walmart store URL. For example, let's use the URL for Walmart store 5941 in Washington, DC:

Once the Walmart store page loads, locate the "Coupons" option on the left side of the page. Clicking on this option will display a list of coupons available specifically for Walmart store 5941.

You can refer to the provided GIF for a visual demonstration of obtaining the store URL. By following these steps, you can access the coupons section for the selected Walmart store and proceed with scraping the coupon details.

To access the HTML content of the web page and inspect its elements, follow these steps:

- Open the web page in your preferred browser.

- Right-click on any link or element on the page.

- From the context menu, select "Inspect" or "Inspect Element." This action will open the developer tools panel within the browser.

- In the developer tools panel, you will see the HTML content of the web page, formatted so that you can view and analyze the structure and elements of the page.

- Switch to the Network panel and click the "Clear" button to remove any previously captured requests. This resets the request table so that only new requests appear.

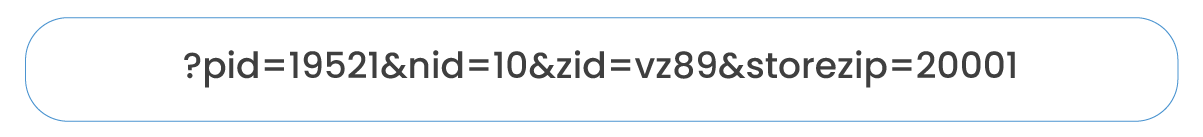

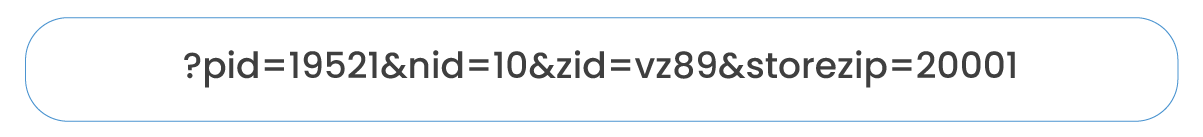

After clicking on the specific request, you will notice the corresponding Request URL:

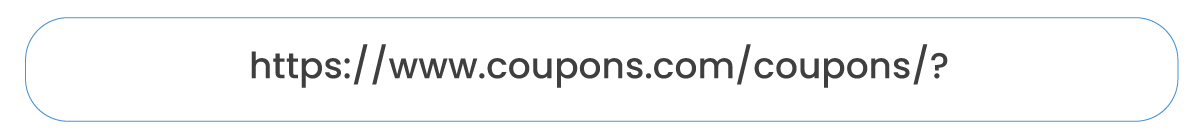

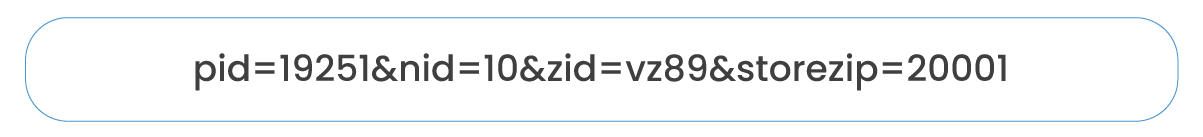

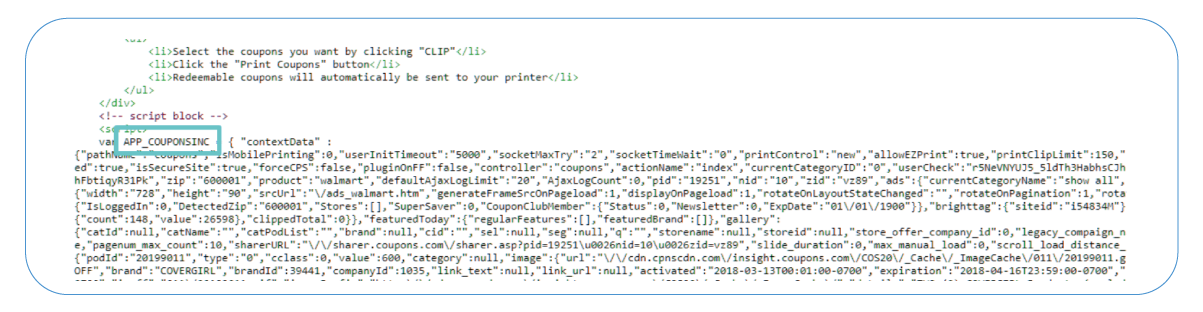

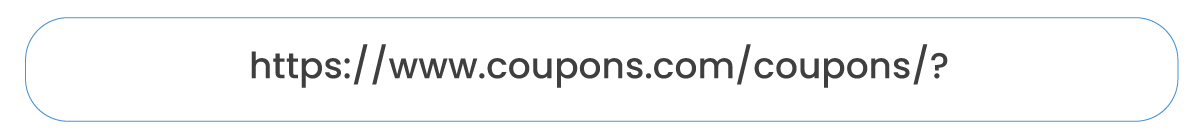

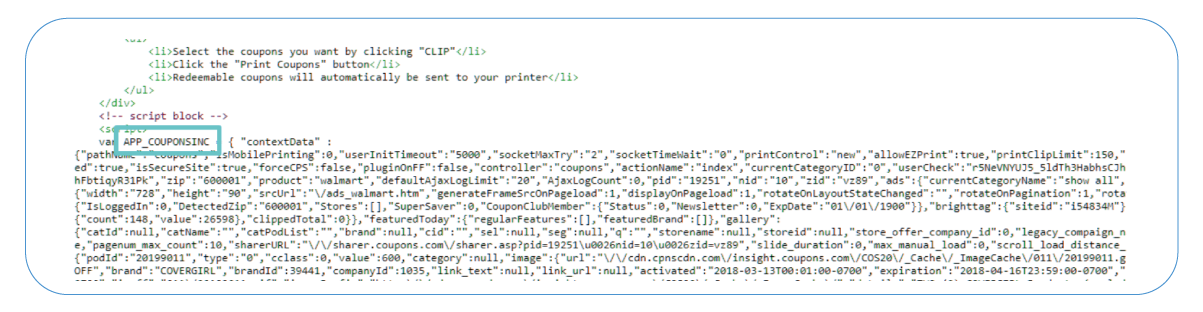

The next step is to identify the values of the "pid," "nid," and "storezip" parameters. You can find these variables in the page source of

Upon inspecting the page source, you will observe that these variables are in a JavaScript variable called "_wml.config". Regular expressions are helpful to filter and extract these variables from the page source. Once extracted, you can create the URL for the coupon endpoint.

By following this approach and utilizing regular expressions to filter the necessary variables, you can construct the URL for the coupon endpoint, which is helpful for further scraping and extracting coupon details from the "coupons.com" website.
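The regular-expression step can be sketched as follows. This is a minimal example under stated assumptions: the sample page source, the `key: value` layout inside `_wml.config`, and the `base_url` placeholder are all hypothetical, since the actual markup and endpoint address need to be confirmed in your own browser.

```python
import re
from urllib.parse import urlencode

# Parameter names mentioned in the tutorial.
PARAM_NAMES = ("pid", "nid", "storezip")


def extract_params(page_source):
    """Pull pid, nid, and storezip out of the page source with regexes.

    Assumes each value appears as `name: value`, with optional quotes
    around the name and the value (a guess at the _wml.config layout).
    """
    params = {}
    for name in PARAM_NAMES:
        pattern = r"\b" + re.escape(name) + r"""["']?\s*:\s*["']?([\w-]+)"""
        match = re.search(pattern, page_source)
        if match:
            params[name] = match.group(1)
    return params


def build_coupon_url(base_url, params):
    """Append the extracted parameters to the endpoint as a query string."""
    return base_url + "?" + urlencode(params)


# Hypothetical snippet standing in for the real page source.
sample_source = '<script>_wml.config = {"pid": "12345", "nid": "67890", "storezip": "20001"};</script>'
params = extract_params(sample_source)

# "https://example.com/coupons" is a placeholder, not the real endpoint.
coupon_url = build_coupon_url("https://example.com/coupons", params)
```

If a parameter is missing from the page, it is left out of the returned dictionary, so it is worth checking that all three keys are present before building the URL.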

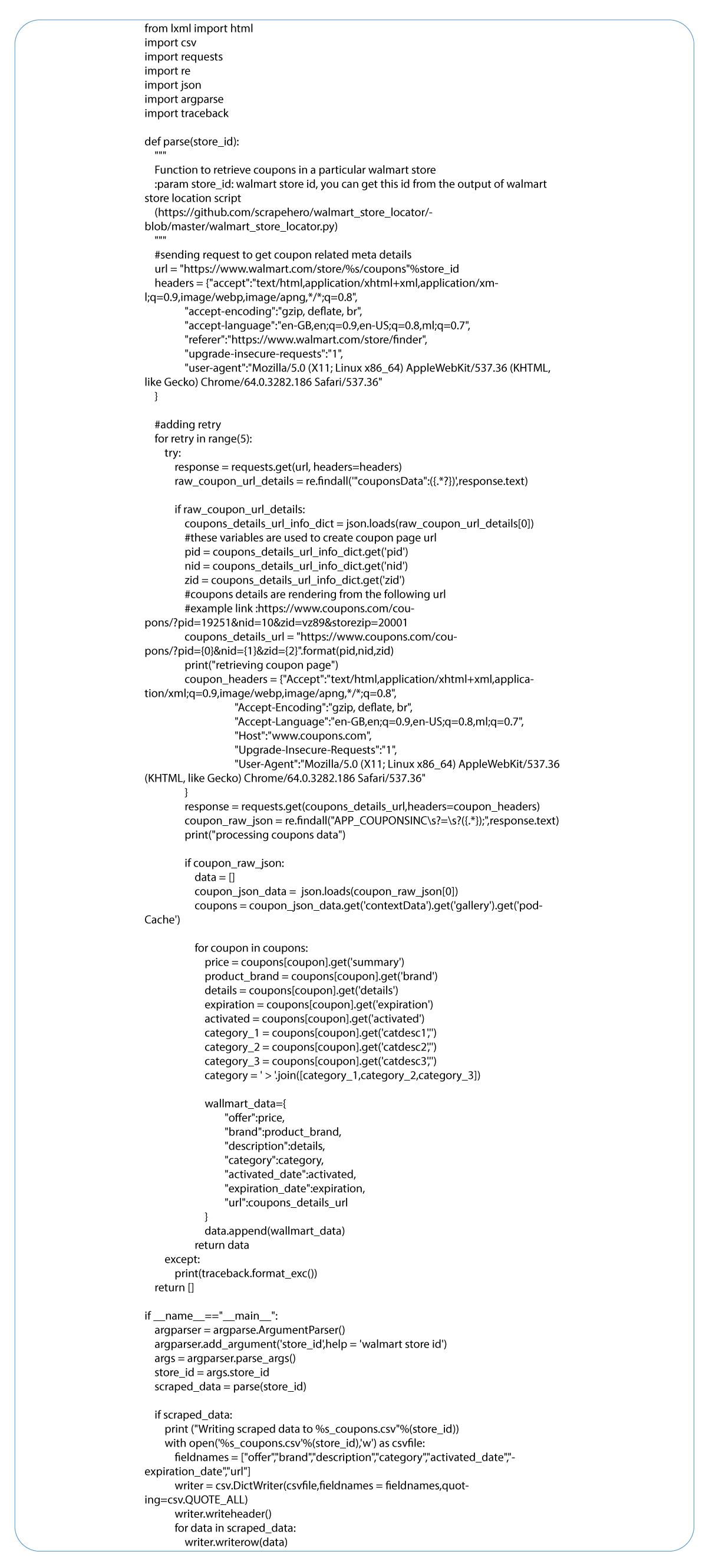

To retrieve the HTML content from the coupon URL, make an HTTP request to that URL. You can do this with Python's Requests library or any other tool for making HTTP requests.

Once you obtain the HTML content, search for the relevant data within the JavaScript variable "APP_COUPONSINC." This variable likely contains the desired coupon data in JSON format.

To view the extracted data in a structured format, you can paste it into a JSON parser. Several online JSON parsing tools are available, or you can use Python's built-in json module to parse and analyze the extracted data programmatically.

Following these steps, you can retrieve the HTML content from the coupon URL, locate the desired data within the JavaScript variable, and view it in a structured format using a JSON parser.
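One possible way to pull that variable out of the HTML is sketched below. It assumes the page contains an assignment of the form `APP_COUPONSINC = {...}` whose right-hand side is valid JSON; the sample HTML and the keys inside it are made up for illustration.

```python
import json
import re


def extract_js_object(html, var_name="APP_COUPONSINC"):
    """Return the JSON value assigned to `var_name` in `html`, or None.

    Assumes an assignment like `APP_COUPONSINC = {...}` where the
    right-hand side is valid JSON (an assumption, not a guarantee).
    """
    match = re.search(re.escape(var_name) + r"\s*=\s*", html)
    if match is None:
        return None
    try:
        # raw_decode parses one JSON value starting at the given index
        # and ignores whatever JavaScript follows it.
        value, _end = json.JSONDecoder().raw_decode(html, match.end())
    except json.JSONDecodeError:
        return None
    return value


# Hypothetical HTML standing in for the real coupon page.
sample_html = (
    "<script>window.APP_COUPONSINC = "
    '{"coupons": [{"brand": "Acme", "description": "Save on snacks"}]};'
    "</script>"
)
data = extract_js_object(sample_html)
```

In a live run, `html` would be the `.text` attribute of the response returned by `requests.get()` for the coupon URL. Using `raw_decode` avoids writing a regular expression that has to find the matching closing brace.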

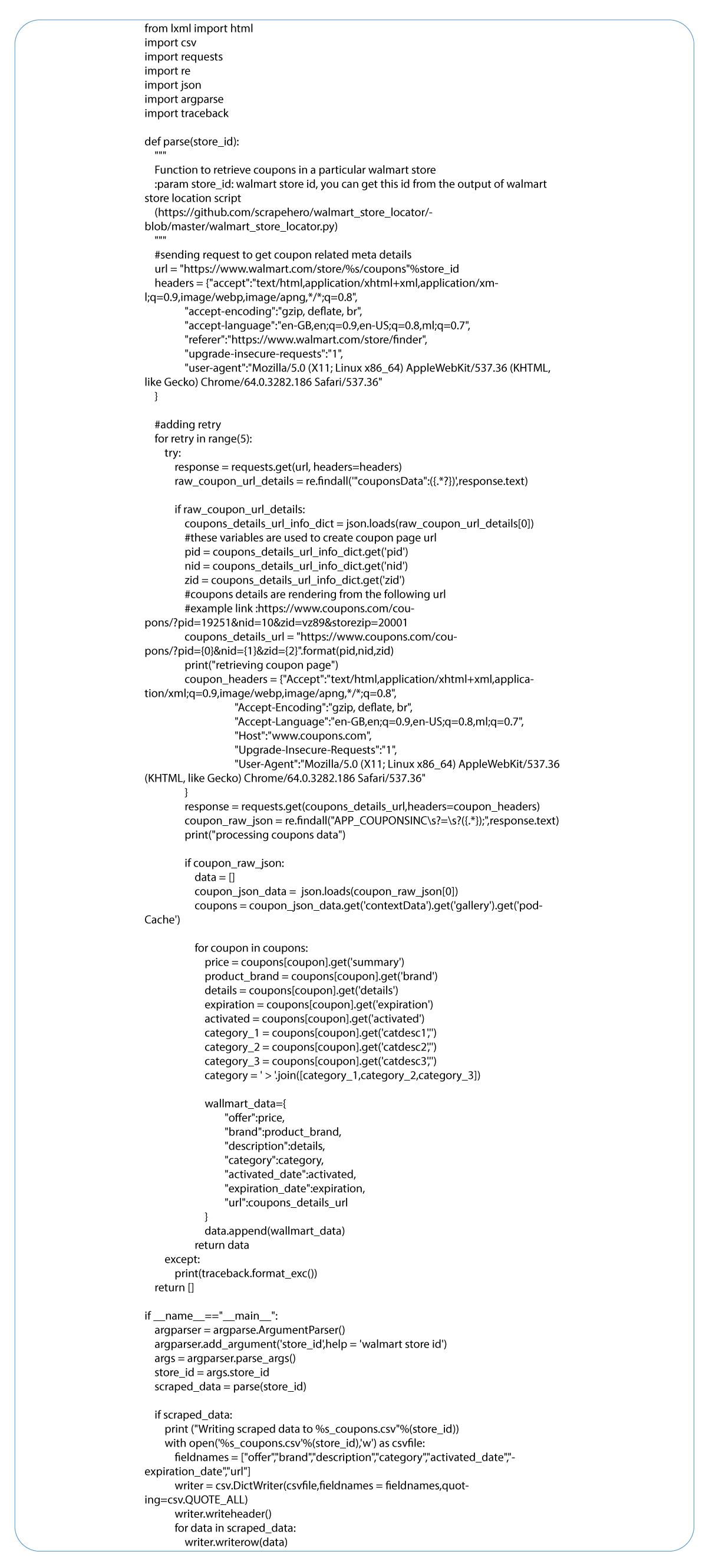

Building the Scraper

To follow along with this tutorial, ensure that you have Python 3 and pip installed on your computer. Please note that the code in this tutorial is written for Python 3 and may not work with Python 2.7.

If you are using most UNIX operating systems like Linux or Mac OS, Python may already be pre-installed. However, verifying if you have Python 3 installed is essential, as some systems still come with Python 2 by default.

To check your Python version, open a terminal (Linux and Mac OS) or Command Prompt (Windows), type the following command, and press Enter:

python --version

If the output displays something like "Python 3.x.x", you have Python 3 installed on your system. If the output shows "Python 2.x.x", you have Python 2 installed. If you receive an error message, Python is either not installed or not on your PATH.
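The same check can also be made from inside a script, which helps when several interpreters are installed. A minimal sketch (the function name `is_python3` is ours, not part of the tutorial's script):

```python
import sys


def is_python3(version_info=sys.version_info):
    """Return True when the major version number is 3 or higher."""
    return version_info[0] >= 3


if not is_python3():
    # Stop early with a clear message instead of failing later on syntax.
    sys.exit("This tutorial's code requires Python 3.")
```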

Install Packages

To run the Walmart store coupon scraper, you will need to install the following Python packages:

Python Requests: This package helps make HTTP requests and download the HTML content of web pages. You can find installation instructions and further documentation at the official Python Requests website: http://docs.python-requests.org/en/master/user/install/

Python LXML: LXML is a powerful library for parsing HTML and XML documents. It provides tools for navigating and extracting data from the HTML tree structure using XPath expressions. Installation instructions are available on the official LXML website: http://lxml.de/installation.html.

Unicode CSV: This package helps handle Unicode characters in the output file. You can install it using the following command in your terminal or command prompt:

To run the complete script, pass the store ID as an argument after the script name:

python walmart_coupon_retreiver.py store_id

For example, to find the coupon details of store 3305, run the following command:

python3 walmart_coupon_retreiver.py 3305
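The full script is not reproduced here, so the following is only a sketch of how a script could accept the store ID from the command line with the standard argparse module. The function names and the digits-only validation are assumptions for illustration.

```python
import argparse


def store_id_type(value):
    """Accept only numeric store IDs such as 3305 (an assumed rule)."""
    if not value.isdigit():
        raise argparse.ArgumentTypeError("store_id must contain digits only")
    return value


def parse_args(argv=None):
    """Parse the command line; argv=None means use sys.argv."""
    parser = argparse.ArgumentParser(
        description="Retrieve coupon details for one Walmart store."
    )
    parser.add_argument("store_id", type=store_id_type,
                        help="Walmart store ID, for example 3305")
    return parser.parse_args(argv)


# Equivalent to running: python3 walmart_coupon_retreiver.py 3305
args = parse_args(["3305"])
```

Keeping the store ID as a string preserves any leading zeros and makes it straightforward to reuse in URLs and file names.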

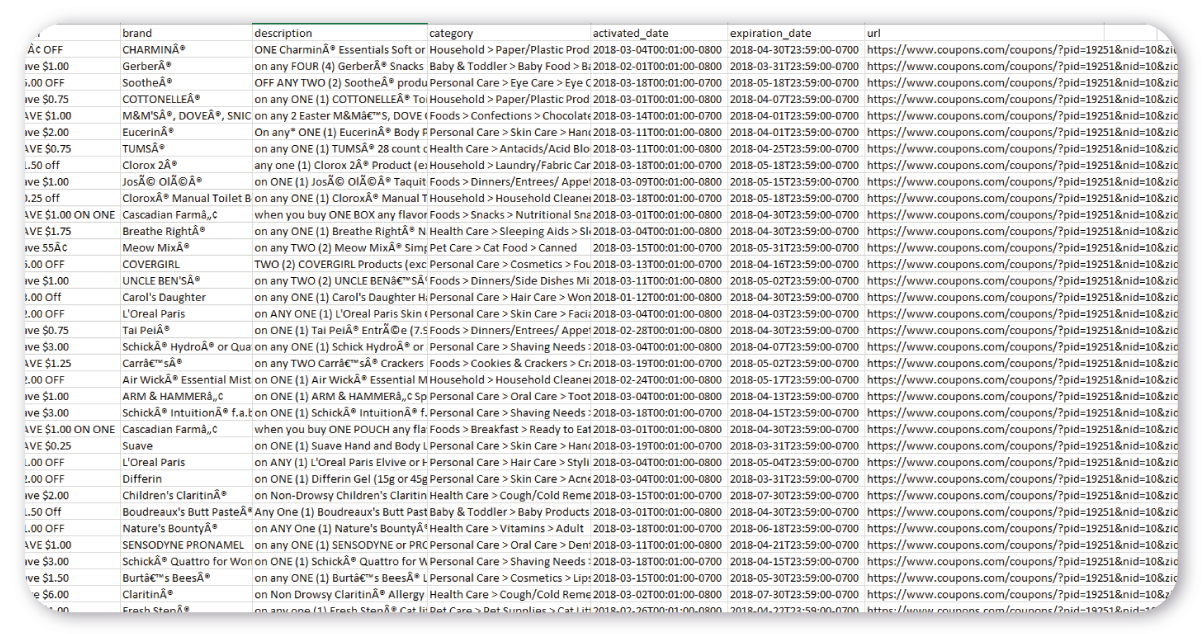

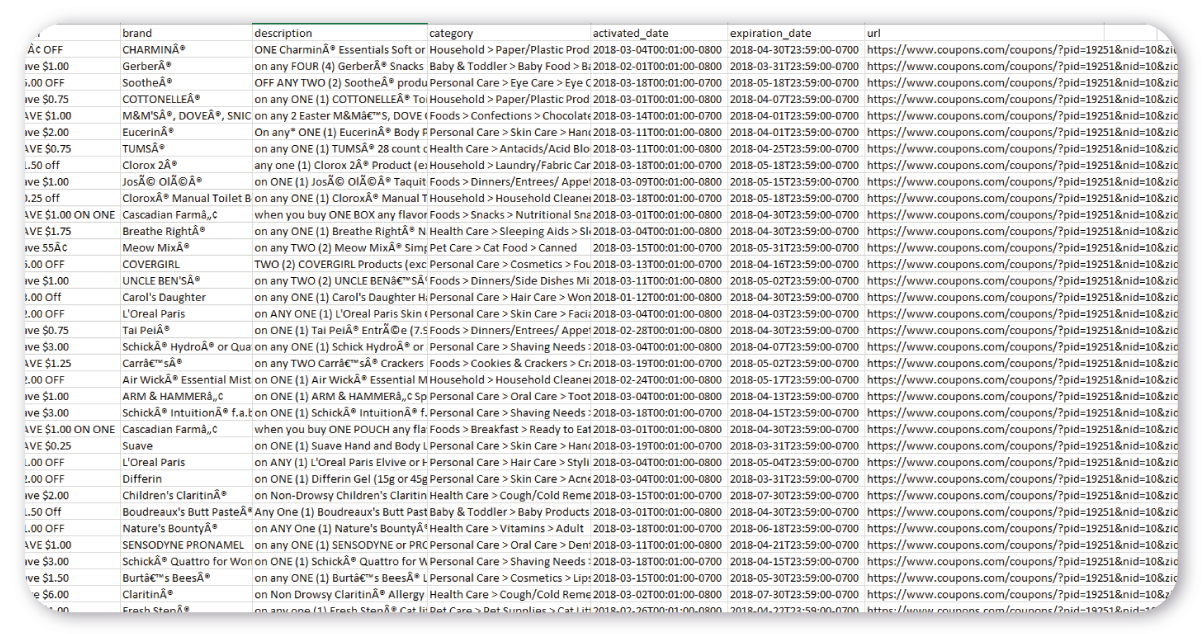

After executing the script, you should find a file named "3305_coupons.csv" in the same folder as the script. This CSV file will contain the extracted coupon details for Walmart store 3305.
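As a sketch of how such a file could be produced, the example below writes coupon records to "<store_id>_coupons.csv" using the data fields listed earlier as column headers. It uses Python 3's built-in csv module rather than the Unicode CSV package named in the install section, and the function names and sample record are hypothetical.

```python
import csv
import io

# Column headers taken from the tutorial's list of data fields.
FIELDS = [
    "Discounted Price", "Brand", "Category", "Product Description",
    "Activated Date", "Expired Date", "URL",
]


def coupons_to_csv(coupons):
    """Render coupon dicts as CSV text; missing fields are left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for coupon in coupons:
        writer.writerow(coupon)
    return buffer.getvalue()


def save_coupons(store_id, coupons):
    """Write the CSV next to the script, e.g. 3305_coupons.csv."""
    filename = f"{store_id}_coupons.csv"
    # newline="" keeps the csv module's own line endings intact.
    with open(filename, "w", newline="", encoding="utf-8") as handle:
        handle.write(coupons_to_csv(coupons))
    return filename


# Made-up record, for illustration only.
csv_text = coupons_to_csv([{"Brand": "Acme", "Discounted Price": "$1.00"}])
```

Opening the file with `encoding="utf-8"` handles non-ASCII characters in brand names and descriptions, which is the job the Unicode CSV package performed under Python 2.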

The output file is structured similarly to the following:

