Introduction

E-commerce has transformed business operations, making data essential for staying competitive. E-commerce data scraping enables companies to extract critical insights into pricing strategies, customer preferences, product trends, and competitor movements. Businesses can monitor market fluctuations and optimize their offerings by leveraging web scraping for online stores.

This guide walks you through scraping e-commerce data step by step, covering why it is needed, why it matters, and how to implement it. Companies use this approach to track competitor pricing, analyze demand patterns, and enhance customer engagement. Automated tools and scripts streamline data extraction, improving accuracy and efficiency.

With e-commerce data scraping, businesses can make informed decisions, improve product positioning, and refine marketing strategies. However, ethical considerations and compliance with website policies are crucial. By following a structured scraping approach, companies can harness real-time data to strengthen their market position and drive business growth effectively.

Why is E-commerce Data Scraping Needed?

With the rapid growth of online shopping, businesses must leverage real-time data to stay ahead in a competitive market. Web scraping for e-commerce data allows companies to extract valuable insights, enabling data-driven decision-making. Here's why e-commerce data scraping services are essential for business success:

- Price Comparison: Monitoring competitor pricing helps businesses adjust their pricing strategies to remain competitive. By analyzing real-time price fluctuations with e-commerce price comparison scraping, retailers can implement dynamic pricing models that maximize profitability.

- Product Trend Analysis: Tracking trending products ensures businesses stock and market relevant items. Identifying demand patterns helps retailers introduce high-demand products and optimize promotions.

- Customer Reviews & Sentiment Analysis: Analyzing customer feedback provides insights into product quality and service improvements. Understanding consumer sentiment enables brands to enhance customer satisfaction and build loyalty.

- Inventory & Stock Management: Retailers can monitor competitor stock levels and optimize their own inventory. Scraping stock and demand data helps avoid stockouts or overstocking, supporting efficient supply chain management.

- Market Expansion: Businesses exploring new regions can collect data on regional demand, popular products, and pricing trends. This approach allows for strategic market entry and competitive positioning.

Significance of E-commerce Data Scraping

E-commerce data scraping offers multiple advantages, making it a vital tool for businesses, marketers, and analysts. Its key benefits include:

- Data-Driven Decision Making: Enables businesses to make strategic decisions backed by real-time data.

- Improved Sales & Marketing: Helps businesses understand consumer behavior and tailor marketing efforts.

- Enhanced Customer Experience: Offers insights into customer expectations and pain points.

- Optimized Supply Chain: Helps monitor supply-demand gaps to prevent stockouts or overstocking.

- Automation & Efficiency: Reduces manual effort, ensuring accurate data collection with minimal errors.

Step-by-Step Guide to E-commerce Data Scraping

Step 1: Define Your Data Requirements

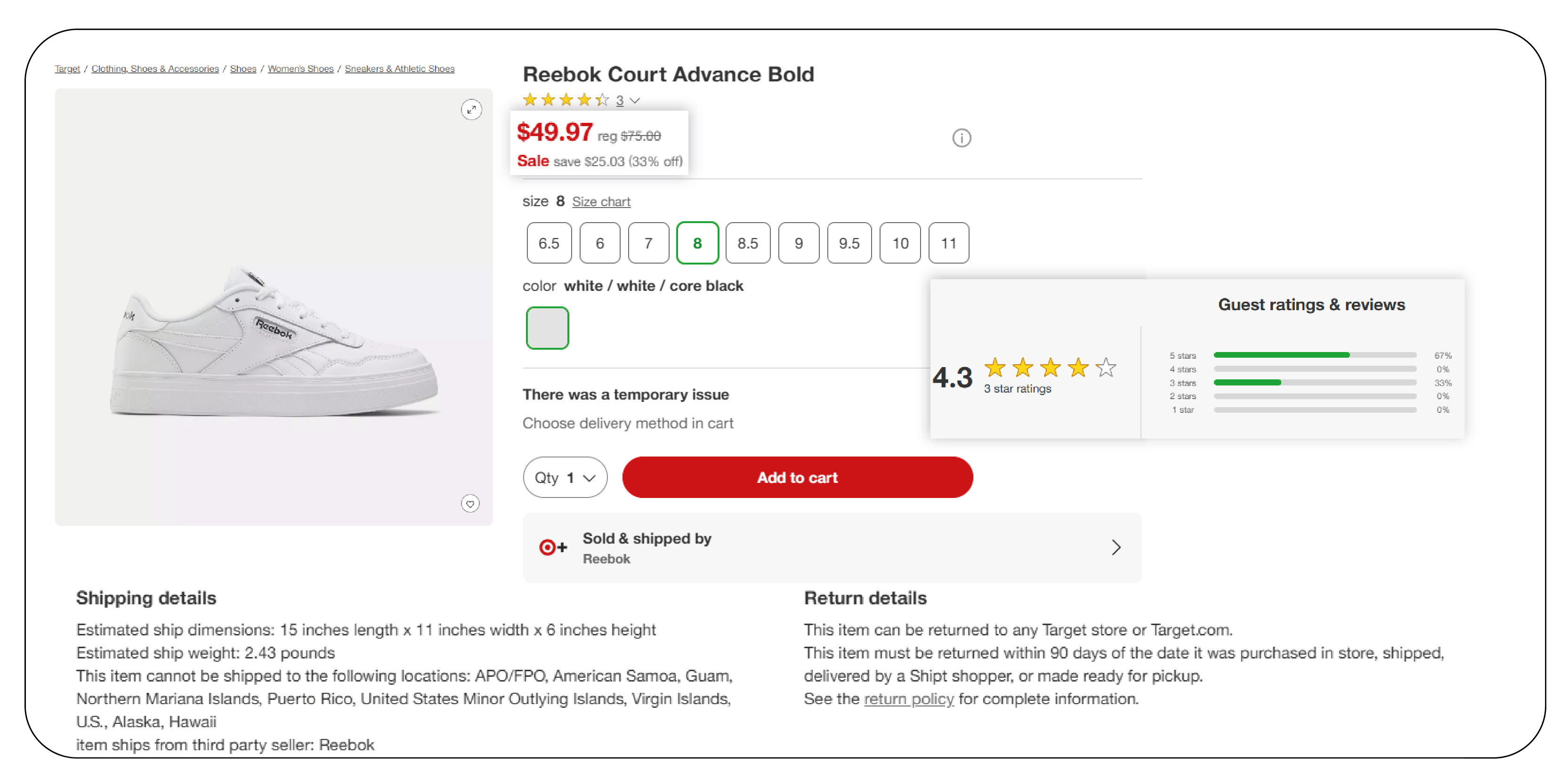

Before scraping, clearly define the objectives and data points you must extract. Some essential e-commerce data points include:

- Product Information: Name, description, specifications, images, brand

- Pricing & Discounts

- Customer Reviews & Ratings

- Stock Availability

- Shipping & Delivery Options

Defining requirements up front helps you choose the right scraping tools and techniques for the scope and complexity of the data.
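Writing the requirements down as a schema keeps every scraper and downstream step consistent. Below is a minimal sketch, assuming Python 3.9+. The `ProductRecord` class, its field names, and the `discount_pct` helper are illustrative choices, not a standard format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProductRecord:
    """One scraped product; fields mirror the data points listed above (illustrative)."""
    url: str                            # source page, later usable as a unique key
    name: str
    brand: Optional[str] = None
    description: Optional[str] = None
    price: Optional[float] = None       # current selling price
    list_price: Optional[float] = None  # pre-discount price, if the page shows one
    currency: str = "USD"               # assumption: set per target site
    rating: Optional[float] = None
    review_count: int = 0
    in_stock: Optional[bool] = None
    shipping_options: list[str] = field(default_factory=list)
    image_urls: list[str] = field(default_factory=list)

    @property
    def discount_pct(self) -> Optional[float]:
        """Discount relative to list price, or None if either price is missing."""
        if self.price is None or not self.list_price:
            return None
        return round(100 * (1 - self.price / self.list_price), 1)


lamp = ProductRecord(url="https://example.com/p/1", name="Desk Lamp", price=24.0, list_price=30.0)
print(lamp.discount_pct)  # 20.0
```

Using `Optional` fields makes missing data explicit rather than silently filling in empty strings or zeros.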

Step 2: Choose the Right Scraping Method

E-commerce data can be extracted using different methods, including:

- Web Scraping Tools: Scrapy (a crawling framework), BeautifulSoup (an HTML parser), and Selenium (browser automation) are common choices for data extraction.

- APIs: Many e-commerce platforms provide APIs for structured data access.

- Automated Scraping Services: Businesses can use third-party services for large-scale data extraction.

- Manual Data Collection: Suitable for small-scale research but not for big data analysis.

Choosing the correct method depends on data volume, frequency, and complexity.
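When a platform offers an API, the response is usually already structured, so most of the work is mapping fields. The sketch below assumes a hypothetical JSON payload shape (`products`, `title`, `price`, `stock`). Real APIs differ, typically require an API key, and come with their own terms of use.

```python
import json


def parse_api_products(payload: str) -> list[dict]:
    """Map a hypothetical JSON product-listing response to flat records."""
    data = json.loads(payload)
    records = []
    for item in data.get("products", []):
        price = item.get("price")
        records.append({
            "name": item.get("title"),
            # Prices may arrive as strings or numbers; normalize to float
            "price": float(price) if price is not None else None,
            # Treat a missing or null stock count as out of stock
            "in_stock": (item.get("stock") or 0) > 0,
        })
    return records


sample = ('{"products": [{"title": "Desk Lamp", "price": "24.99", "stock": 3},'
          ' {"title": "Mug", "price": 8, "stock": 0}]}')
print(parse_api_products(sample))
# [{'name': 'Desk Lamp', 'price': 24.99, 'in_stock': True}, {'name': 'Mug', 'price': 8.0, 'in_stock': False}]
```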

Step 3: Set Up the Scraping Environment

To start scraping, you need:

- Programming Language: Python is widely used due to its robust scraping libraries.

- Libraries & Frameworks: Install libraries like BeautifulSoup, Scrapy, or Selenium for effective scraping.

- Proxy & User Agents: Avoid getting blocked by using rotating proxies and user-agent strings.

- Cloud or Local Storage: Store scraped data in databases, spreadsheets, or cloud storage.
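The proxy and user-agent item can be sketched as a small helper that returns the settings for each request. This is a minimal sketch: the user-agent strings and proxy addresses are hypothetical placeholders, and you should only route traffic through proxies you are authorized to use.

```python
import itertools
import random

# Hypothetical values: replace with real user-agent strings and your own proxies
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]
PROXIES = ["http://proxy1.example:8080", "http://proxy2.example:8080"]

_proxy_cycle = itertools.cycle(PROXIES)  # round-robin over the proxy list


def next_request_config() -> dict:
    """Return headers and proxies for the next request: random UA, next proxy in turn."""
    proxy = next(_proxy_cycle)
    return {
        "headers": {"User-Agent": random.choice(USER_AGENTS)},
        "proxies": {"http": proxy, "https": proxy},
    }
```

With the `requests` library, the result can be unpacked straight into a call, e.g. `requests.get(url, timeout=10, **next_request_config())`, since `requests.get` accepts `headers` and `proxies` keyword arguments.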

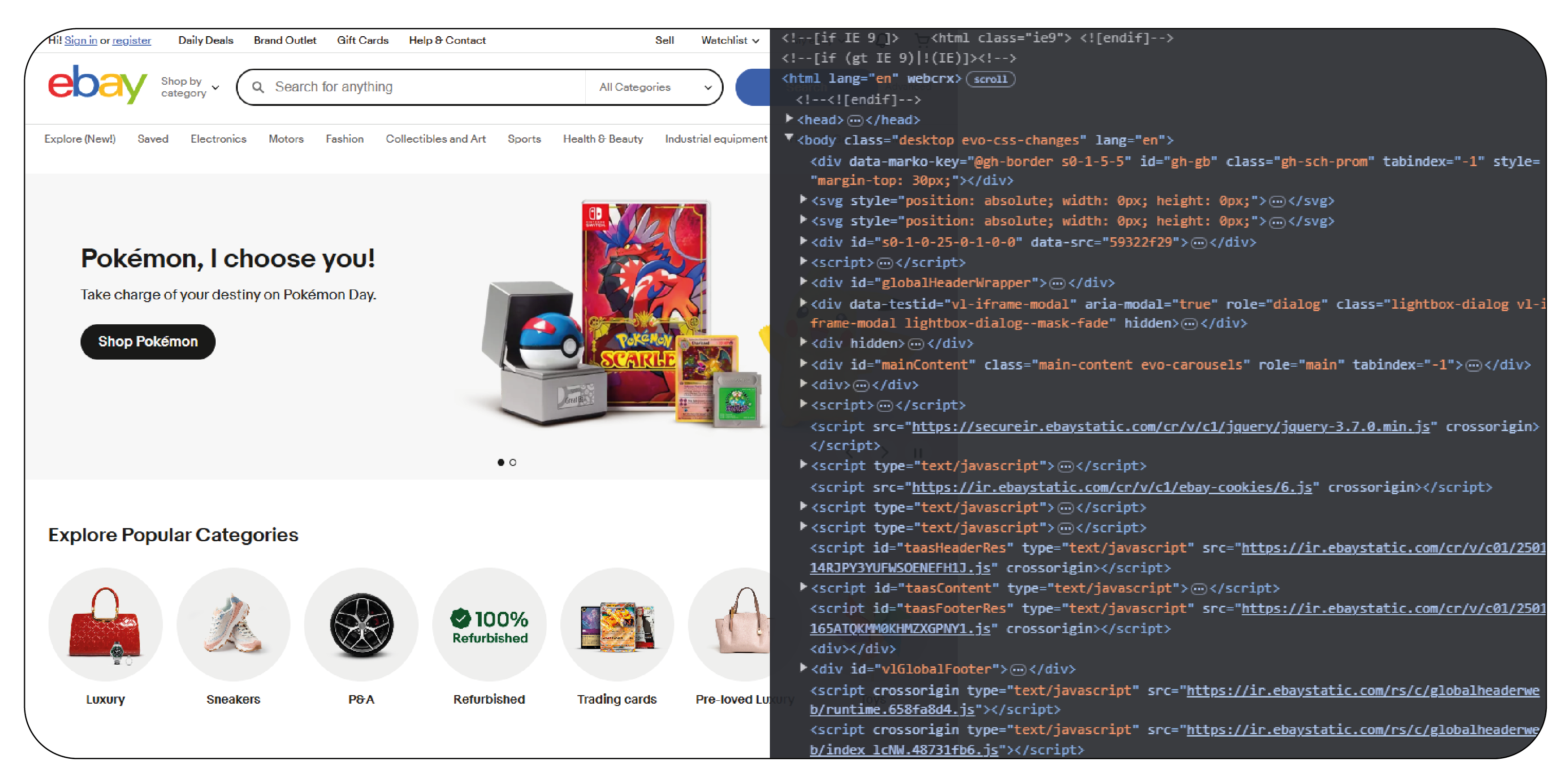

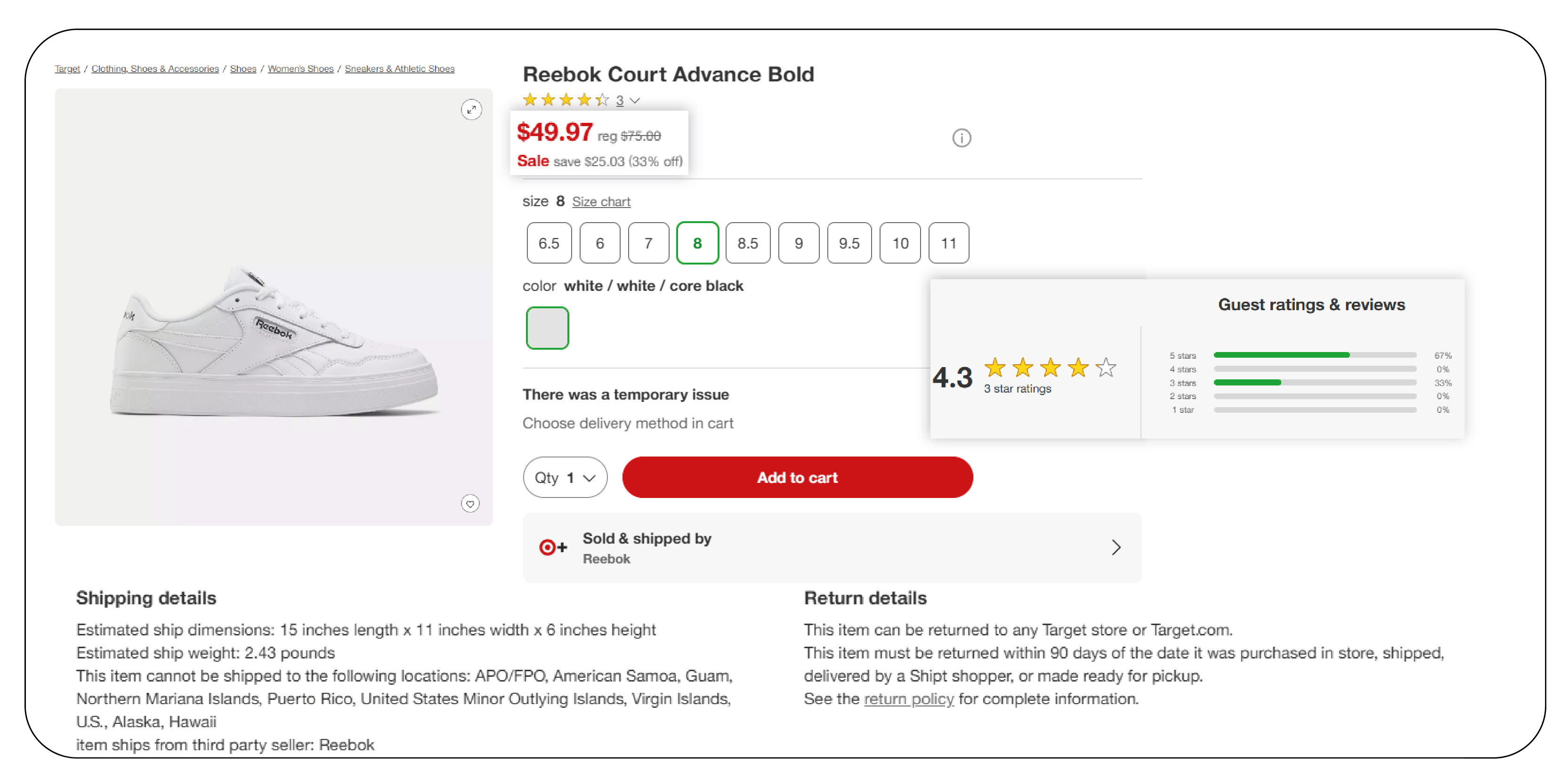

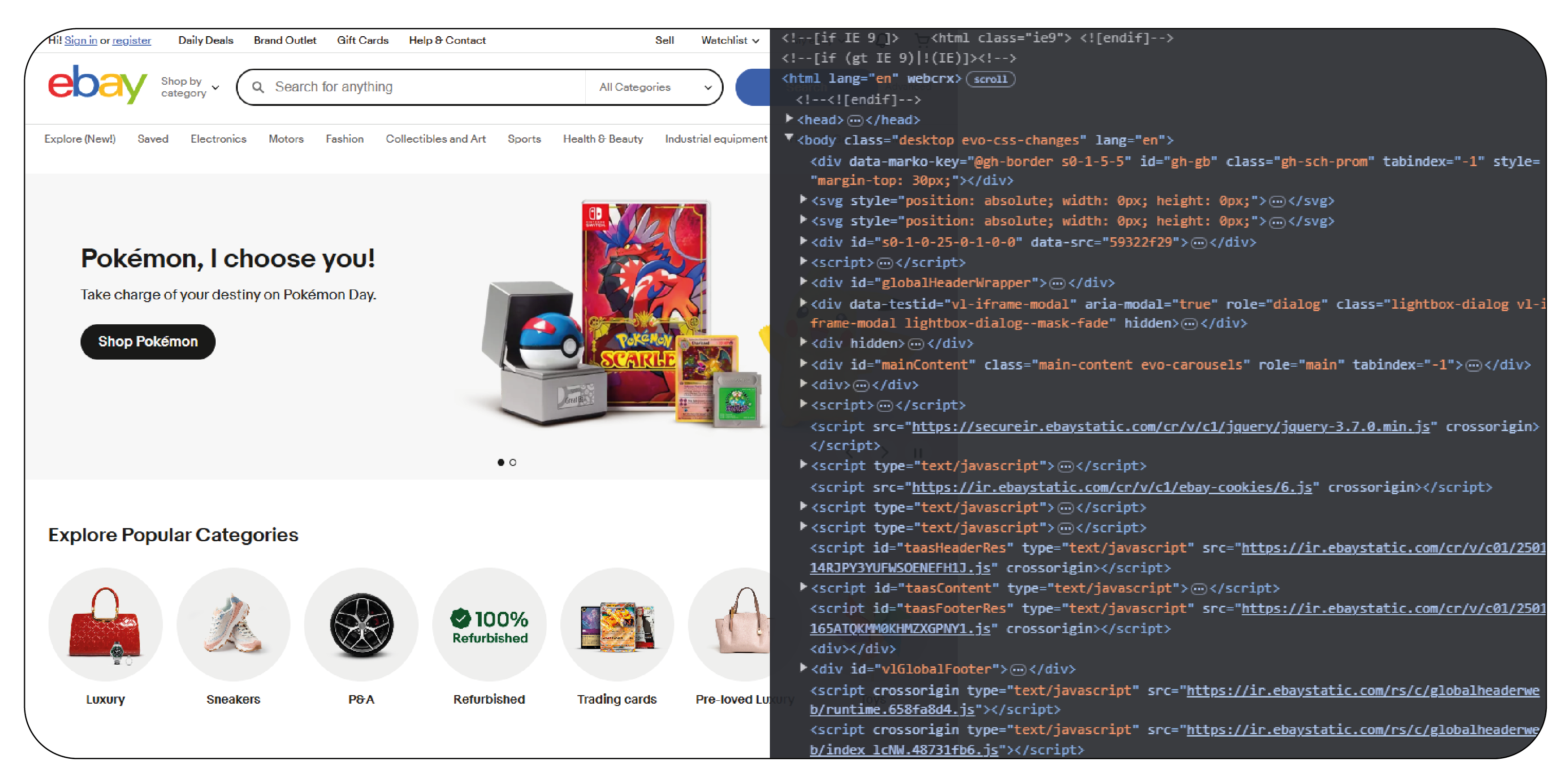

Step 4: Identify Target Website Structure

Understanding the website's structure is crucial for extracting accurate data:

- Use your browser's Inspect Element tool (DevTools) to locate data points.

- Identify the HTML tags, classes, or IDs containing relevant information.

- Check for AJAX or JavaScript-rendered content that may require Selenium or Puppeteer.
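A quick way to tell static from JavaScript-rendered content is to fetch the raw HTML (for example with `requests`) and check whether the selector you found in DevTools is actually present. Here is a minimal sketch using BeautifulSoup. `needs_js_rendering` is a hypothetical helper, and the selector is only an example. A missing element can also mean the site returned a block or consent page, so inspect the raw HTML when in doubt.

```python
from bs4 import BeautifulSoup


def needs_js_rendering(raw_html: str, css_selector: str) -> bool:
    """True if the selector seen in DevTools is absent from the raw, un-rendered HTML.

    DevTools shows the DOM after JavaScript runs; a plain HTTP request only sees
    the server's HTML. If the element is missing here, a browser tool such as
    Selenium is likely needed.
    """
    soup = BeautifulSoup(raw_html, "html.parser")
    return soup.select_one(css_selector) is None


static_page = '<h1 class="product-title">Desk Lamp</h1>'
js_page = '<div id="root"></div><script src="app.js"></script>'
print(needs_js_rendering(static_page, "h1.product-title"))  # False
print(needs_js_rendering(js_page, "h1.product-title"))      # True
```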

Step 5: Write the Scraping Script

A simple Python web scraping script using BeautifulSoup:

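```python
import requests
from bs4 import BeautifulSoup

url = 'https://example.com/product-page'
headers = {'User-Agent': 'Mozilla/5.0'}

# Fetch the page; time out on stalled connections and raise on HTTP errors (4xx/5xx)
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()

soup = BeautifulSoup(response.text, 'html.parser')

# Extract product title; find() returns None if the element is missing
title_tag = soup.find('h1', class_='product-title')
title = title_tag.get_text(strip=True) if title_tag else None

print("Product Title:", title)
```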

To extend this basic script:

- Handle pagination for multi-page data scraping.

- Implement error handling for failed requests.

- Use delays to avoid triggering anti-scraping mechanisms.
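Pagination, error handling, and delays can be combined in one loop. The sketch below uses only the standard library and assumes the site paginates with a `?page=N` query parameter. Many sites use other schemes, such as a "next" link or a cursor, and a `base_url` that already has a query string would need different handling. `fetch_html` and `scrape_pages` are hypothetical helpers. `parse` is whatever function extracts items from one page, such as BeautifulSoup code like the script above.

```python
import time
import urllib.request
from typing import Callable, Optional


def fetch_html(url: str, retries: int = 3, backoff: float = 2.0) -> Optional[str]:
    """GET a page with a browser-like User-Agent, retrying with exponential backoff."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    for attempt in range(retries):
        try:
            with urllib.request.urlopen(req, timeout=10) as resp:
                return resp.read().decode("utf-8", errors="replace")
        except OSError:  # URLError, HTTPError, and socket timeouts all subclass OSError
            if attempt < retries - 1:
                time.sleep(backoff ** attempt)  # waits 1s, then 2s, ...
    return None  # all attempts failed


def scrape_pages(base_url: str, parse: Callable[[str], list], max_pages: int = 50,
                 delay: float = 1.5,
                 fetch: Callable[[str], Optional[str]] = fetch_html) -> list:
    """Collect items from base_url?page=1, ?page=2, ... until a page fails or is empty."""
    items = []
    for page in range(1, max_pages + 1):
        html = fetch(f"{base_url}?page={page}")  # assumption: ?page=N pagination
        if html is None:
            break  # request failed even after retries
        page_items = parse(html)
        if not page_items:
            break  # empty page: assume we are past the last page
        items.extend(page_items)
        time.sleep(delay)  # polite pause between page requests
    return items
```

Passing `fetch` as a parameter keeps the loop testable without network access, and `max_pages` guards against looping forever if a site keeps returning the same page.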

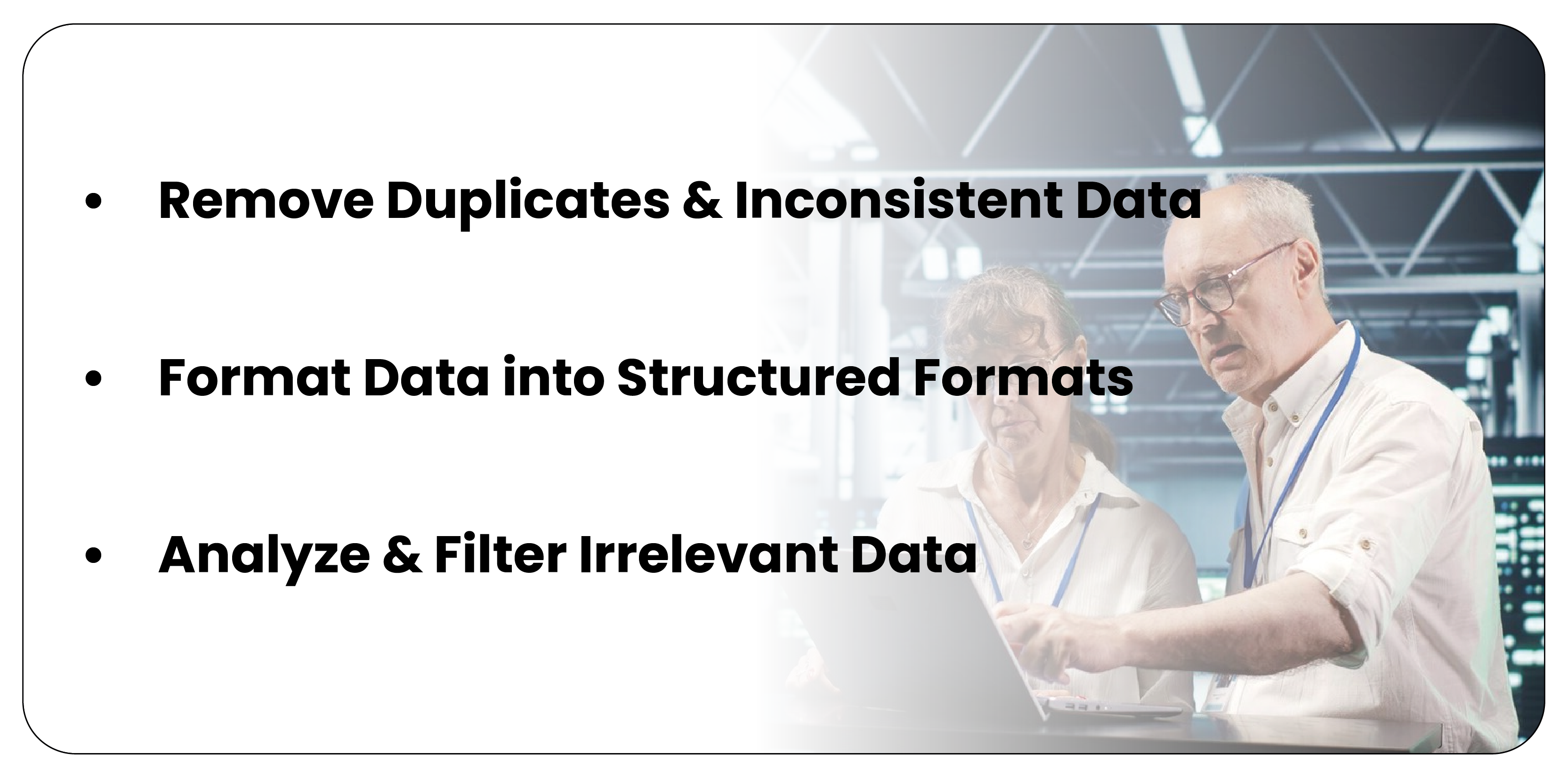

Step 6: Data Cleaning & Storage

Once data is extracted, it needs to be processed:

- Remove Duplicates & Inconsistent Data

- Format Data into Structured Formats (CSV, JSON, or database storage)

- Analyze & Filter Irrelevant Data
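The cleaning steps above can be sketched for rows stored as dictionaries. This minimal sketch assumes each row has `url`, `name`, and `price` keys and that prices use `.` as the decimal separator and `,` for thousands. European formats such as `1.299,00` would need different parsing. `parse_price` and `clean_records` are hypothetical helper names.

```python
import re
from typing import Optional, Union


def parse_price(value: Union[str, float, None]) -> Optional[float]:
    """Convert '$1,299.00'-style strings to 1299.0; return None if no number is found."""
    if isinstance(value, (int, float)):
        return float(value)
    if not value:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    return float(match.group().replace(",", "")) if match else None


def clean_records(rows: list[dict]) -> list[dict]:
    """Drop rows without a name, normalize prices, and de-duplicate by URL (first wins)."""
    seen, cleaned = set(), []
    for row in rows:
        name = (row.get("name") or "").strip()
        if not name or row.get("url") in seen:
            continue  # incomplete row or duplicate URL
        seen.add(row.get("url"))
        cleaned.append({**row, "name": name, "price": parse_price(row.get("price"))})
    return cleaned
```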

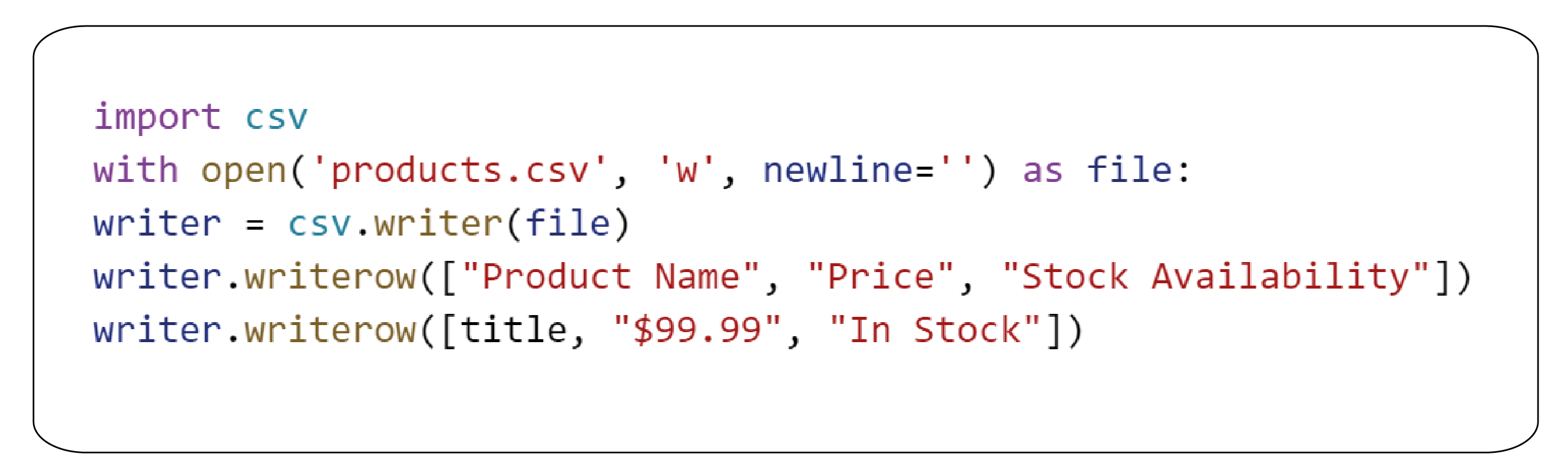

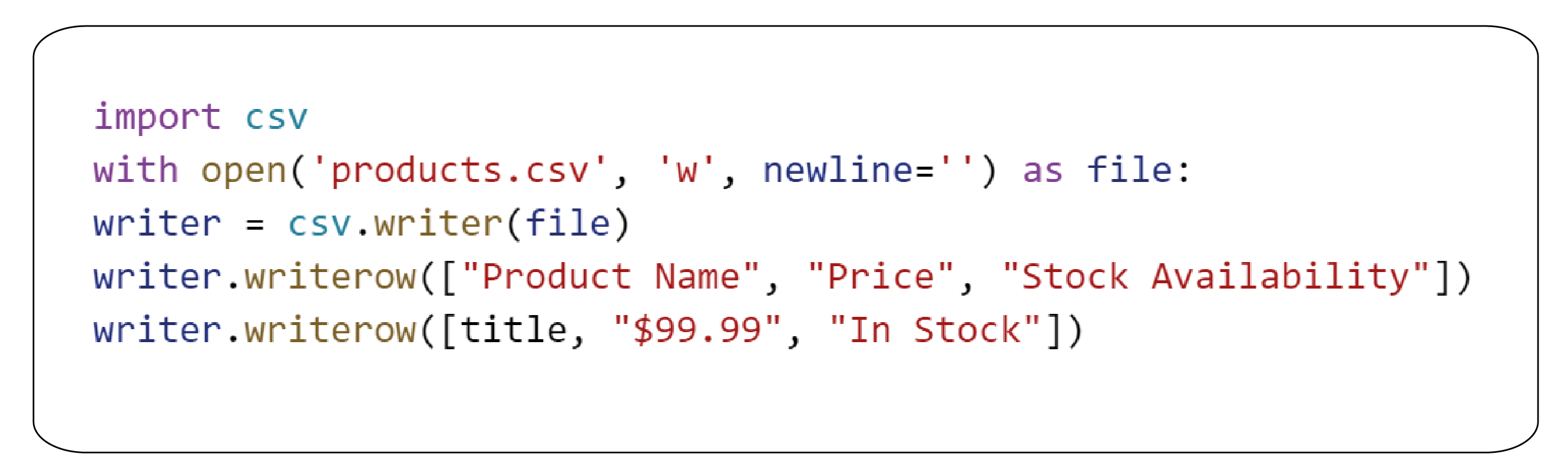

Example of saving scraped data to a CSV file:
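A minimal sketch using Python's built-in `csv` module, assuming each record is a dictionary with the same keys. The file name `products.csv` and the sample rows are placeholders.

```python
import csv


def save_to_csv(rows: list[dict], path: str) -> None:
    """Write a list of dicts to CSV; the header comes from the first row's keys.

    csv.DictWriter raises ValueError if a later row has keys missing from the header.
    """
    if not rows:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


save_to_csv(
    [{"name": "Desk Lamp", "price": 24.99}, {"name": "Mug", "price": 8.0}],
    "products.csv",
)
```

Opening the file with `newline=""` is what the `csv` documentation recommends, because it prevents blank lines between rows on Windows.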

Step 7: Avoiding Scraping Restrictions

E-commerce platforms implement anti-scraping mechanisms. Here's how to reduce the risk of being blocked while scraping responsibly:

- Use Proxies & VPNs to prevent IP bans.

- Rotate User-Agents to mimic real-user behavior.

- Limit Request Frequency with time.sleep() to avoid detection.

- Use Headless Browsers like Puppeteer or Selenium for JavaScript-heavy sites.

- Respect robots.txt rules to ensure ethical scraping.
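Python's built-in `urllib.robotparser` can automate the robots.txt check and read any declared `Crawl-delay`. This is a minimal sketch in which the bot name and the sample robots.txt rules are hypothetical. In practice you would call `set_url("https://<site>/robots.txt")` and `read()` to load the live file. Keep in mind that robots.txt is only one signal, and a site's terms of service may impose further limits.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "MyPriceBot/1.0"  # hypothetical bot name; identify your scraper honestly


def make_robot_checker(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(parser: RobotFileParser, default: float = 2.0) -> float:
    """Use the site's Crawl-delay if it declares one, otherwise a conservative default."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


robots = make_robot_checker("User-agent: *\nDisallow: /checkout/\nCrawl-delay: 5\n")
for url in ["https://shop.example/product/123", "https://shop.example/checkout/cart"]:
    status = "allowed" if robots.can_fetch(USER_AGENT, url) else "disallowed"
    print(status, url)
print("delay between requests:", polite_delay(robots), "seconds")
```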

Step 8: Automate & Schedule Scraping

For continuous data collection, automate the scraping process:

- Use Cron Jobs to schedule scripts at regular intervals.

- Run scrapers on cloud services such as AWS Lambda for large-scale scraping.

- Integrate with a database so scraped data is updated on each run.

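Example of scheduling a Python script with cron. Open your crontab for editing:

```bash
crontab -e
```

Then add a line like this one, which runs the scraper every day at 9:00 (server local time):

```
0 9 * * * /usr/bin/python3 /home/user/scraper.py
```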
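For the database-integration point, an upsert (insert or update) keeps one row per product and refreshes it on every scheduled run. Here is a minimal sketch with Python's built-in `sqlite3`. It assumes the product URL is a stable unique key and that SQLite is version 3.24 or later, which `ON CONFLICT ... DO UPDATE` requires. The table layout is illustrative.

```python
import sqlite3


def upsert_products(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """Insert new products or refresh existing ones, keyed by URL."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url TEXT PRIMARY KEY,
               name TEXT,
               price REAL,
               in_stock INTEGER,
               updated_at TEXT DEFAULT CURRENT_TIMESTAMP
           )"""
    )
    conn.executemany(
        """INSERT INTO products (url, name, price, in_stock)
           VALUES (:url, :name, :price, :in_stock)
           ON CONFLICT(url) DO UPDATE SET
               name = excluded.name,
               price = excluded.price,
               in_stock = excluded.in_stock,
               updated_at = CURRENT_TIMESTAMP""",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # use a file path such as "products.db" in practice
upsert_products(conn, [{"url": "u1", "name": "Desk Lamp", "price": 24.99, "in_stock": 1}])
upsert_products(conn, [{"url": "u1", "name": "Desk Lamp", "price": 19.99, "in_stock": 0}])
print(conn.execute("SELECT url, price, in_stock FROM products").fetchall())
# [('u1', 19.99, 0)]
```

If you need price history rather than only the latest value, append rows to a separate table with a timestamp instead of overwriting them.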

Step 9: Analyzing Scraped Data

Once cleaned, scraped data can be analyzed for business intelligence:

- Use Excel, Power BI, or Python Pandas for data visualization.

- Apply machine learning to predict customer behavior.

- Generate dynamic reports on product trends and competitor pricing.

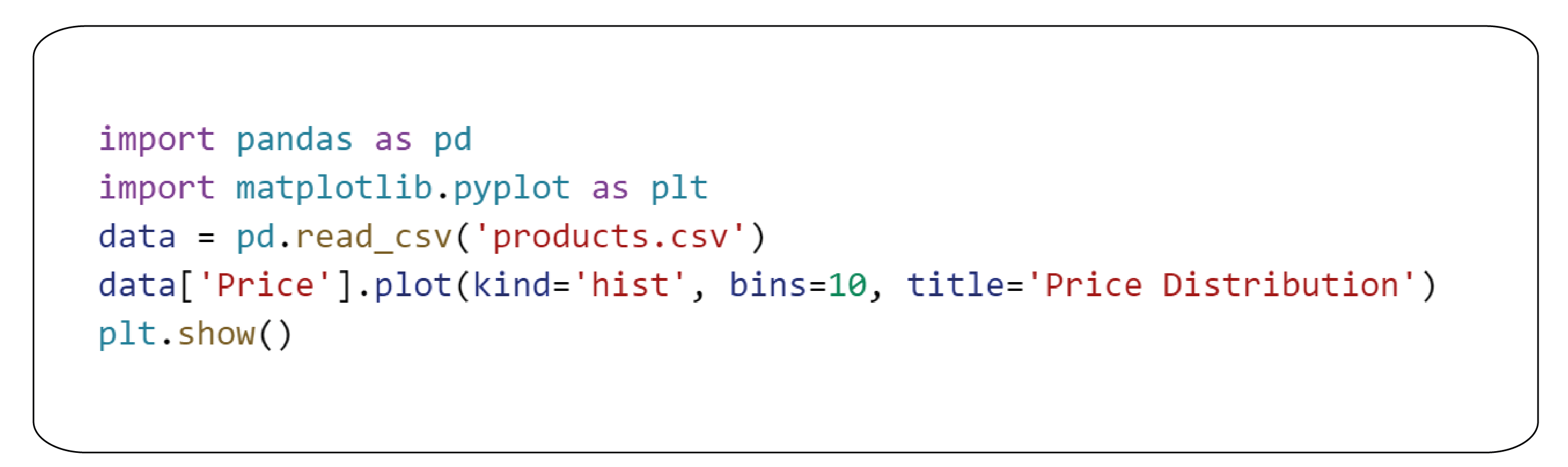

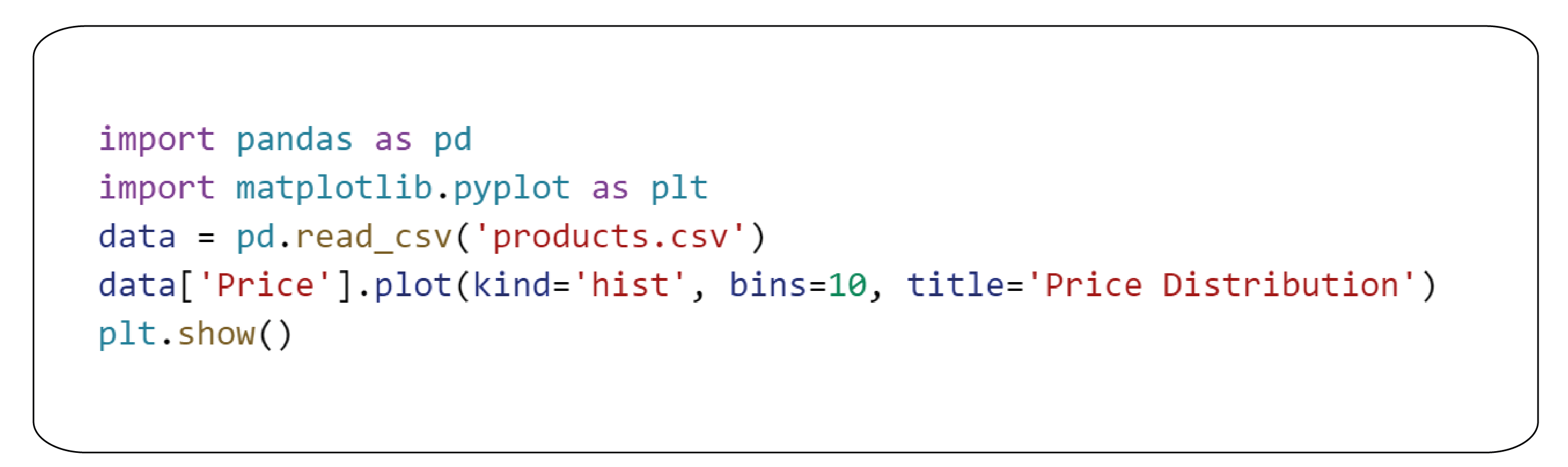

Example of data visualization using Pandas:
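The following is a minimal pandas sketch of competitor price comparison. The DataFrame contains hypothetical sample values standing in for the output of the earlier steps, and its column names are assumptions. The code pivots prices into one column per competitor and computes the daily price spread. `DataFrame.plot()` can chart this table directly if matplotlib is installed.

```python
import pandas as pd

# Hypothetical sample data: one row per (date, competitor, product)
df = pd.DataFrame({
    "date": pd.to_datetime(["2024-05-01", "2024-05-01", "2024-05-02", "2024-05-02"]),
    "competitor": ["ShopA", "ShopB", "ShopA", "ShopB"],
    "product": ["Desk Lamp"] * 4,
    "price": [24.99, 22.50, 23.99, 22.50],
})

# One row per date, one column per competitor
price_table = df.pivot_table(index="date", columns="competitor", values="price", aggfunc="mean")

# Gap between the most expensive and cheapest offer each day
price_table["spread"] = price_table.max(axis=1) - price_table.min(axis=1)
print(price_table)

# With matplotlib installed, chart the price trends:
# price_table.drop(columns="spread").plot(title="Desk Lamp price by competitor")
```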

How Can Product Data Scrape Help You?

1. Accurate & High-Quality Data Extraction – We use advanced techniques to extract e-commerce data precisely, covering product details, pricing, reviews, and stock levels.

2. Customized Web Scraping Solutions – Our web scraping for e-commerce websites is tailored to meet specific business needs, ensuring relevant and actionable insights.

3. Real-Time Competitive Intelligence – Our services enable businesses to dynamically track competitors' pricing, promotions, and product trends using eCommerce dataset scraping.

4. Scalable & Automated Processes – We provide scalable scraping solutions that handle vast amounts of data efficiently, ensuring timely updates for market analysis.

5. Ethical & Secure Scraping – We follow best practices and compliance guidelines to ensure legal, ethical, and secure data collection, minimizing risks and maximizing business value.

Conclusion

E-commerce data scraping is a powerful technique for businesses seeking insights into pricing, market trends, and customer behavior. By scraping e-commerce websites, companies can efficiently gather, clean, and analyze vast amounts of data to drive strategic decisions.

This step-by-step approach helps businesses extract e-commerce data for pricing optimization, product trend analysis, and competitive benchmarking. Utilizing automation, cloud computing, and AI-powered analytics enhances the efficiency of data collection and interpretation.

However, responsible scraping is essential: adhering to legal guidelines and ethical considerations ensures compliance with website policies. When implemented correctly, eCommerce dataset scraping provides businesses with actionable intelligence, enabling them to stay competitive and maximize growth in the evolving digital marketplace.

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.
