This tutorial is an educational resource for learning how to build a web scraping tool. It emphasizes understanding the code and how it works rather than simply copying and pasting it. Websites change over time, so the code may need to be adapted to keep working. The goal is to help learners customize and maintain their own web scrapers as websites evolve.

We will use Python 3 and commonly used Python libraries to simplify the process. We will also use a powerful, free web scraping tool called Selectorlib. Together, these tools make scraping liquor product data more efficient and manageable.

List of Data Fields

- Name

- Size

- Price

- Quantity

- InStock: whether the liquor is in stock

- Delivery Available: whether the liquor is available for delivery

- URL

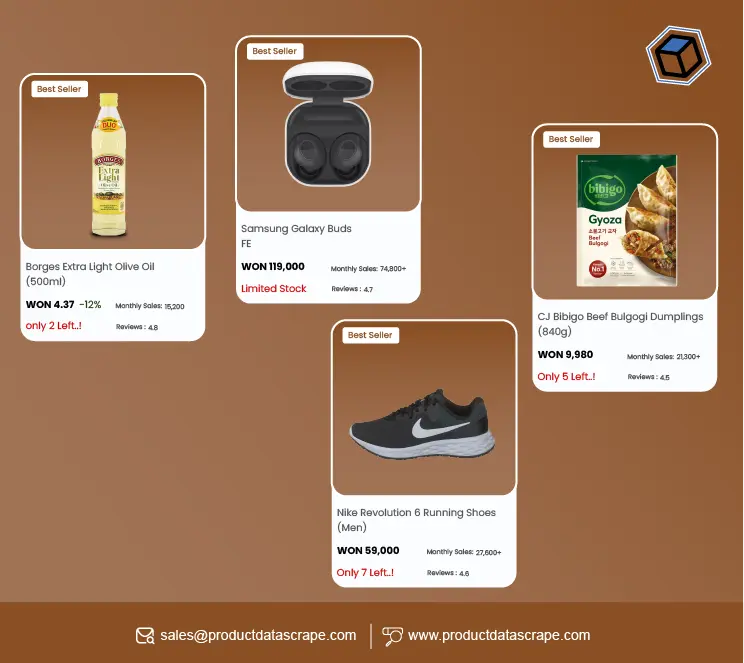

We will save the data as a CSV file, which you can open in Excel. It will appear like this:

Installing the Required Packages for Running the Total Wine and More Scraper

To scrape liquor prices and delivery status from the Total Wine and More store, we will follow these steps.

To follow along with this web scraping tutorial, you need Python 3 installed on your system. You can install it by following the instructions in the official Python documentation.

Once you have Python 3 installed, you need two libraries: Python Requests and Selectorlib. Install them with pip3, the package installer for Python 3. Open your terminal or command prompt and run `pip3 install requests` and `pip3 install selectorlib`.

The Python Code

Create a file named products.py and add the following Python code to it:

The provided code performs the following actions:

- Reads a list of Total Wine and More product page URLs from a file called "urls.txt".

- Uses a Selectorlib YAML file, "selectors.yml", to specify the data elements to scrape from each TotalWine.com product page.

- Requests each URL and extracts the desired data using the Selectorlib library.

- Stores the scraped data in a CSV spreadsheet named "data.csv".

Create the YAML file "selectors.yml"

We used a file called "selectors.yml" to specify the data elements to extract from Total Wine product pages. You can create this file with a web scraping tool called Selectorlib.

Selectorlib is a powerful tool that simplifies selecting, marking up, and extracting data from web pages. With the Selectorlib Web Crawler Chrome extension, you can easily mark the data you need to collect and generate the corresponding CSS selectors or XPaths.

Selectorlib can make the data extraction process more visual and intuitive, allowing us to focus on the specific data elements we want to extract without manually writing complex CSS selectors.

To use Selectorlib, install the Selectorlib Web Crawler Chrome extension and use it to mark the data you want to extract from web pages. The tool then generates the necessary CSS selectors or XPaths. You can save these in a YAML file such as "selectors.yml" and use them in your Python code for efficient data extraction.

Follow these steps to mark up the fields you need with the Selectorlib Chrome extension. First, install the Selectorlib Web Crawler Chrome extension and navigate to the web page you want to crawl. Once on the page, open the extension by clicking its icon. Then use the provided tools to select and mark the fields you want to collect: hover over elements to highlight them and click to select them. After marking all the desired fields, click the "Highlight" button to preview the selectors and make sure they match the correct data. If the preview looks good, click the "Export" button to download the selectors.yml file. This file contains the CSS selectors generated by the extension, which your Python code can use to collect the specified data from web pages. Save selectors.yml in the same directory as your Python script for easy access.

The template file looks like this:

Running the Total Wine and More Scraper

To specify the URLs you want to scrape, create a text file named "urls.txt" in the same directory as your Python script. In "urls.txt", add the product page URLs you want to scrape, each on its own line.
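If urls.txt grows large or is edited by hand, a little validation helps. The sketch below uses a hypothetical helper, load_product_urls, which is not part of Selectorlib or the original script. It skips blank lines, duplicate entries, and URLs whose host is not www.totalwine.com. The host check is an assumption you may want to relax.

```python
from urllib.parse import urlparse


def load_product_urls(path="urls.txt", allowed_host="www.totalwine.com"):
    """Read one URL per line, skipping blanks, duplicates and off-site links."""
    urls = []
    seen = set()
    with open(path, encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            url = line.strip()
            if not url:
                continue  # ignore empty lines
            parts = urlparse(url)
            # Keep only http(s) URLs on the expected host (an assumed rule).
            if parts.scheme not in ("http", "https") or parts.netloc != allowed_host:
                print(f"Line {line_number}: skipping unexpected URL {url!r}")
                continue
            if url not in seen:  # preserve file order, drop repeats
                seen.add(url)
                urls.append(url)
    return urls
```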

Run the Total Wine data scraper with the command `python3 products.py`.

Common Challenges and Limitations of Self-Service Web Scraping Tools and Copied Internet Scripts

Unmaintained code and scripts are a significant pitfall because they deteriorate over time and become incompatible with website changes. Websites are continuously updated and modified, which can make existing code ineffective or break it entirely. Regular maintenance lets the code adapt to evolving website structures and keep serving its intended purpose. By staying proactive and keeping code up to date, developers can mitigate these issues and keep their scripts effective.

Here are some common issues that can arise when using unmaintained tools:

Changing CSS Selectors: If the website's structure changes, the CSS selectors used to extract data, such as the "Price" selector in the selectors.yml file, may become outdated or ineffective. Regular updates are needed to adapt to these changes and ensure accurate data extraction.
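One way to notice a stale selector early is to measure how often each field comes back empty across a batch of pages. The helpers below are a hypothetical sketch. The 50% threshold is an arbitrary assumption, and treating None, empty strings, and empty lists as "missing" is a heuristic rather than a Selectorlib rule.

```python
from collections import Counter


def field_failure_rates(records, required):
    """Fraction of scraped records in which each required field came back empty."""
    missing = Counter()
    for record in records:
        for field in required:
            # Treat None, empty strings and empty lists as missing values.
            if record.get(field) in (None, "", []):
                missing[field] += 1
    total = len(records) or 1  # avoid division by zero on an empty batch
    return {field: missing[field] / total for field in required}


def stale_selectors(records, required, threshold=0.5):
    """Fields that are empty in more than `threshold` of the records."""
    rates = field_failure_rates(records, required)
    return [field for field, rate in rates.items() if rate > threshold]
```

If stale_selectors() reports a field such as "price" after a run, that selector in selectors.yml is a good first place to look.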

Location Selection Complexity: To select the user's "local" store, websites may require additional variables or methods beyond geolocated IP addresses. If the code does not handle this complexity, it may have difficulty retrieving location-specific data.

Addition or Modification of Data Points: Websites often introduce new data points or modify existing ones, which can affect the code's ability to extract the desired information. Without regular maintenance, the code may miss essential data or attempt to extract outdated information.

User Agent Blocking: Websites may block specific user agents to prevent automated scraping. If the code uses a blocked user agent, it may face restrictions or be denied access to the website.
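With Requests, the User-Agent is simply a request header. By default, Requests identifies itself as python-requests/&lt;version&gt;, which is easy for a website to filter. The sketch below sets a custom header on a Session. The helper name make_session and the example browser string are assumptions, and a different User-Agent does not guarantee access.

```python
import requests

# An example browser-style User-Agent string (an assumption; use your own).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def make_session(user_agent=BROWSER_UA):
    """Return a requests.Session that sends the given User-Agent on every request."""
    session = requests.Session()
    # Session.headers is merged into each request made through this session.
    session.headers.update({"User-Agent": user_agent, "Accept-Language": "en-US,en;q=0.9"})
    return session


# The header Requests would send if you did not override it.
print(requests.utils.default_user_agent())
```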

Access Pattern Blocking: Websites may use security measures that detect and block scraping based on access patterns. If the code follows a predictable scraping pattern, it can trigger these measures and have difficulty accessing the desired data.
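A common way to make access less predictable, and lighter on the server, is to pause for a random interval between requests. In this sketch, fetch_all and its 2 to 6 second range are hypothetical choices. The fetch argument is any function that downloads one URL, and sleep is injectable so the behavior can be tested without waiting.

```python
import random
import time


def fetch_all(urls, fetch, min_delay=2.0, max_delay=6.0, sleep=time.sleep):
    """Call fetch(url) for each URL, waiting a random interval between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:  # no pause before the first request
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

With a Requests session, this could be called as fetch_all(urls, lambda u: session.get(u, timeout=30)).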

IP Address Blocking: Websites may block specific IP addresses or entire IP ranges to prevent scraping. If the code's IP address, or the IP addresses supplied by a proxy provider, are blocked, access to the website may be restricted or denied.
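When you use a proxy provider, Requests accepts a proxies mapping from URL scheme to proxy URL. The sketch below cycles through a list of proxies so that consecutive requests go out through different addresses. The helper proxy_rotation is hypothetical, and the proxy URLs in the usage note are placeholders.

```python
from itertools import cycle


def proxy_rotation(proxy_urls):
    """Return an endless iterator of Requests-style proxies dicts, one proxy per step."""
    if not proxy_urls:
        raise ValueError("at least one proxy URL is required")
    # The same proxy is used for both plain HTTP and HTTPS traffic.
    return ({"http": proxy, "https": proxy} for proxy in cycle(proxy_urls))
```

For example, create rotation = proxy_rotation(["http://proxy1.example:8080", "http://proxy2.example:8080"]). Then call session.get(url, proxies=next(rotation), timeout=30) for each request.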

Conclusion: With a full-service solution, you can dig deeper into data analysis and use it to monitor the prices and brands of your favorite wines. This gives you more comprehensive insights and lets you make informed decisions based on accurate, up-to-date information.

At Product Data Scrape, we ensure that our Competitor Price Monitoring Services and Mobile App Data Scraping uphold the highest standards of business ethics across all operations. We have multiple offices around the world to fulfill our customers' requirements.
