AliExpress, an online retail service under the Alibaba Group's ownership,

operates as a conglomerate of small businesses primarily in China and other regions like

Singapore. Its extensive product catalog spans gadgets and apparel to home appliances and

electronics, catering to global online shoppers. Given this diversity, AliExpress is a rich data

source in the digital era.

This blog embarks on the journey of extracting AliExpress data. Specifically, we

will delve into scraping digital camera product data from AliExpress and storing it

systematically in a CSV file for analysis and reference. This tutorial opens the door to

leveraging web scraping techniques for market research and staying informed about market

conditions.

Why Scrape AliExpress?

Scraping data from AliExpress, the formidable e-commerce platform, holds numerous

compelling advantages extending to businesses and individuals. This practice offers a gateway to

strategic benefits, from market research to competitive analysis. Here are some noteworthy

reasons to engage in AliExpress data scraping:

Market Trends Analysis: Scrape AliExpress data to access an

extensive product listings, prices, and descriptions repository. This invaluable resource helps

to monitor evolving market trends, stay aligned with shifting consumer preferences, and identify

emerging product categories.

Competitor Insights: Delve into the strategies of your competitors.

Scraping product data from AliExpress allows you to closely track their product offerings,

pricing tactics, and customer engagement, offering valuable insights to refine your business

strategies and maintain a competitive edge.

Product Research and Development: AliExpress e-commerce data

scraping is a wellspring of product research and development inspiration. By examining customer

reviews, ratings, and feedback, you can pinpoint pain points and preferences, guiding your

innovation efforts effectively.

Pricing Strategy: Crafting a competitive pricing strategy is

paramount. Scraping pricing data from AliExpress empowers you to benchmark your prices against

similar products, ensuring that your pricing approach strikes the right balance between customer

attractiveness and profitability.

Increased Customer Understanding: The reviews and comments offered

by AliExpress customers offer valuable information into consumer behavior and preferences.

Harnessing this data enables you to tailor your products and marketing strategies, resonating

more effectively with your target audience.

Supply Chain Optimization: Retail data scraping streamlines supply

chain management for businesses engaged in dropshipping or sourcing products. Access to precise

and current product information facilitates informed inventory management and sourcing

decisions.

Data-Driven Decision-Making: In today's data-driven landscape,

informed choices are pivotal. Scrape AliExpress digital camera data to equip with the data

needed to make informed decisions, reducing risks and amplifying growth opportunities.

The Attributes

Before diving into the scraping process, we must define the specific attributes we

aim to extract for each product from AliExpress. These attributes serve as the building blocks

of our data collection:

Product URL: The unique web address pointing to a specific product

on the AliExpress website.

Product Name: It signifies the name or title assigned to the

product within the AliExpress platform.

Sale Price: This reflects the discounted selling price of a

product, which is the amount customers pay after any applicable discounts are applied.

MRP (Maximum Retail Price): It represents the market price or the

total retail price of the product without any discounts.

Discount Percentage: This attribute quantifies the percentage to

reduce MRP to arrive at the sale price, reflecting the value proposition offered to customers.

Rating: The overall rating assigned to the product based on

customer reviews and feedback, offering insights into its quality and satisfaction level.

Number of Reviews: The total number of customer reviews received

for the product indicates its popularity and engagement.

Seller Name: The name of the seller or store responsible for

selling the product on the AliExpress platform.

These attributes collectively form the foundation for our data extraction process,

enabling us to effectively compile comprehensive product information from AliExpress.

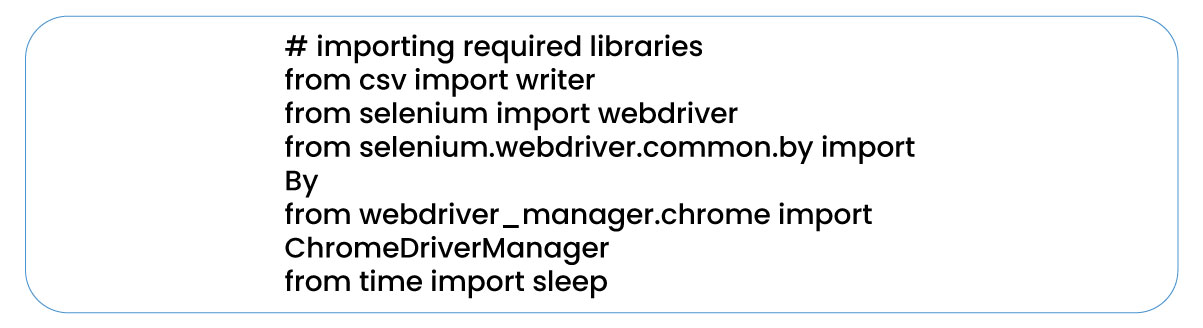

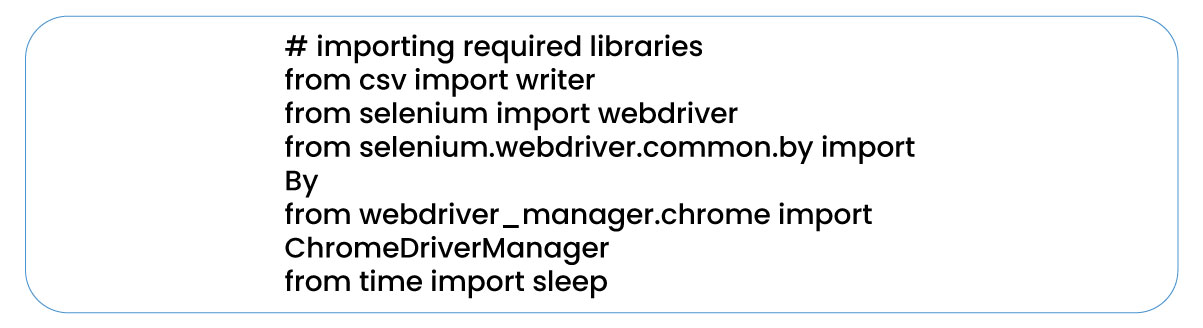

Import the Necessary Libraries

Once we've established the attributes to extract, the coding process for

scraping AliExpress can commence. We'll utilize Selenium, a powerful tool for automating web

browser actions, to achieve this. Our Aliexpress scraper will encompass several essential

libraries, ensuring a seamless execution of our scraping task. These libraries include:

Selenium WebDriver: This robust tool is the backbone of web

automation, enabling actions such as button clicks, form filling, and website navigation.

ChromeDriverManager: This library simplifies downloading and

installing the Chrome driver, an essential component for Selenium to effectively control the

Chrome web browser.

By Class (from selenium.webdriver.common.by): It's a vital utility

for locating elements on web pages, employing various strategies like ID, class name, XPath, and

more.

Writer Class (from the csv library): We'll harness this class for

reading and writing tabular data in CSV format, facilitating the storing and organizing of our

scraped data.

These libraries collectively empower us to automate web interactions, extract data

efficiently, and manage the scraped information systematically.

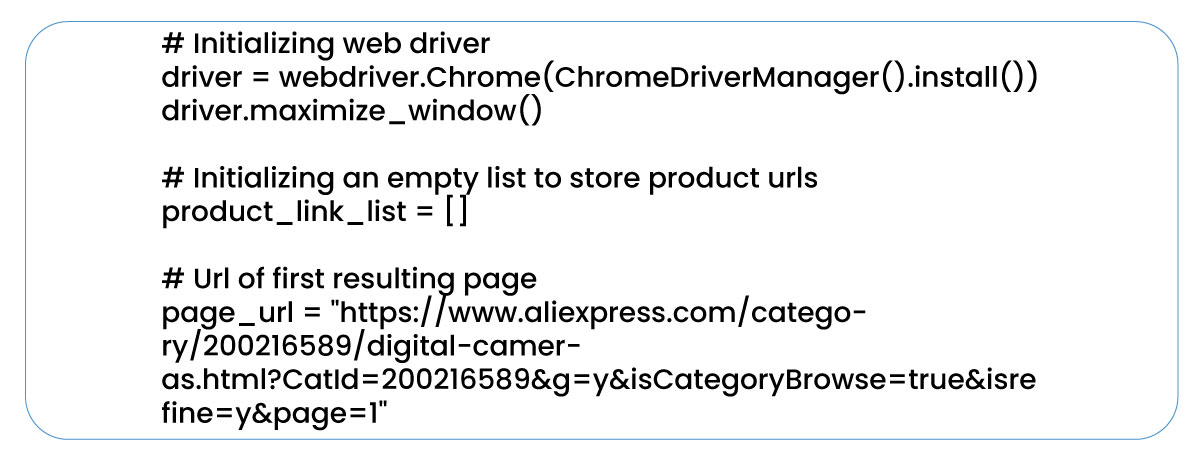

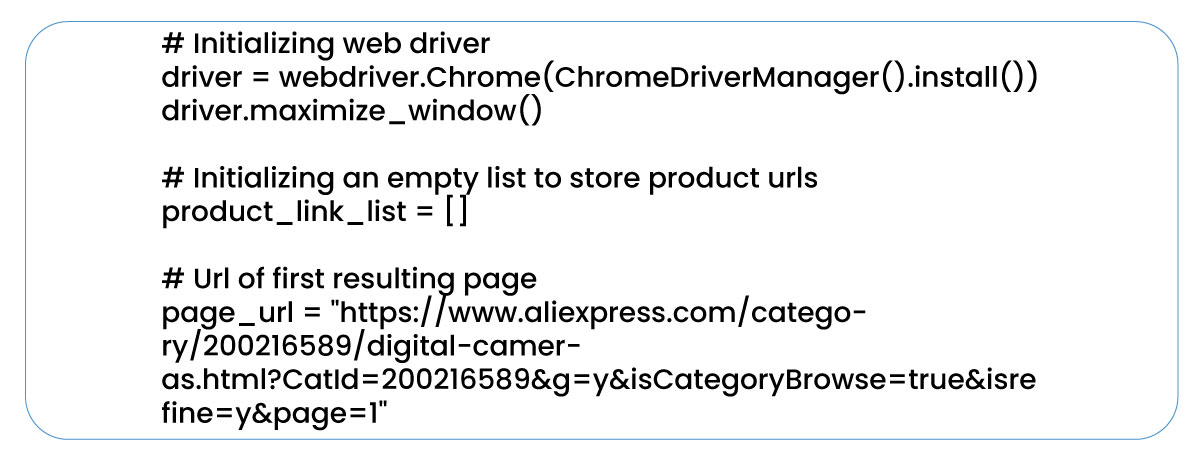

Initialization Process

After importing the necessary libraries, performing some essential initialization steps is crucial before we can proceed with scraping digital camera data from AliExpress. Here's a breakdown of this initialization procedures:

Web Driver Initialization: We begin by initializing a web driver. Accomplish it by creating an instance of the Chrome web driver using the ChromeDriverManager method. This step establishes a connection between our code and a Chrome web browser, enabling Selenium to interact with it effectively. Additionally, we maximize the browser window using the maximize_window() function for optimal visibility and interaction.

Product Link List: To store the links of digital camera products that we'll scrape from various pages, we initialize an empty list named product_link_list. This list will gradually accumulate all the product links we extract during scraping.

Page URL Initialization: To kickstart our scraping journey, we define a variable called page_url. This variable will hold the web page URL we are currently scraping. Initially, we set it to the link of the first page of search results for digital cameras. Update this variable to reflect the current URL as we progress through the pages.

With these initializations in place, we're well-prepared to scrape digital camera data from AliExpress.

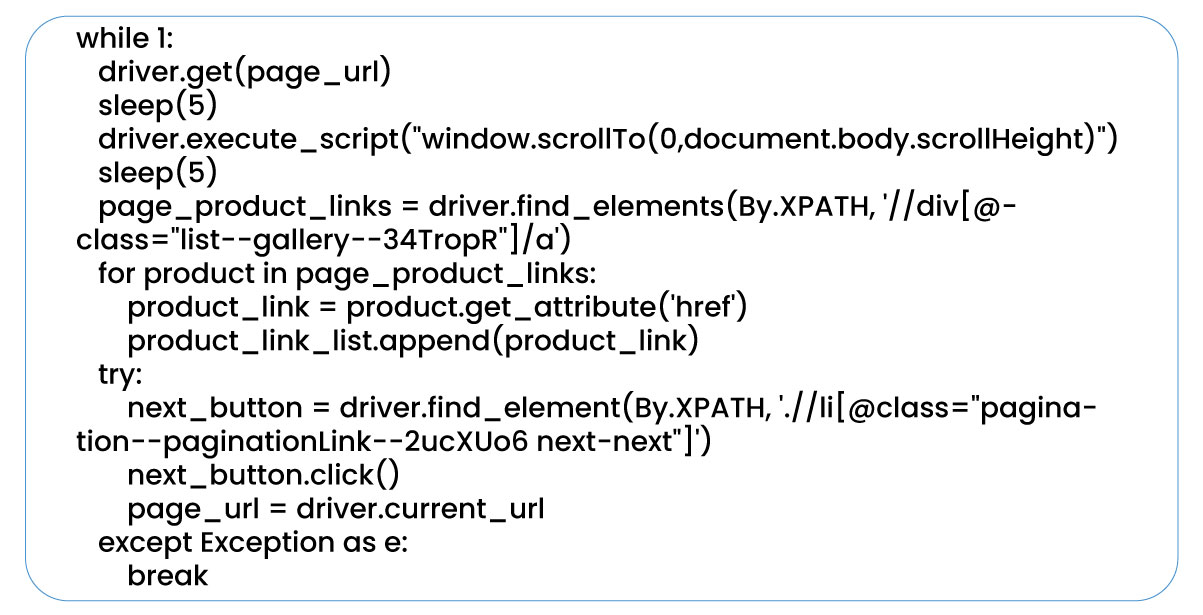

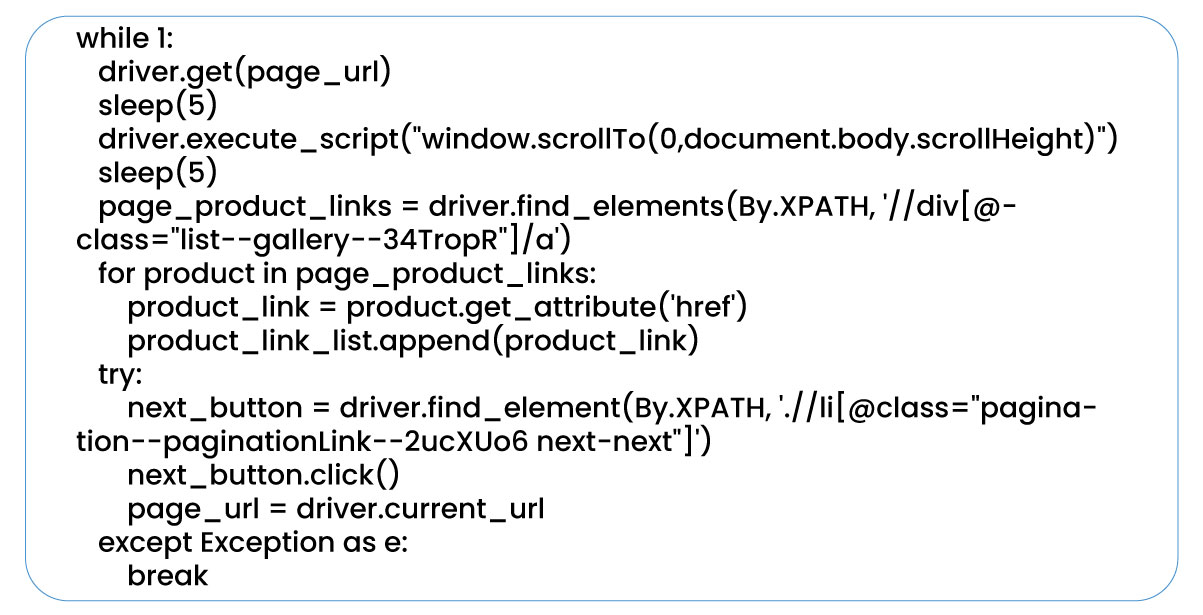

Extraction of Product URLs

As previously outlined, our initial task involves scraping the links of digital camera products from all the resulting pages generated by our search on AliExpress. Hence, the e-commerce data scraping services employ a while loop to achieve this dynamic process until we've traversed all the available pages. Here's the code that facilitates this operation:

Within the while loop, our Aliexpress data scraping services unfold methodically. We commence by invoking the get() function with page_url as its parameter. This function, predefined for web browsing, opens the specified URL. To cater to AliExpress's dynamic content loading mechanism, we employ the execute_script("window.scrollTo(0,document.body.scrollHeight)"). This script is crucial because AliExpress initially loads only a portion of the webpage's content. To trigger the loading of all products on the page, we simulate scrolling, prompting the website to load additional content dynamically.

With the webpage fully loaded, our next objective is to extract the product links. To achieve this, we utilize the find_elements() function, specifying the XPATH and employing the By class to locate the product link elements. Gather these elements as a list. To obtain the actual product links from these elements, we iterate through the list, invoking the get_attribute method for each element to retrieve its 'href' property. Aggregate these links into the product_link_list.

Our journey continues as we navigate to the subsequent page of results. Each page features a 'next' button at its conclusion, facilitating the transition to the next page. We locate this button using its XPATH and store it as next_button. Applying the click() function to this variable triggers the button's action, advancing us to the following page. The current_url function then retrieves the URL of the new page, which is available to the page_url variable.

However, the 'next' button is absent on the last page, leading to an error when locating it. Manage this situation gracefully by exiting the while loop, signifying the successful completion of our scraping endeavor. At this point, the product_link_list contains a comprehensive collection of links to all the scraped products, providing us with a valuable dataset for further analysis and insights.

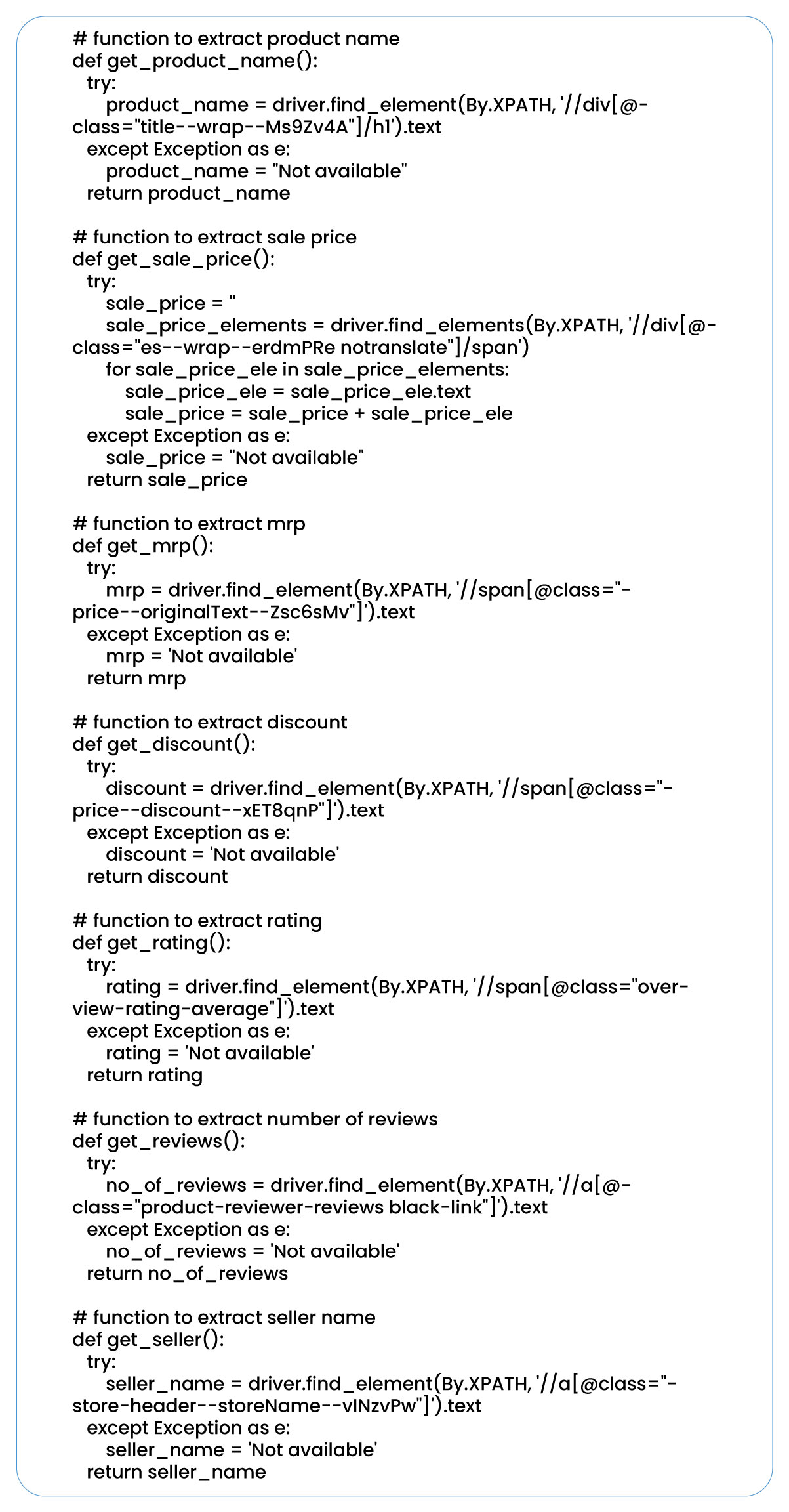

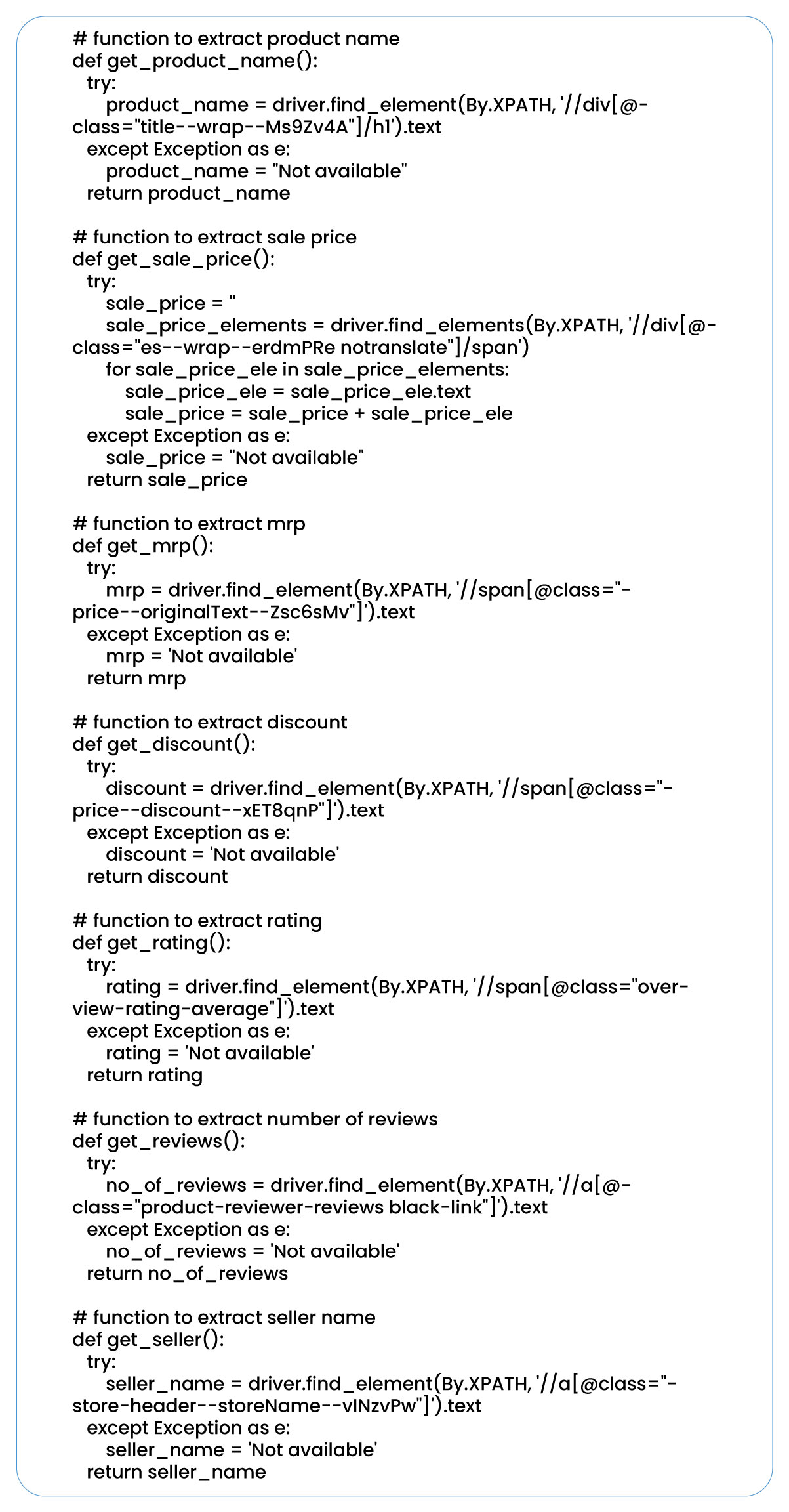

Define Functions

Our next step involves defining functions to extract specific attributes from the product pages.

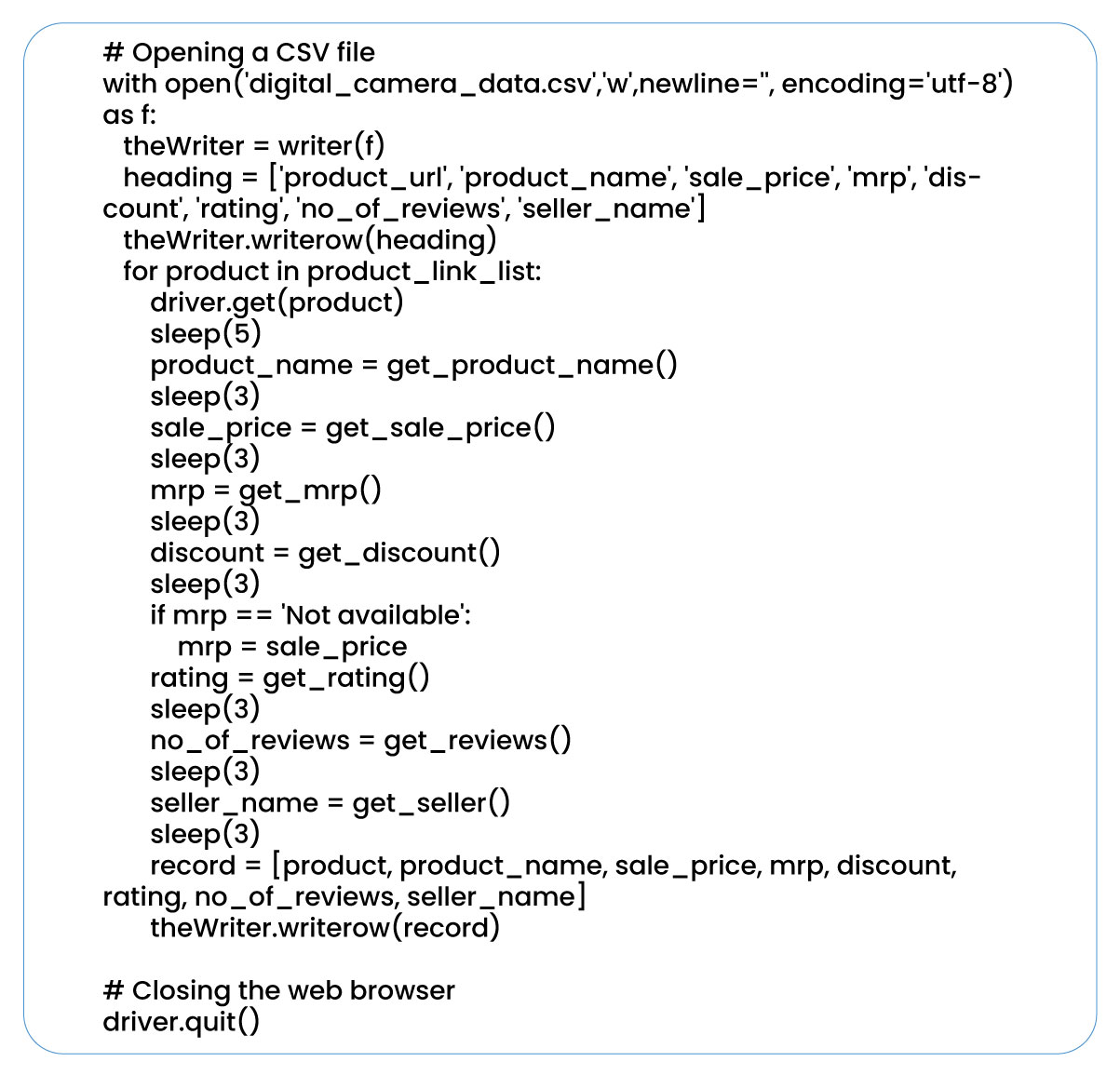

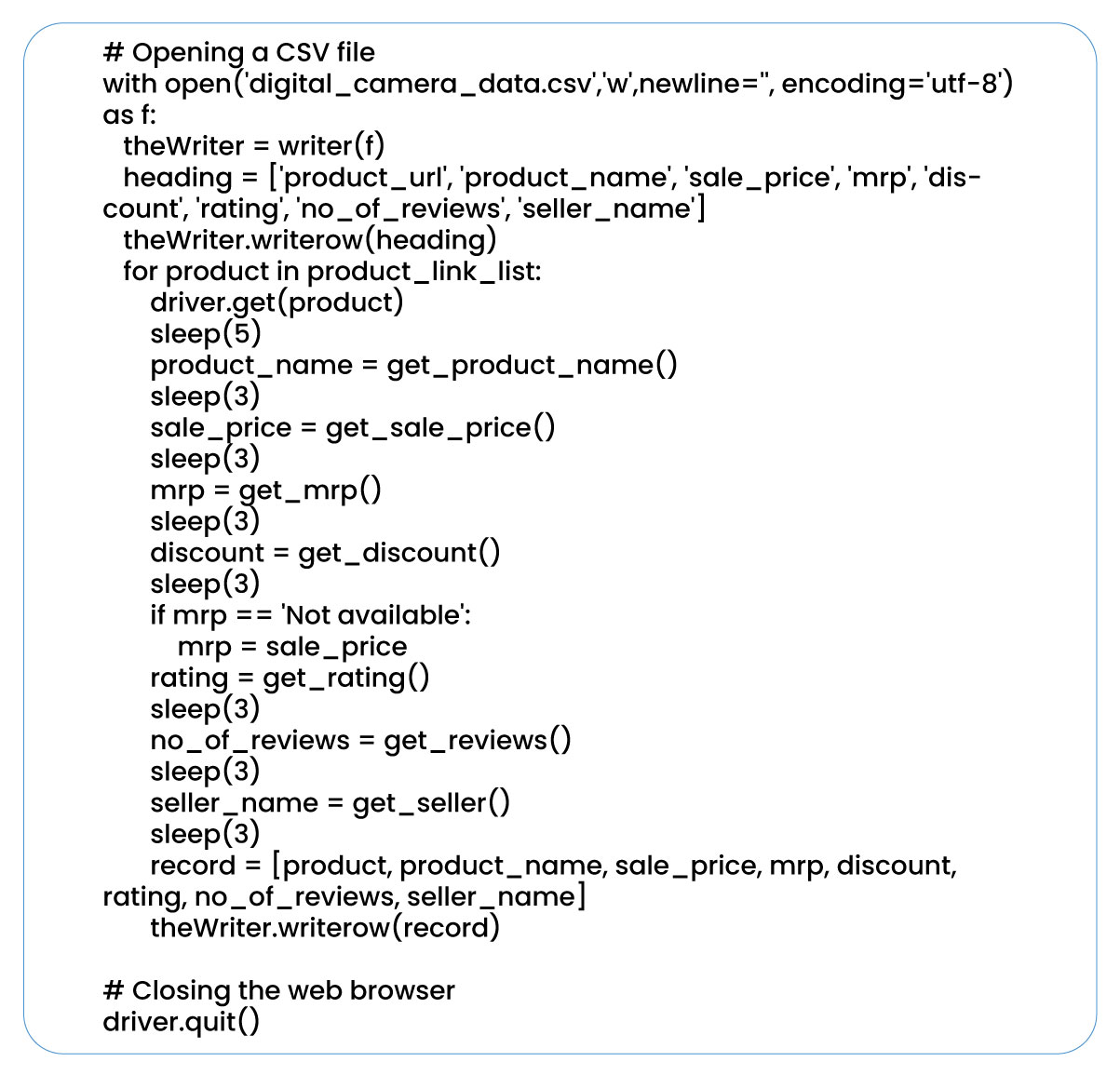

Writing to a CSV File

To efficiently store the extracted data for future use, we employ a structured process of saving it to a CSV file.

Here's a breakdown of the essential steps involved:

File Initialization: We initiate the process by opening a file named "digital_camera_data.csv" in write mode. To facilitate this, we create an object of the writer class called theWriter.

Column Headers: We begin by initializing the column headers, representing various data attributes, as a list. These headers are crucial for correctly organizing and labeling the data within the CSV file. We then employ the writerow() function to write these headers to the CSV file, ensuring that each column is appropriately named.

Data Extraction and Storage: The core of the process involves iterating through the product links stored in product_link_list. We utilize the get() function and the previously defined attribute-extraction functions for each product link to obtain the necessary product details. Store these extracted attribute values as a list.

Data Writing: To preserve the extracted data systematically, we write the attribute values for each product into the CSV file using the writerow() function. This sequential writing process ensures that each product's information occupies its respective row in the CSV file.

Browser Closure: Once all the necessary data has been extracted and stored, we invoke the quit() command to gracefully close the web browser opened by the Selenium web driver. It ensures proper termination of the scraping process.

Sleep Function: The sleep() function is strategically inserted between various function calls to introduce pauses or delays in the program's execution. These pauses help prevent potential blocking by the website and ensure smoother scraping operations.

Conclusion: In this blog, we have delved into the intricate process of extracting digital camera data from AliExpress, harnessing the capabilities of robust Python libraries and techniques. This harvested data holds immense significance, serving as a valuable resource for understanding market dynamics and the ever-evolving e-commerce realm. Its utility extends to businesses seeking to monitor pricing trends, gain competitive insights, and gauge customer sentiments, making it a crucial asset in online commerce.

.webp)