
Introduction

In today’s data-driven grocery ecosystem, retailers and analytics teams face a crucial decision: keep relying on traditional scraping methods or shift to API-based data collection. This report compares manual scraping with grocery data APIs across accuracy, scalability, operational effort, and long-term cost efficiency.

With growing SKU counts, dynamic pricing models, and omnichannel competition, modern businesses increasingly depend on real-time, reliable data streams. Solutions powered by a Web Data Intelligence API now offer structured access to pricing, inventory, and promotion insights, delivering data far faster and more reliably than legacy scraping techniques.

From 2020 to 2026, grocery data usage has expanded by more than 240%, driven by demand forecasting, price intelligence, and automated merchandising. This report provides a comprehensive comparison to help organizations choose the most sustainable and scalable data extraction approach for 2026 and beyond.

Evolving Data Collection Approaches in Grocery Retail

Comparing manual scraping with grocery APIs for price intelligence shows how much grocery analytics has changed over the past six years. Early-stage businesses often relied on scripts to capture pricing data from competitor websites, but as SKU counts grew, keeping that data accurate became increasingly difficult. Today, structured API access to a grocery store dataset enables faster and more reliable decision-making.

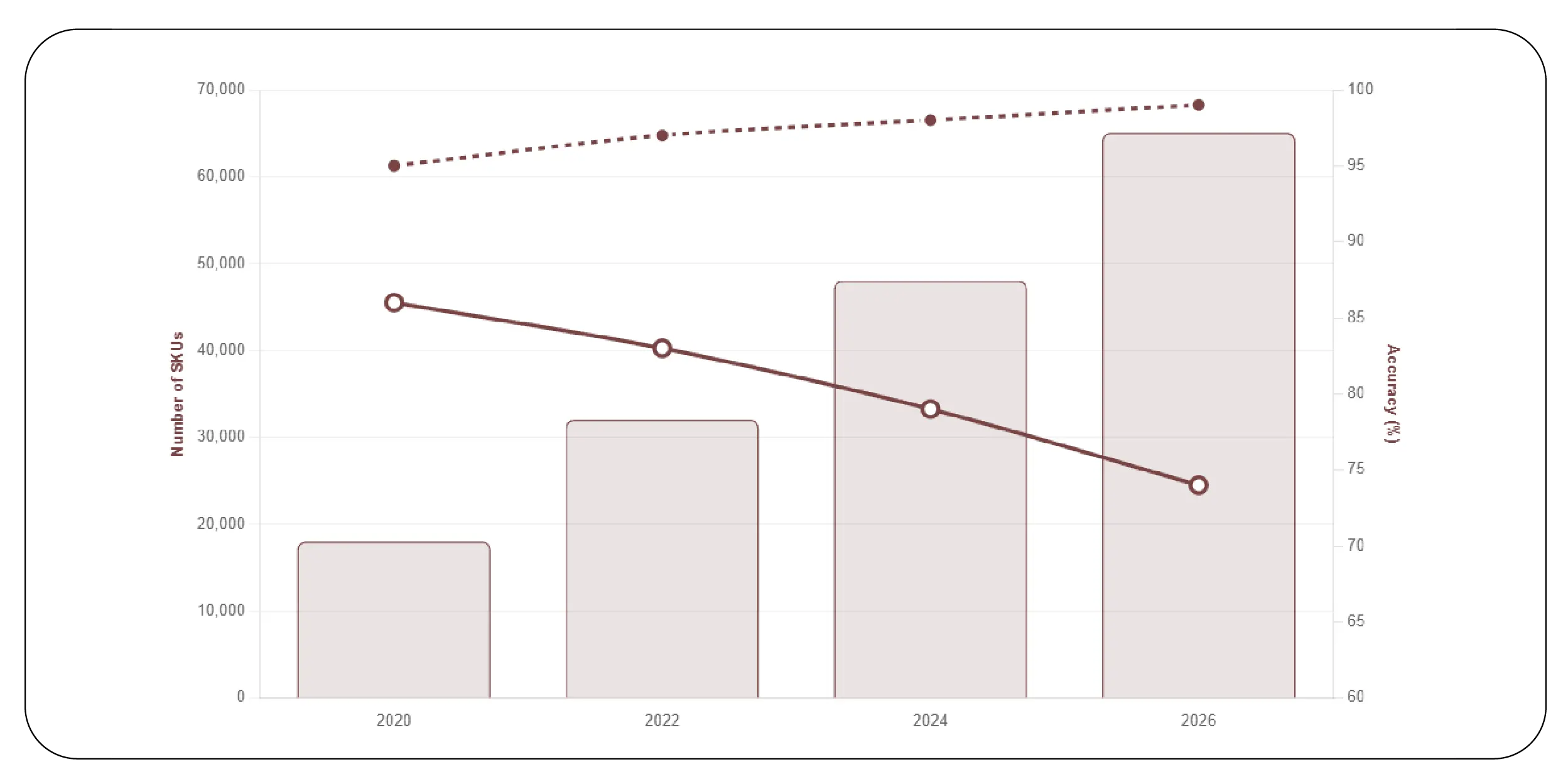

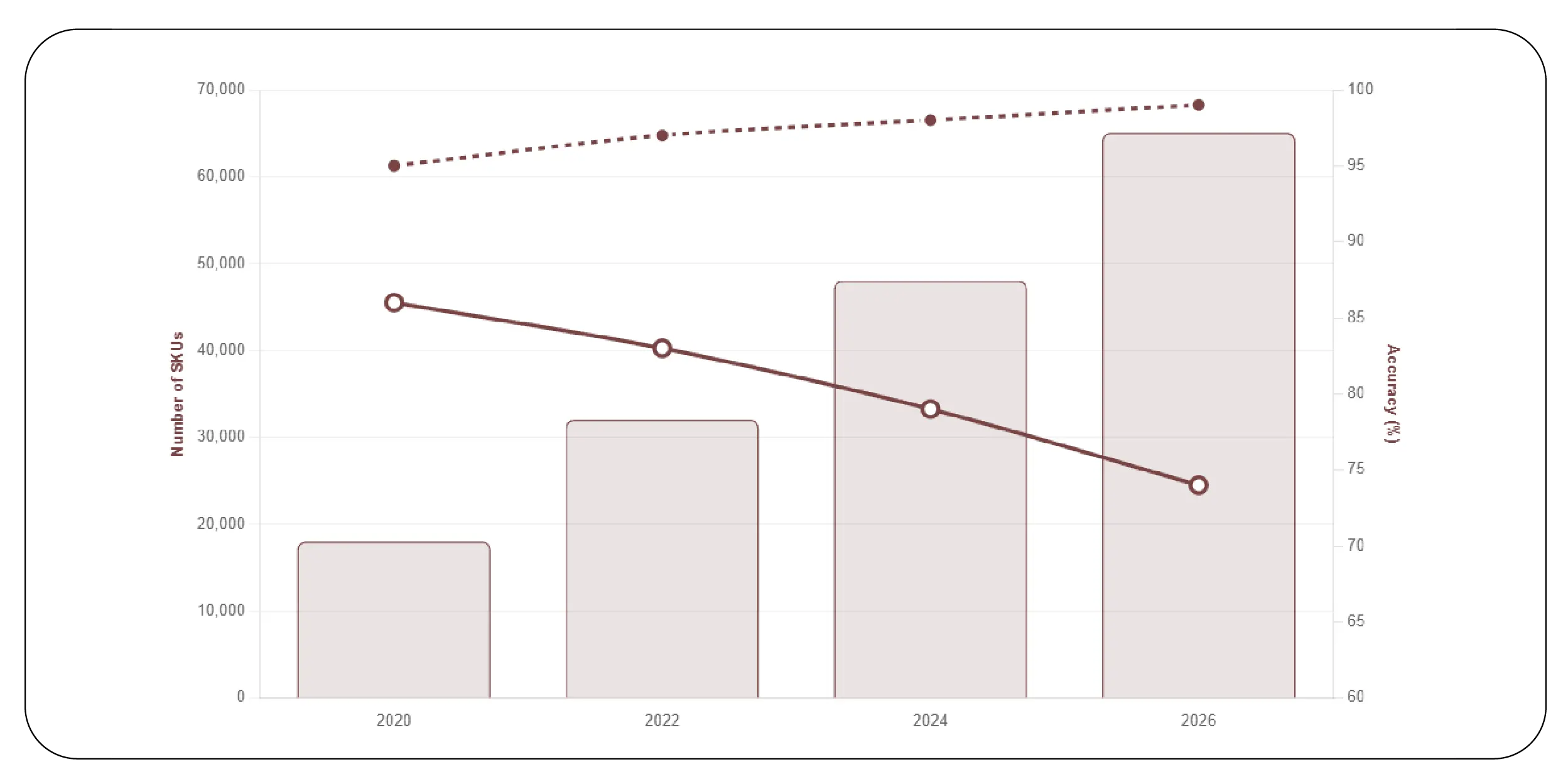

Between 2020 and 2026, grocery retailers expanded digital catalogs from an average of 18,000 SKUs to over 65,000 SKUs. This shift made manual scraping resource-intensive and error-prone.

| Year | Avg. SKUs Monitored | Manual Scraping Accuracy | API Accuracy |
|---|---|---|---|
| 2020 | 18,000 | 86% | 95% |
| 2022 | 32,000 | 83% | 97% |
| 2024 | 48,000 | 79% | 98% |
| 2026 | 65,000 | 74% | 99% |
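Accuracy percentages are easier to weigh when translated into record counts. The sketch below assumes (our reading, not something the table states) that "accuracy" is the share of individual SKU records captured correctly in one full refresh; the function name `expected_bad_records` is hypothetical.

```python
# (avg. SKUs monitored, manual scraping accuracy, API accuracy) per year,
# copied from the table above.
ACCURACY_BY_YEAR = {
    2020: (18_000, 0.86, 0.95),
    2022: (32_000, 0.83, 0.97),
    2024: (48_000, 0.79, 0.98),
    2026: (65_000, 0.74, 0.99),
}


def expected_bad_records(skus: int, accuracy: float) -> int:
    """Expected number of inaccurate records in one full catalog refresh.

    Assumes accuracy is the share of individual SKU records captured correctly.
    """
    return round(skus * (1 - accuracy))


for year, (skus, manual_acc, api_acc) in ACCURACY_BY_YEAR.items():
    manual_bad = expected_bad_records(skus, manual_acc)
    api_bad = expected_bad_records(skus, api_acc)
    print(f"{year}: manual ~{manual_bad:,} bad records, API ~{api_bad:,}")
```

Under this reading, the 2026 row works out to roughly 16,900 inaccurate records per refresh for manual scraping versus about 650 for the API.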

Retailers using APIs report faster data refresh cycles, averaging updates every 15 minutes, compared to 6–12 hour refresh cycles in manual systems. This performance gap directly impacts pricing accuracy, promotional effectiveness, and inventory forecasting across omnichannel operations.
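The refresh gap can be expressed as refreshes per day and average data age. This is a minimal sketch, assuming reads are spread evenly between refreshes so the data a reader sees is, on average, half a refresh interval old; the function names are illustrative.

```python
from datetime import timedelta

ONE_DAY = timedelta(days=1)


def refreshes_per_day(interval: timedelta) -> float:
    """How many full refreshes fit into one day at the given interval."""
    return ONE_DAY / interval


def mean_staleness(interval: timedelta) -> timedelta:
    """Average age of the data a reader sees, assuming reads are spread
    uniformly between refreshes (half the refresh interval)."""
    return interval / 2


for label, interval in [
    ("API", timedelta(minutes=15)),
    ("Manual, 6-hour cycle", timedelta(hours=6)),
    ("Manual, 12-hour cycle", timedelta(hours=12)),
]:
    print(f"{label}: {refreshes_per_day(interval):.0f} refreshes/day, "
          f"average data age {mean_staleness(interval)}")
```

A 15-minute cycle gives 96 refreshes per day with data averaging 7.5 minutes old, against 2 to 4 refreshes and an average age of 3 to 6 hours for the manual cycles.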

Understanding the True Cost of Data Collection

A detailed cost analysis of grocery data APIs vs manual scraping reveals that initial setup costs can be misleading. While manual scraping appears cheaper in year one, maintenance, infrastructure, and staffing costs grow rapidly as data volume increases.

Between 2020 and 2026, the average monthly cost of manual scraping rose from $4,100 to $11,600 (about 183%), driven by anti-bot defenses, site changes, and higher compute usage. Over the same period, API costs rose from $1,700 to $2,600 (about 53%), kept in check by scalable pricing models.

| Cost Category (Monthly Avg.) | Manual Scraping 2020 | Manual Scraping 2026 | API 2020 | API 2026 |
|---|---|---|---|---|
| Infrastructure | $1,200 | $3,800 | $800 | $1,200 |
| Engineering Time | $2,000 | $5,500 | $600 | $900 |
| Data Validation | $900 | $2,300 | $300 | $500 |
| Total | $4,100 | $11,600 | $1,700 | $2,600 |
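The totals and growth rates can be re-derived directly from the category rows. The sketch below simply encodes the table above; the dictionary layout and function names are our own illustrative choices, and "annual difference" assumes the 2026 monthly figures hold for all twelve months.

```python
# Monthly cost rows copied from the table above: (2020, 2026) in US dollars.
COSTS = {
    "Infrastructure": {"manual": (1_200, 3_800), "api": (800, 1_200)},
    "Engineering Time": {"manual": (2_000, 5_500), "api": (600, 900)},
    "Data Validation": {"manual": (900, 2_300), "api": (300, 500)},
}


def totals(method: str) -> tuple[int, int]:
    """Sum every cost category for one method, returning (2020 total, 2026 total)."""
    start = sum(row[method][0] for row in COSTS.values())
    end = sum(row[method][1] for row in COSTS.values())
    return start, end


def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100


for method in ("manual", "api"):
    start, end = totals(method)
    print(f"{method}: ${start:,} -> ${end:,} ({pct_change(start, end):.0f}% change)")

# Annualized gap between the two approaches at 2026 monthly rates.
manual_2026, api_2026 = totals("manual")[1], totals("api")[1]
print(f"2026 annual difference: ${(manual_2026 - api_2026) * 12:,}")
```

Keeping the figures in one structure means the totals row and any growth percentages quoted in the text can be recomputed whenever a category figure changes.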

Organizations migrating to API-based extraction report average annual savings of 38% by year three, primarily due to reduced downtime and automation of validation processes.

Real-World Data Collection at Scale

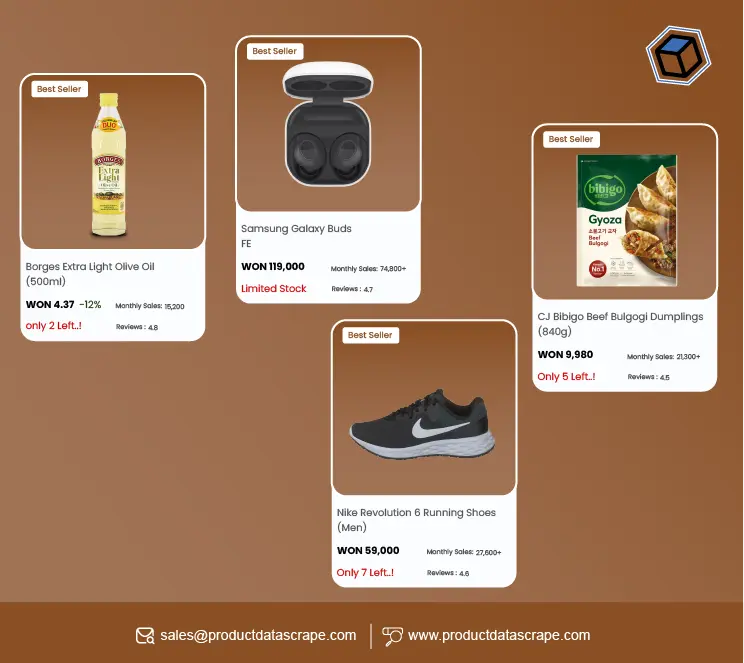

Enterprises extracting supermarket data often benchmark methods against high-volume workloads such as Scraping ASDA Supermarket Product Data to compare outcomes. Large retailers require near-perfect accuracy across pricing, promotions, and stock levels, which makes manual approaches increasingly unsustainable.

Between 2020 and 2026, ASDA product listings expanded from 22,000 to over 58,000 SKUs. Manual scraping teams required an average of 6 engineers to maintain pipelines, while API-driven workflows required only 1–2 specialists.

| Metric | Manual Scraping | API-Based Extraction |
|---|---|---|
| Avg. Downtime per Month | 18 hours | 2 hours |
| Data Loss Incidents per Year | 14 | 2 |
| Update Frequency | 2–3 per day | Every 15 minutes |
| Error Rate | 21% | 3% |
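Monthly downtime and yearly incident counts translate into availability and incident spacing. This is a rough sketch, assuming an average month of 730 hours and incidents spread evenly across the year; both are simplifications, and the function names are hypothetical.

```python
# Average hours in a month: 365 days * 24 hours / 12 months (an assumption;
# real months vary between 672 and 744 hours).
HOURS_PER_MONTH = 365 * 24 / 12


def availability_pct(downtime_hours: float, period_hours: float = HOURS_PER_MONTH) -> float:
    """Share of the period during which the pipeline was delivering data."""
    return 100 * (1 - downtime_hours / period_hours)


def mean_days_between(incidents_per_year: float) -> float:
    """Average gap between incidents, assuming they are spread evenly over a year."""
    return 365 / incidents_per_year


for label, downtime, incidents in [("Manual scraping", 18, 14), ("API-based", 2, 2)]:
    print(f"{label}: {availability_pct(downtime):.2f}% availability, "
          f"a data-loss incident every ~{mean_days_between(incidents):.0f} days")
```

On these assumptions, 18 hours of monthly downtime is roughly 97.5% availability versus about 99.7% for 2 hours, and data-loss incidents arrive roughly every 26 days instead of about every six months.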

Retailers using APIs improved promotion tracking accuracy by 42%, directly improving campaign ROI and shelf competitiveness across digital channels.

Risk Exposure and Operational Stability

Comparing the operational costs and risks of manual scraping and APIs shows that, beyond expense, risk management is a major factor. Manual scraping faces constant threats from IP blocking, CAPTCHA enforcement, and frequent site redesigns, and each disruption means lost data coverage and delayed business decisions.

From 2020 to 2026, compliance-related risks also increased as organizations faced tighter data governance standards. API-based solutions significantly reduce exposure by offering compliant, structured access models.

| Risk Factor | Manual Scraping | API-Based |
|---|---|---|
| Legal & Compliance Risk | High | Low |
| System Downtime | Frequent | Rare |
| Maintenance Dependency | High | Minimal |
| Scalability Limit | Medium | Unlimited |

Enterprises adopting APIs reported 63% fewer operational incidents and 51% faster deployment of new data pipelines compared to traditional scraping environments.

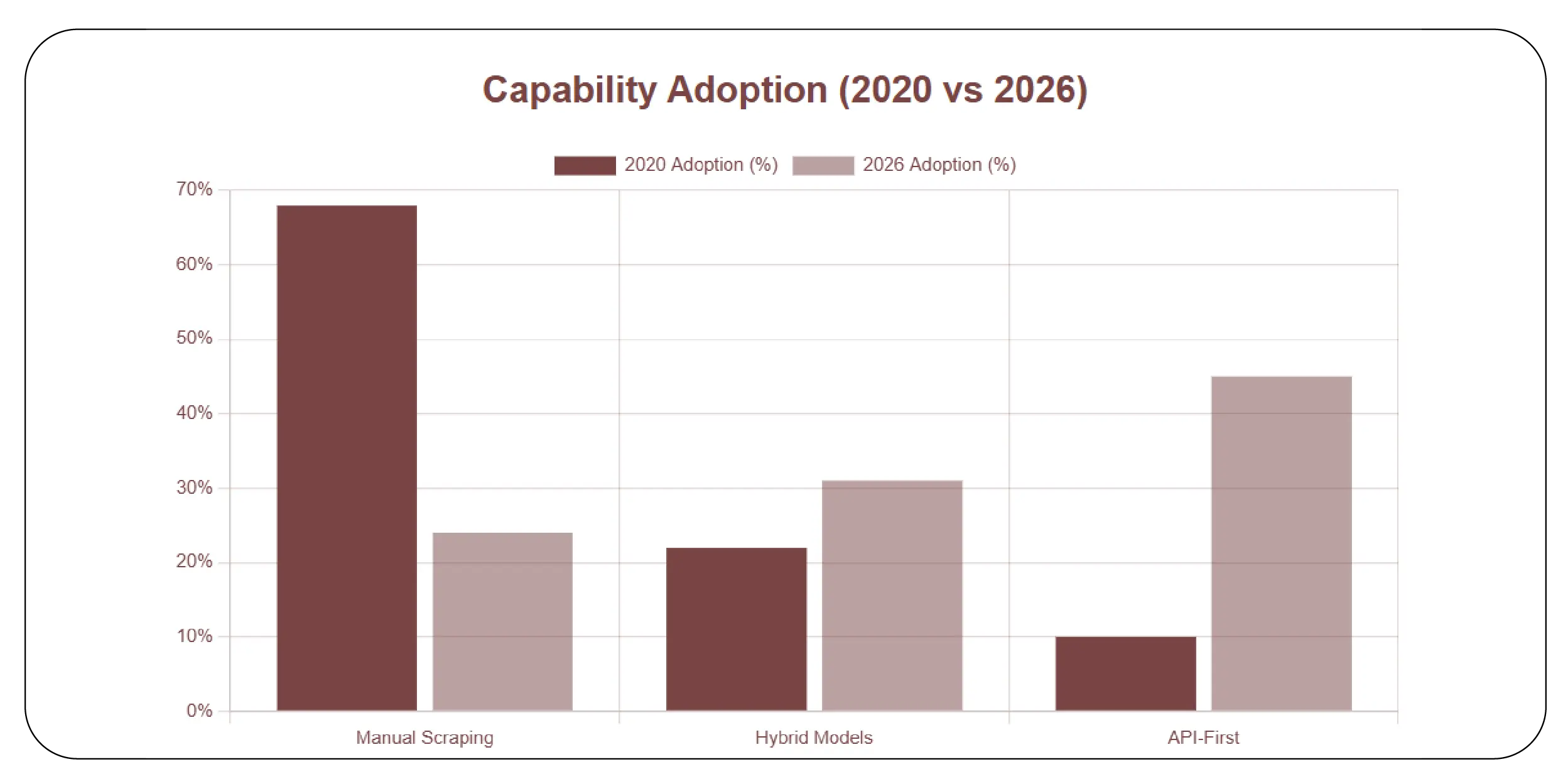

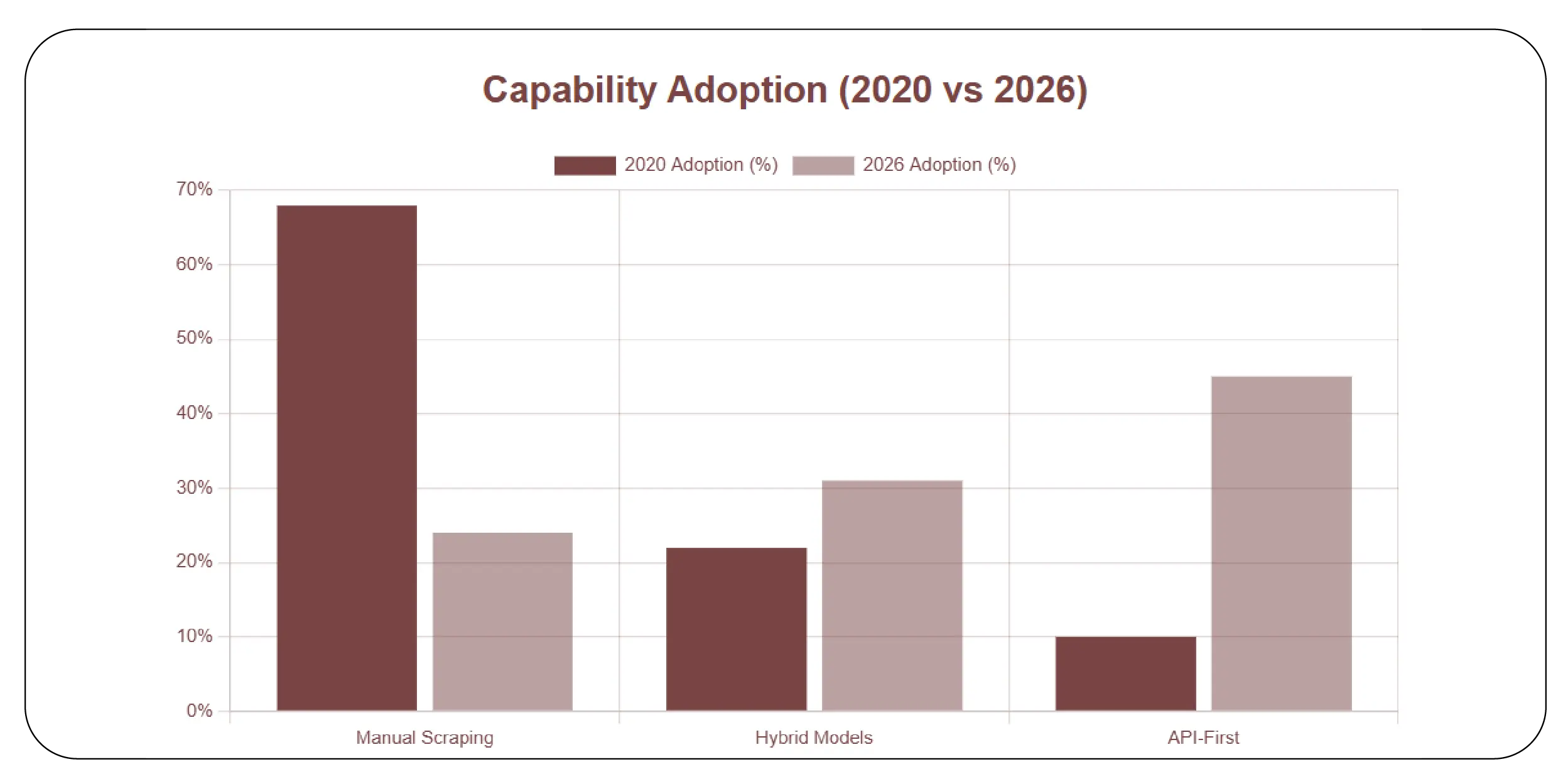

What 2026 Reveals About Grocery Data Strategies

A forward-looking 2026 analysis of grocery data extraction indicates that automation and AI-readiness now define competitive advantage. Retailers no longer want raw data; they need structured feeds that plug directly into pricing engines, forecasting tools, and BI platforms.
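To make "structured feed" concrete, here is a minimal sketch of one normalized price record and a validator that rejects malformed rows before they reach downstream tools. The field names (`sku`, `retailer`, `price`, `promo_price`, `in_stock`, `captured_at`), the JSON shape, and the validation rules are all hypothetical; real grocery APIs define their own schemas.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class PriceRecord:
    """One normalized observation from a structured grocery feed (hypothetical schema)."""
    sku: str
    retailer: str
    price: Decimal
    promo_price: Optional[Decimal]
    in_stock: bool
    captured_at: datetime


def parse_record(raw: dict) -> PriceRecord:
    """Validate one raw JSON object and convert it into a PriceRecord."""
    # Go through str() so JSON floats like 3.49 become exact decimals.
    price = Decimal(str(raw["price"]))
    promo = raw.get("promo_price")
    promo_price = Decimal(str(promo)) if promo is not None else None
    in_stock = raw["in_stock"]
    if price <= 0:
        raise ValueError(f"non-positive price for SKU {raw['sku']}")
    if promo_price is not None and promo_price > price:
        raise ValueError(f"promo price above shelf price for SKU {raw['sku']}")
    if not isinstance(in_stock, bool):
        raise ValueError(f"in_stock must be a boolean for SKU {raw['sku']}")
    return PriceRecord(
        sku=str(raw["sku"]),
        retailer=str(raw["retailer"]),
        price=price,
        promo_price=promo_price,
        in_stock=in_stock,
        captured_at=datetime.fromisoformat(raw["captured_at"]),
    )


payload = ('{"sku": "12345", "retailer": "example-store", "price": 3.49, '
           '"promo_price": 2.99, "in_stock": true, '
           '"captured_at": "2026-01-15T09:30:00+00:00"}')
record = parse_record(json.loads(payload))
print(record.sku, record.price, record.promo_price, record.in_stock)
```

Using `Decimal` rather than floats avoids rounding drift when prices feed pricing engines, and rejecting bad rows at the boundary keeps BI dashboards from silently absorbing them.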

Between 2020 and 2026, grocery analytics maturity evolved rapidly:

| Capability Level | 2020 Adoption | 2026 Adoption |
|---|---|---|
| Manual Scraping | 68% | 24% |
| Hybrid Models | 22% | 31% |
| API-First | 10% | 45% |

API-first organizations reported:

• 34% faster price change execution

• 29% improvement in stock forecasting accuracy

• 41% reduction in data reconciliation time

This shift reflects how grocery retailers increasingly prioritize reliability, compliance, and integration speed over legacy extraction methods.

Scaling Grocery Intelligence Across Millions of SKUs

Modern retailers rely on large-scale grocery SKU monitoring to manage vast assortments across physical and digital shelves. Monitoring thousands of SKUs manually introduces blind spots that undermine pricing integrity and promotional timing.

From 2020 to 2026, average enterprise grocery catalogs grew from 40,000 to 120,000 SKUs. API-based systems now support real-time synchronization across channels, ensuring price consistency and stock visibility.
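Catalog size and refresh interval together set the throughput a pipeline must sustain. The sketch below assumes every SKU is re-fetched once per cycle (no incremental or delta updates, which would lower the figure); the scenarios reuse catalog sizes and refresh windows quoted in this section, and `required_rate` is an illustrative name.

```python
def required_rate(skus: int, refresh_minutes: float) -> float:
    """SKU records per second needed to refresh every SKU once per cycle."""
    return skus / (refresh_minutes * 60)


scenarios = [
    ("Enterprise catalog, 15-minute API refresh", 120_000, 15),
    ("500,000 SKUs, 5-minute API refresh", 500_000, 5),
    ("50,000 SKUs, 6-hour manual refresh", 50_000, 6 * 60),
]
for label, skus, minutes in scenarios:
    print(f"{label}: {required_rate(skus, minutes):,.1f} records/second")
```

A 120,000-SKU catalog on a 15-minute cycle needs about 133 records per second, while a 6-hour manual cycle over 50,000 SKUs needs only about 2.3, which helps explain why manual tooling stalls as refresh targets tighten.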

| Metric | Manual Systems | API-Driven Systems |
|---|---|---|
| Max SKUs Tracked | 50,000 | 500,000+ |
| Refresh Speed | 6–12 hours | 5–15 minutes |
| Automation Coverage | 35% | 92% |
| Analytics Readiness | Low | High |

This capability enables grocery brands to shift from reactive monitoring to predictive intelligence, turning data into a strategic growth asset.
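Cross-channel price consistency, one goal of the real-time synchronization described in this section, can be checked by grouping the latest price per SKU and channel and flagging spreads. This is a minimal sketch, assuming observations arrive as `(sku, channel, price)` tuples that already hold the most recent price; the function name, tolerance parameter, SKU IDs, and channel labels are all hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def find_price_mismatches(observations, tolerance=Decimal("0.00")):
    """Return SKUs whose price differs across channels by more than `tolerance`.

    `observations` is an iterable of (sku, channel, price) tuples, assumed to
    hold the most recent price per SKU and channel.
    """
    prices = defaultdict(dict)  # sku -> {channel: price}
    for sku, channel, price in observations:
        prices[sku][channel] = price
    mismatches = {}
    for sku, by_channel in prices.items():
        spread = max(by_channel.values()) - min(by_channel.values())
        if len(by_channel) > 1 and spread > tolerance:
            mismatches[sku] = by_channel
    return mismatches


observations = [
    ("A1", "web", Decimal("2.49")),
    ("A1", "app", Decimal("2.49")),
    ("B2", "web", Decimal("4.99")),
    ("B2", "store", Decimal("5.29")),
]
print(find_price_mismatches(observations))
```

Here SKU `B2` is flagged because its web and in-store prices differ by $0.30; raising `tolerance` lets teams ignore deliberate channel-specific pricing below a chosen threshold.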

At Product Data Scrape, we help enterprises move beyond traditional collection models with advanced Commerce Intelligence solutions. Our platform simplifies the transition from legacy systems to API-driven workflows, so organizations weighing manual scraping against grocery data APIs can make smarter, future-ready decisions. We provide structured grocery datasets, real-time monitoring, and seamless integration with analytics platforms, helping retailers reduce operational costs, improve accuracy, and scale efficiently in fast-moving grocery markets.

Conclusion

As grocery ecosystems grow more complex, the choice between manual scraping and grocery data APIs is no longer theoretical: it directly affects profitability, speed, and resilience. Organizations that rely on outdated methods face rising costs, operational risks, and missed opportunities.

By adopting modern platforms that Extract Grocery & Gourmet Food Data, businesses unlock reliable, scalable intelligence that fuels smarter pricing, optimized inventory, and data-driven promotions.

Ready to future-proof your grocery analytics? Partner with Product Data Scrape today and transform how you collect, analyze, and act on market data!
