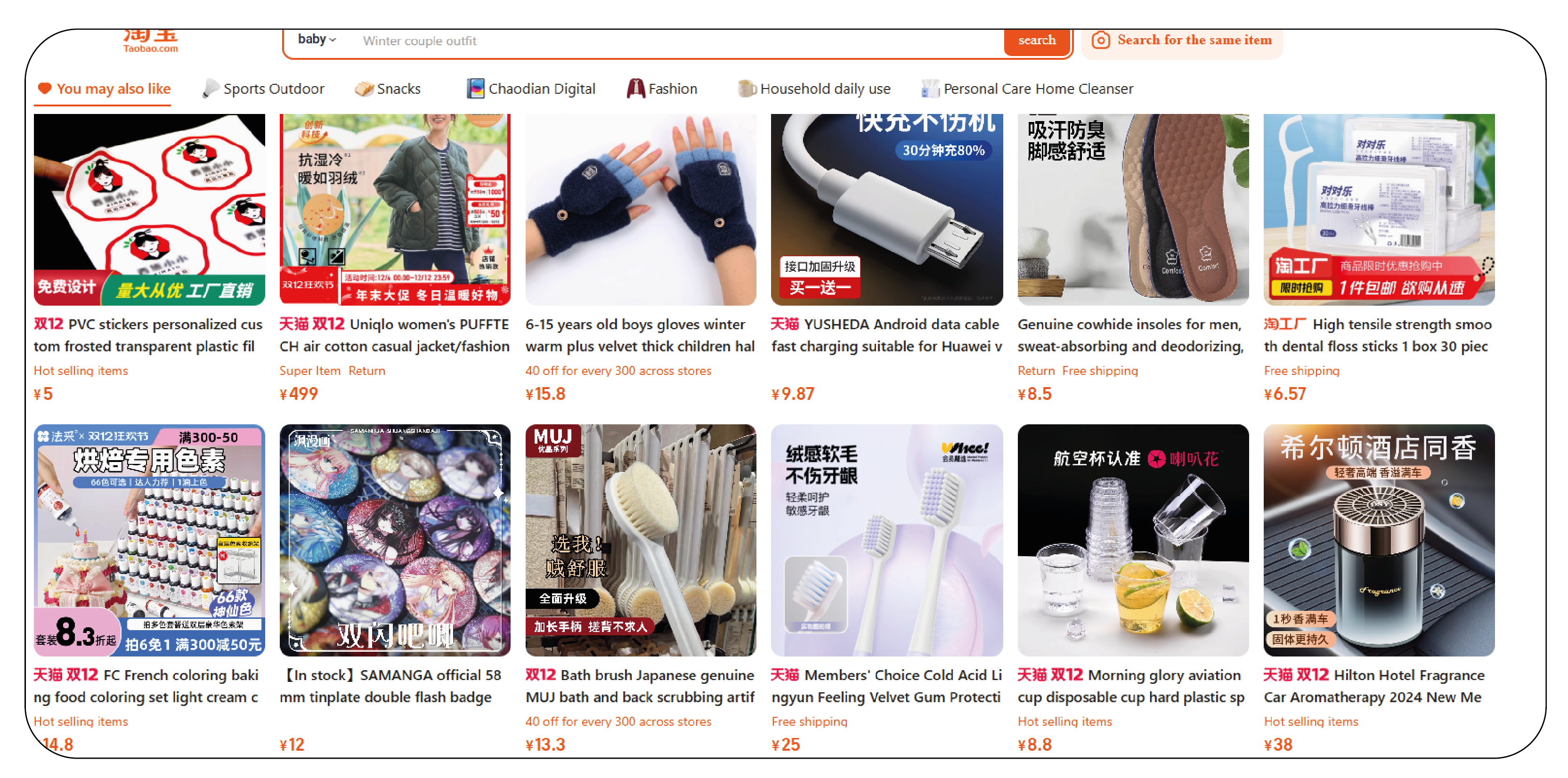

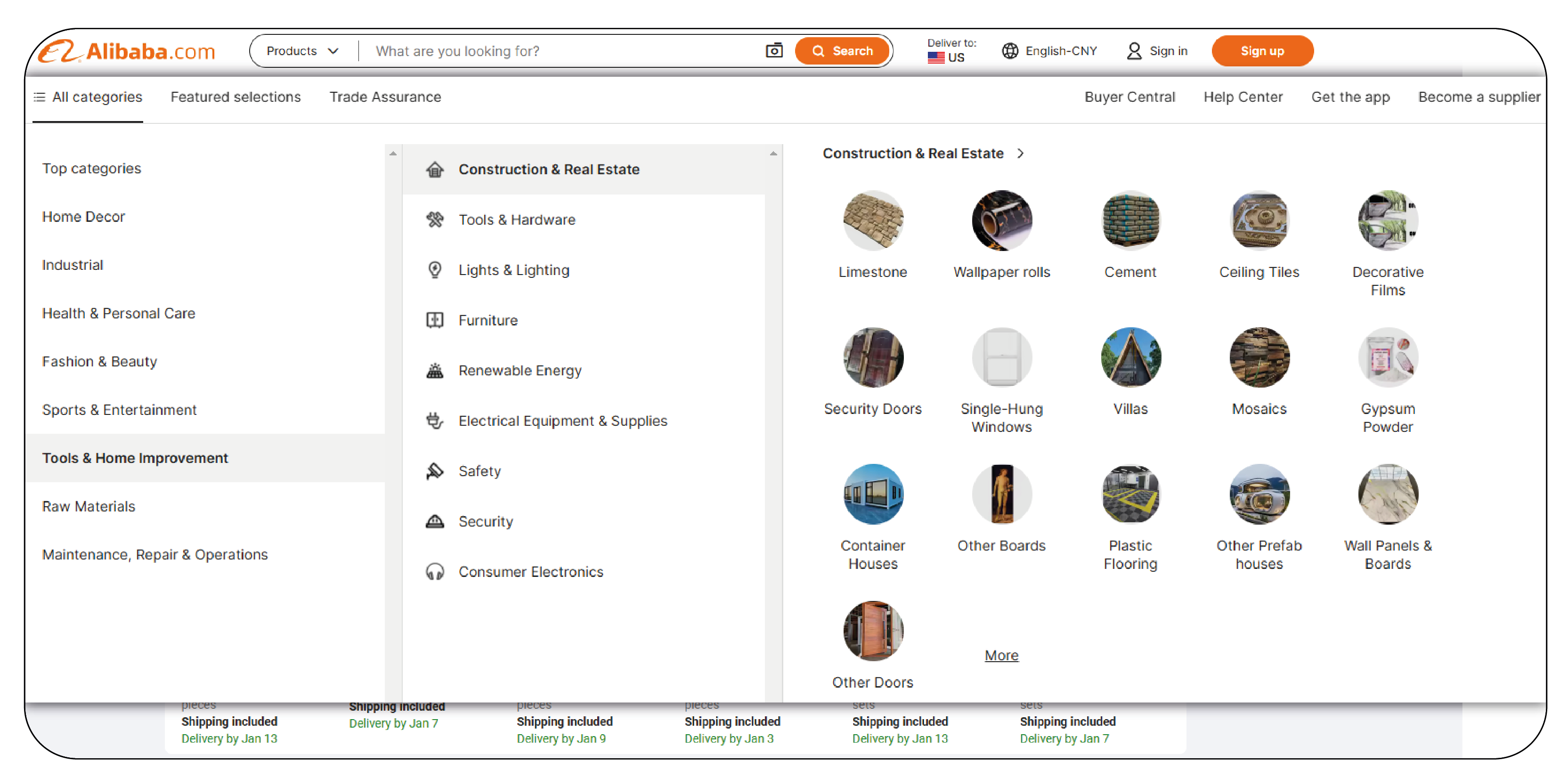

The rise of e-commerce giants such as Alibaba, JD.com, and Taobao has reshaped the global retail landscape. These platforms offer millions of products, including electronics, fashion, and consumer goods, making them valuable data sources for businesses looking to understand market trends, monitor competitors, or source products. However, scraping e-commerce data presents unique challenges because of the platforms' anti-scraping measures. A robust web scraping API that collects product search results efficiently while overcoming these challenges is essential for stable and reliable data extraction.

This article outlines the development of a scraping system that collects product search results from e-commerce platforms and delivers them through an API. The system must handle various anti-scraping mechanisms and ensure data integrity. We will explore the design of the scraping API, the use of proxies, rotating session keys (SKs), ShumeiIds, and the libraries suited to scraping search results from e-commerce apps.

By implementing these solutions, businesses can extract valuable product data, stay ahead of market trends, and optimize their operations. The ability to efficiently scrape product search results from e-commerce platforms opens up opportunities for enhanced market intelligence, competitive analysis, and better sourcing decisions.

Why Scrape E-Commerce Applications?

Scraping data from e-commerce applications offers valuable insights into consumer behavior, market trends, and competitive analysis. By extracting product details, pricing, and reviews, businesses can enhance decision-making and optimize their strategies for the market.

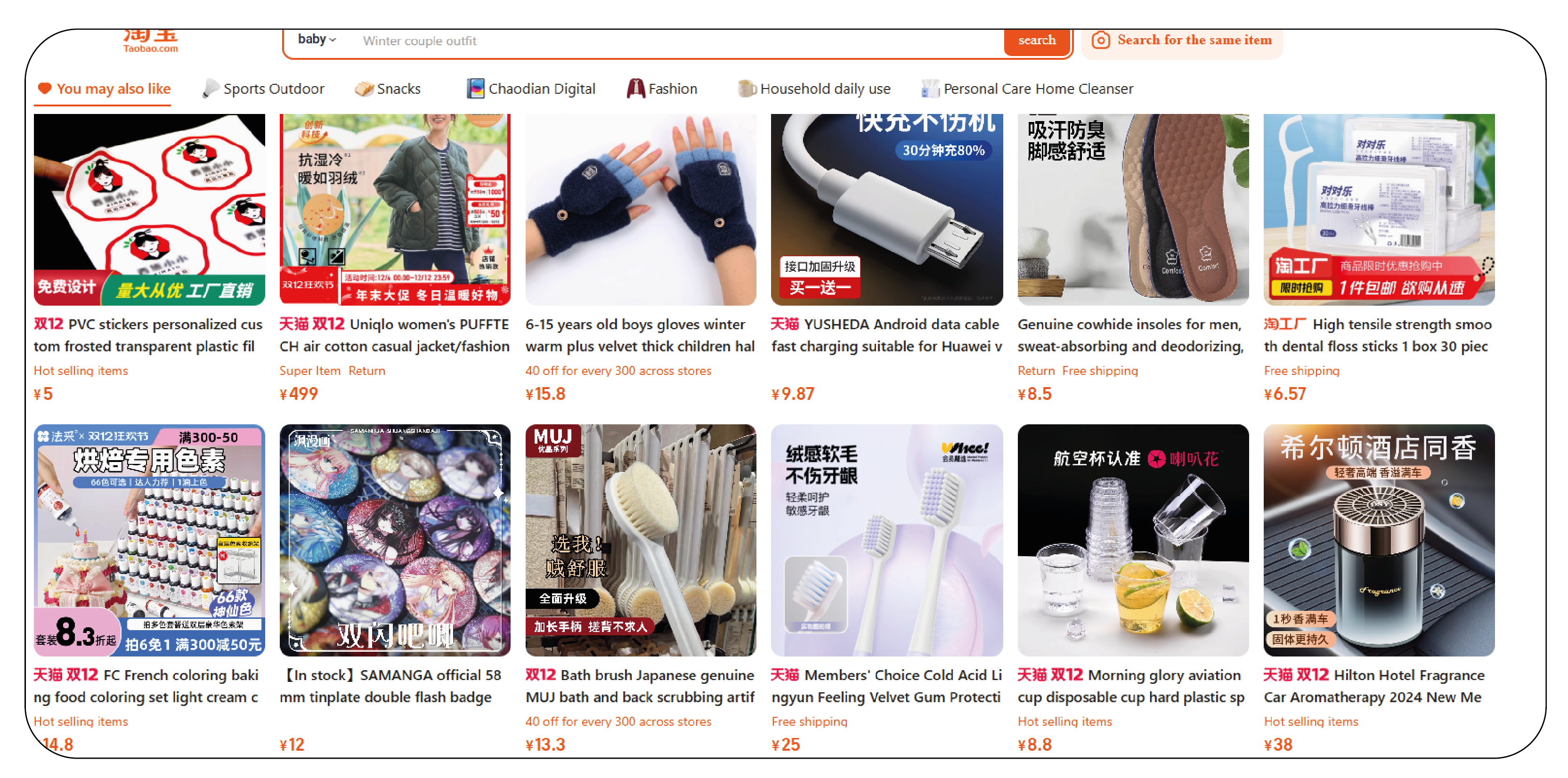

- Access to a Massive Market: E-commerce platforms like Alibaba, Taobao, JD.com, and Pinduoduo offer access to one of the largest consumer markets in the world. Scraping their search results enables businesses to gather insights about millions of products across diverse categories, such as electronics, fashion, and beauty. This data is crucial for understanding market trends, identifying in-demand products, and making data-driven business decisions, especially for companies looking to expand their market presence or source products globally.

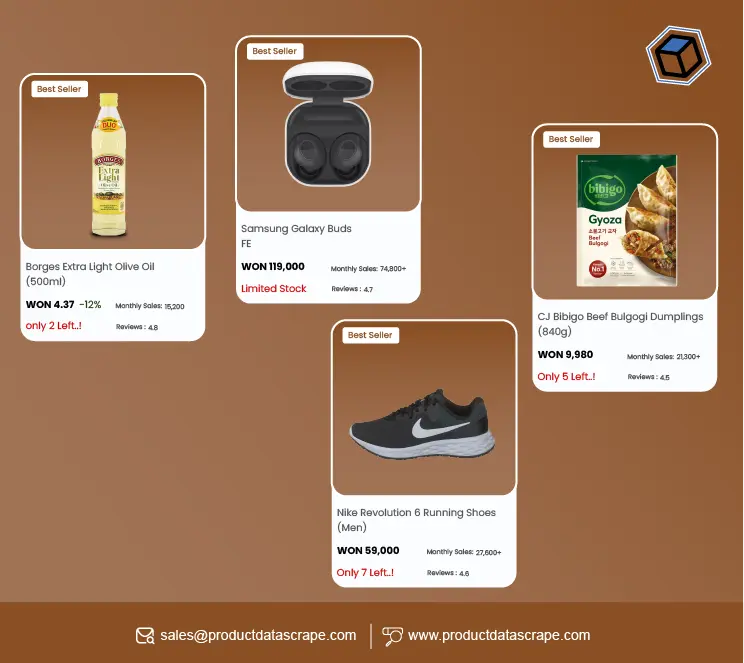

- Competitive Intelligence: Real-time product data scraping from apps allows businesses to conduct detailed competitive analysis. By gathering data on product pricing, features, customer reviews, and seller performance, companies can benchmark their offerings against competitors. This helps refine pricing strategies, identify market gaps, and discover successful product features or variations. Competitors' product catalogs can be continuously monitored to stay ahead in product innovation, customer engagement, and overall market positioning. Product data extraction from e-commerce platforms further aids in staying competitive.

- Market Trend Analysis: The e-commerce market is fast-moving, with frequent shifts in consumer preferences, seasonal trends, and emerging product categories. Scraping the app search interface of platforms like Taobao or JD.com provides real-time insights into these trends. By tracking popular products, customer reviews, and keyword search volumes, businesses can better understand consumer behavior. This information can guide inventory management, marketing strategies, and product development, helping businesses respond quickly to market demands.

- Product Sourcing and Wholesale Opportunities: Many businesses and e-commerce platforms scrape e-commerce applications to identify suppliers and manufacturers for products they wish to source. Scraping product prices from e-commerce applications helps businesses gather data on pricing, product specifications, minimum order quantities (MOQ), and shipping details. This is particularly beneficial for companies engaged in drop shipping, reselling, or wholesale operations, as they can identify profitable products and negotiate directly with manufacturers and suppliers for better deals.

- Localization and Customization: For companies operating internationally, scraping data from e-commerce platforms allows for effective localization and customization of offerings. By analyzing product descriptions, features, and customer feedback on platforms such as Taobao or Pinduoduo, businesses can better tailor their products or services to suit local tastes and preferences. This is particularly valuable for companies expanding into the global market or those seeking to customize their products for specific regional markets. Real-time product data scraping from various sites is key in this process.

- Consumer Sentiment and Reviews Analysis: Customer reviews and ratings are an essential source of feedback on product quality, customer satisfaction, and service reliability. Scraping e-commerce websites allows businesses to gather large volumes of review data, which can be analyzed for consumer sentiment. By understanding what customers like and dislike about particular products, businesses can make informed decisions on product improvements, marketing messages, and customer service strategies. This review data can also be used for competitive analysis to gauge how products on competing platforms are performing. The same data supports price monitoring, helping businesses maintain competitive pricing strategies.

Understanding the Anti-Scraping Mechanisms

Before developing a scraping solution, it is crucial to understand the anti-scraping measures implemented by e-commerce platforms. These measures include:

- Rate Limiting: Platforms can limit the requests made from a single IP address in a given time frame.

- CAPTCHAs: Websites may present CAPTCHAs to verify if the user is a human or a bot.

- Session Management: E-commerce platforms often rely on cookies, session IDs, and headers to identify and track users. Bots attempting to scrape data might be flagged based on suspicious patterns in these parameters.

- IP Blocking: Excessive requests from a single IP address may lead to IP bans or blacklisting.

- JavaScript Rendering: Some websites load their content using JavaScript, making it impossible to scrape product information using basic HTTP requests alone.

Given these anti-scraping tactics, building a robust web scraping system requires advanced techniques, including proxies, rotating session keys, handling CAPTCHAs, and managing JavaScript rendering.

Developing the Web Scraping API

The API should be built to interact with the e-commerce platform and handle all the complexities of scraping, data processing, and delivering results in a structured format. Below is an outline of the development of the scraping API in Python or Java.

Choosing the Right Programming Language and Libraries

- Python is a popular choice for web scraping due to its simplicity and the availability of powerful libraries.

⦁ Requests: Used for making HTTP requests to the e-commerce platform and receiving responses.

⦁ BeautifulSoup: Used for parsing HTML and extracting data from static pages.

⦁ Selenium: Useful for scraping dynamic pages where content is loaded using JavaScript.

⦁ Scrapy: A comprehensive web scraping framework in Python for handling large-scale scraping projects, offering features like crawling, data extraction, and exporting scraped data.

- Java can be used for more complex and high-performance applications, especially when scalability is a concern. Libraries like Jsoup (for HTML parsing) and Selenium can be used similarly to Python.
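As a rough illustration of the extraction step these libraries perform, the sketch below pulls titles and prices out of a static HTML snippet. It deliberately uses the standard library's `html.parser` in place of BeautifulSoup so it runs without extra installs. The markup, the class names (`product`, `title`, `price`), and the names `ProductParser` and `parse_products` are assumptions made for the example, not the structure of any real platform.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects title and price text from a hypothetical listing markup."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished product dicts
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if tag == "div" and css_class == "product":
            # A new product container starts a new record.
            self.products.append({"title": "", "price": ""})
        elif tag == "span" and css_class in ("title", "price") and self.products:
            self._field = css_class

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        # Only text inside a title or price span is recorded.
        if self._field:
            self.products[-1][self._field] += data.strip()


def parse_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.products


# Invented sample markup; real search pages will differ.
SAMPLE_HTML = """
<div class="product"><span class="title">USB-C Cable</span><span class="price">19.90</span></div>
<div class="product"><span class="title">Phone Case</span><span class="price">35.00</span></div>
"""

if __name__ == "__main__":
    print(parse_products(SAMPLE_HTML))
```

With BeautifulSoup installed, the same extraction would typically be a few lines of CSS-selector lookups; the event-driven parser here only keeps the example dependency-free.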

Web Scraping Workflow

- Request Initialization: The scraper begins by sending HTTP requests to the e-commerce platform's product search page.

- Session Handling: Proxies, rotating session keys (SKs), and ShumeiIds should be used to manage requests and avoid detection.

- Data Extraction: Once the request is made, data is extracted from the page using BeautifulSoup, Scrapy, or Selenium.

- Post-Processing: The extracted data should be cleaned and formatted into a structured form (e.g., JSON, CSV, or database entries).

- API Endpoint: The processed data is then exposed via an API endpoint, allowing external systems to request and retrieve the data.
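The workflow above can be sketched as a small pipeline. This is a minimal outline under stated assumptions: `fetch_search_page` is a stand-in that returns canned rows instead of contacting a site, and the field names and the `CNY` price prefix are invented for illustration.

```python
import json


def fetch_search_page(keyword):
    """Stand-in for the request and extraction steps; returns canned rows."""
    return [
        {"title": "  Wireless Mouse ", "price": "CNY 59.00"},
        {"title": "Wireless Mouse Pro", "price": "CNY 129.50"},
    ]


def clean_record(raw):
    """Post-processing: trim whitespace and convert the price string to a float."""
    return {
        "title": raw["title"].strip(),
        "price": float(raw["price"].replace("CNY", "").strip()),
    }


def run_pipeline(keyword, fetch=fetch_search_page):
    """Request -> extract -> post-process -> structured payload for the API layer."""
    raw_rows = fetch(keyword)
    products = [clean_record(row) for row in raw_rows]
    return {"keyword": keyword, "count": len(products), "products": products}


if __name__ == "__main__":
    print(json.dumps(run_pipeline("wireless mouse"), indent=2))
```

Passing the fetch step in as a parameter keeps the cleaning logic testable without network access and lets a Requests-, Scrapy-, or Selenium-based fetcher be swapped in later.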

Handling Anti-Scraping Measures

To ensure successful scraping while handling anti-scraping mechanisms, the following strategies must be employed:

- Proxies: A proxy pool can be used to avoid IP-based blocking. Proxies act as intermediaries that make requests for the scraping system, allowing it to mask its actual IP address and bypass rate limits. Proxy providers like ScraperAPI, ProxyMesh, or custom solutions can rotate IPs automatically.

- Rotating Session Keys (SKs): Many e-commerce platforms use session keys (SKs) to track user activity. To avoid session-based blocking, the scraper should regularly rotate session keys, creating new keys for each scraping session. This will prevent the platform from detecting abnormal request patterns from a single session.

- ShumeiIds: ShumeiIds are unique identifiers associated with each user session on some e-commerce platforms. These IDs can be rotated or changed frequently to avoid detection. Using ShumeiIds ensures the scraper can mimic user sessions effectively.

- CAPTCHA Handling: Services like 2Captcha or AntiCaptcha can be integrated into the scraping process to bypass CAPTCHAs. These services automatically solve CAPTCHAs in real time by employing human workers or AI algorithms, allowing the scraper to continue fetching data without manual intervention.

- User-Agent Rotation: E-commerce platforms often detect scraping attempts based on the user-agent header, which identifies the browser making the request. By rotating user-agent strings (i.e., the browser type) with each request, the scraper can disguise itself as different users accessing the website.
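A minimal sketch of how proxy, user-agent, and session-key rotation might be combined into one per-request profile. How real session keys or ShumeiIds are issued is platform-specific and not covered here, so a random token stands in for them. The proxy addresses, user-agent strings, the `X-Session-Key` header name, and the class `RequestProfileRotator` are all hypothetical.

```python
import itertools
import random
import uuid

# Placeholder values; real proxies and user-agent strings would come from configuration.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/2.0",
]


class RequestProfileRotator:
    """Hands out a proxy, a user agent, and a session key for each request."""

    def __init__(self, proxies, user_agents, requests_per_session=5, rng=None):
        self._proxies = itertools.cycle(proxies)   # round-robin over the pool
        self._user_agents = list(user_agents)
        self._limit = requests_per_session
        self._rng = rng or random.Random()
        self._used = 0
        self._session_key = self._new_session_key()

    @staticmethod
    def _new_session_key():
        # Assumption: a random token stands in for a platform-issued key.
        return uuid.uuid4().hex

    def next_profile(self):
        # Start a fresh session once the current key has served its quota.
        if self._used >= self._limit:
            self._session_key = self._new_session_key()
            self._used = 0
        self._used += 1
        return {
            "proxy": next(self._proxies),
            "headers": {
                "User-Agent": self._rng.choice(self._user_agents),
                "X-Session-Key": self._session_key,  # hypothetical header name
            },
        }
```

With the Requests library, a profile could then be applied as `requests.get(url, headers=profile["headers"], proxies={"http": profile["proxy"], "https": profile["proxy"]})`.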

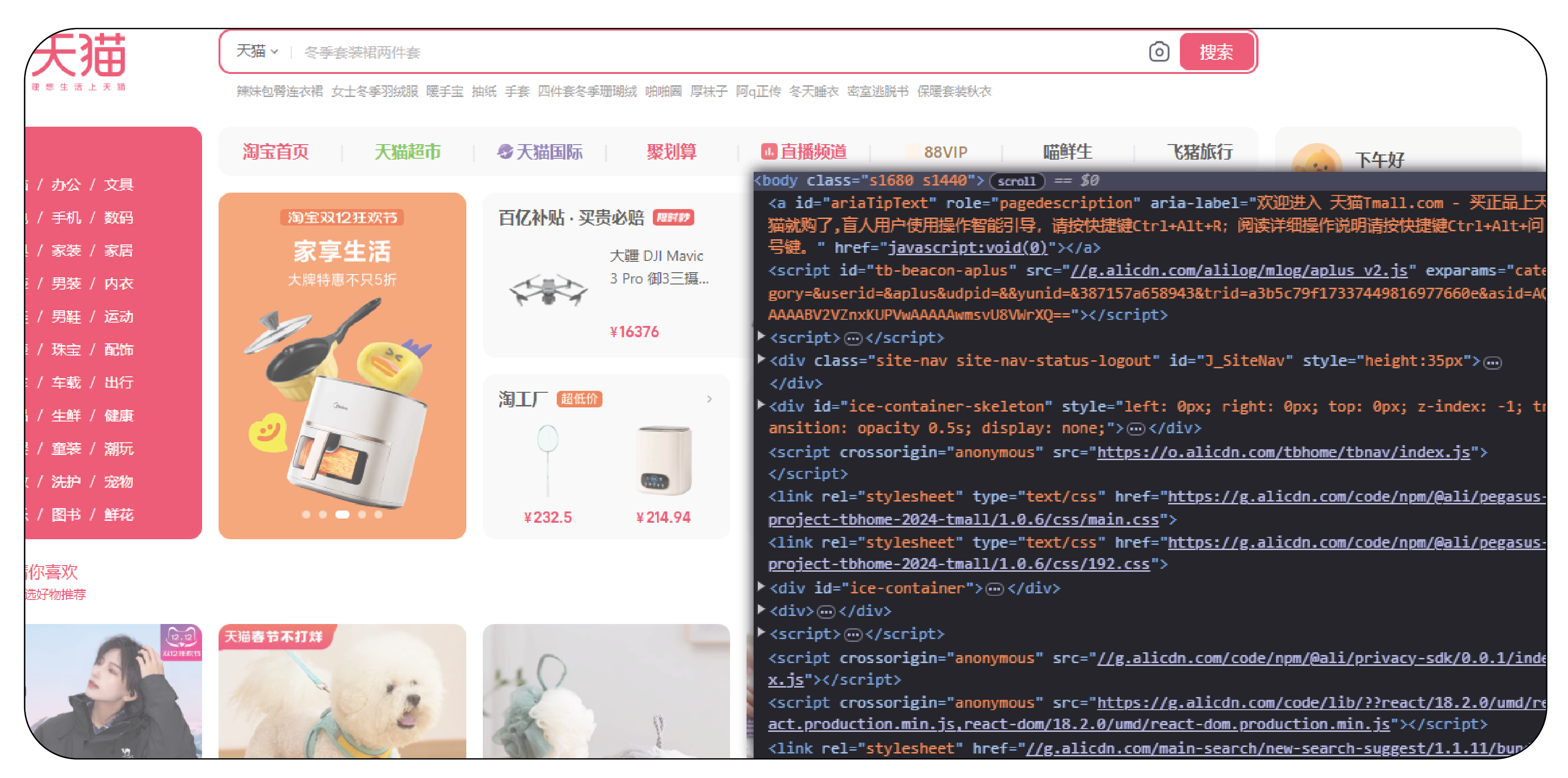

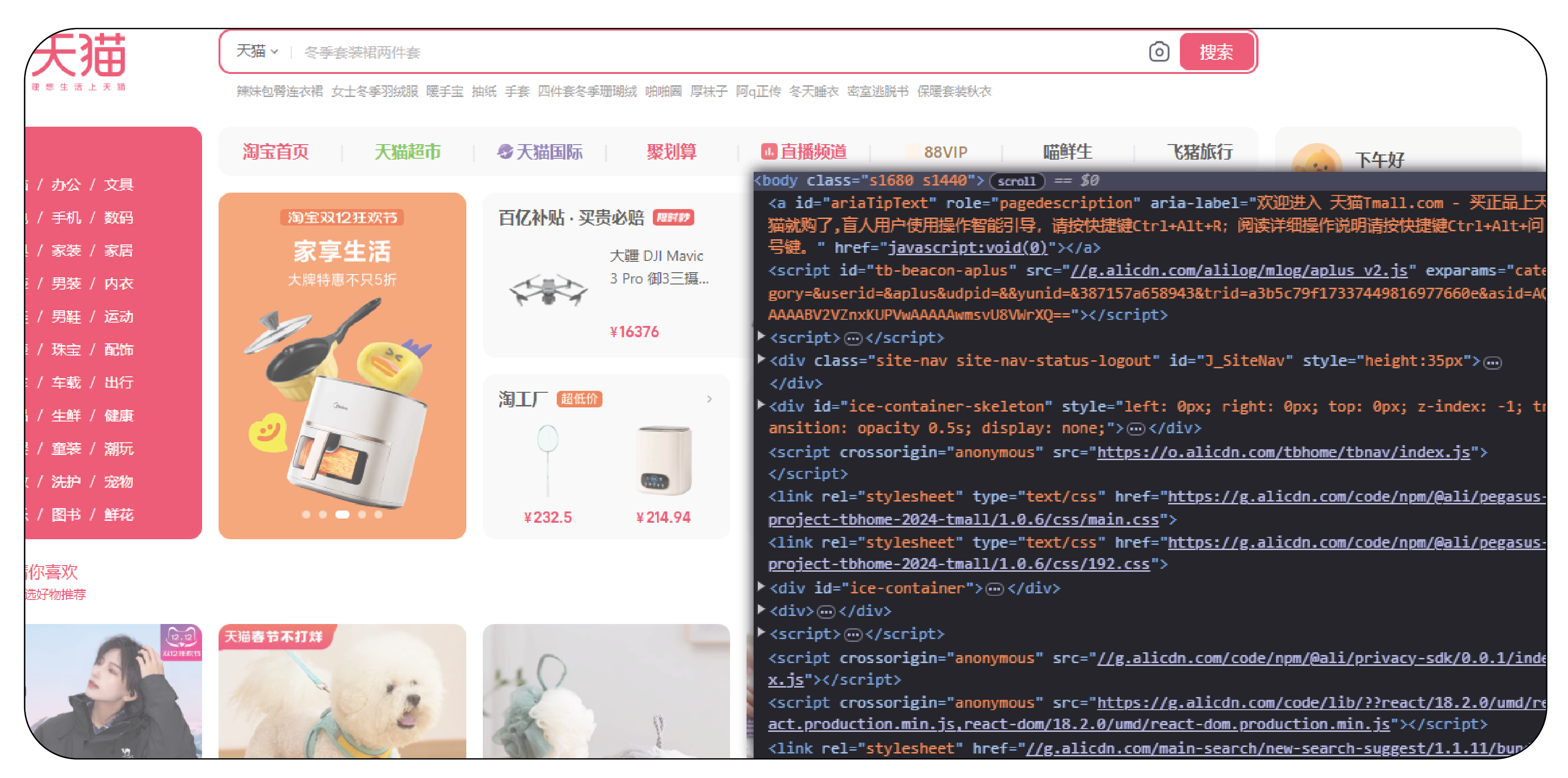

Handling Dynamic Content and JavaScript

Many e-commerce platforms render their product listings dynamically using JavaScript. Basic HTTP requests won't suffice in these cases, because the product data is not immediately available in the raw HTML; a tool that can execute JavaScript is needed.

- Selenium is a powerful tool for handling JavaScript-heavy websites. It simulates real browser interactions, allowing the scraper to render pages and extract content once JavaScript has loaded the data.

- Headless Browsers: Tools like Puppeteer (for Node.js) or Selenium WebDriver can run in headless mode (without a visible browser window) to speed up the scraping process while still rendering JavaScript content.
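A rough sketch of rendering a page with headless Chrome through Selenium and waiting for the listings to appear before reading the HTML. It assumes Selenium 4 and a local Chrome installation; the function name `render_page` and its parameters are illustrative, and the CSS selector to wait for depends entirely on the target page. The `--headless=new` flag applies to recent Chrome versions; older ones use `--headless`.

```python
def render_page(url, wait_css, timeout=10):
    """Return the page HTML after an element matching wait_css has appeared."""
    # Imported here so the module still loads where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has inserted at least one matching element.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_css))
        )
        return driver.page_source
    finally:
        driver.quit()  # always release the browser process
```

The returned HTML can then be handed to the same parsing step used for static pages.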

Delivering Results via an API

Once the data has been scraped, it should be exposed through an API, allowing users to query the results in a structured format. Here's how to build the API:

API Design

- Endpoints: Define clear API endpoints for different functionalities, such as fetching product search results by category, keyword, or price range.

⦁ GET /api/products?category={category}&keyword={keyword}

⦁ GET /api/products/{product_id}

- Rate Limiting: Implement rate limiting in the API to avoid overwhelming the system and to mimic human-like query patterns.

- Authentication: Use API keys or OAuth to authenticate and authorize users who access the data. This will ensure that only authorized users can retrieve scraped data.
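The authentication and rate-limiting checks could look roughly like the sketch below, which uses a sliding-window counter per API key. The key `demo-key-123`, the class `SlidingWindowLimiter`, and the function `check_request` are hypothetical, and the status codes 401 and 429 are the conventional HTTP choices for these two cases rather than something the design above prescribes.

```python
import time
from collections import defaultdict, deque

VALID_API_KEYS = {"demo-key-123"}  # hypothetical; real keys belong in a secrets store


class SlidingWindowLimiter:
    """Allows at most max_requests per window_seconds for each API key."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits = defaultdict(deque)  # api_key -> timestamps of recent requests

    def allow(self, api_key):
        now = self._clock()
        hits = self._hits[api_key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def check_request(api_key, limiter):
    """Return an HTTP-style status code for the auth and rate-limit checks."""
    if api_key not in VALID_API_KEYS:
        return 401  # Unauthorized
    if not limiter.allow(api_key):
        return 429  # Too Many Requests
    return 200
```

In a multi-server deployment the per-key counters would need to live in shared storage rather than process memory.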

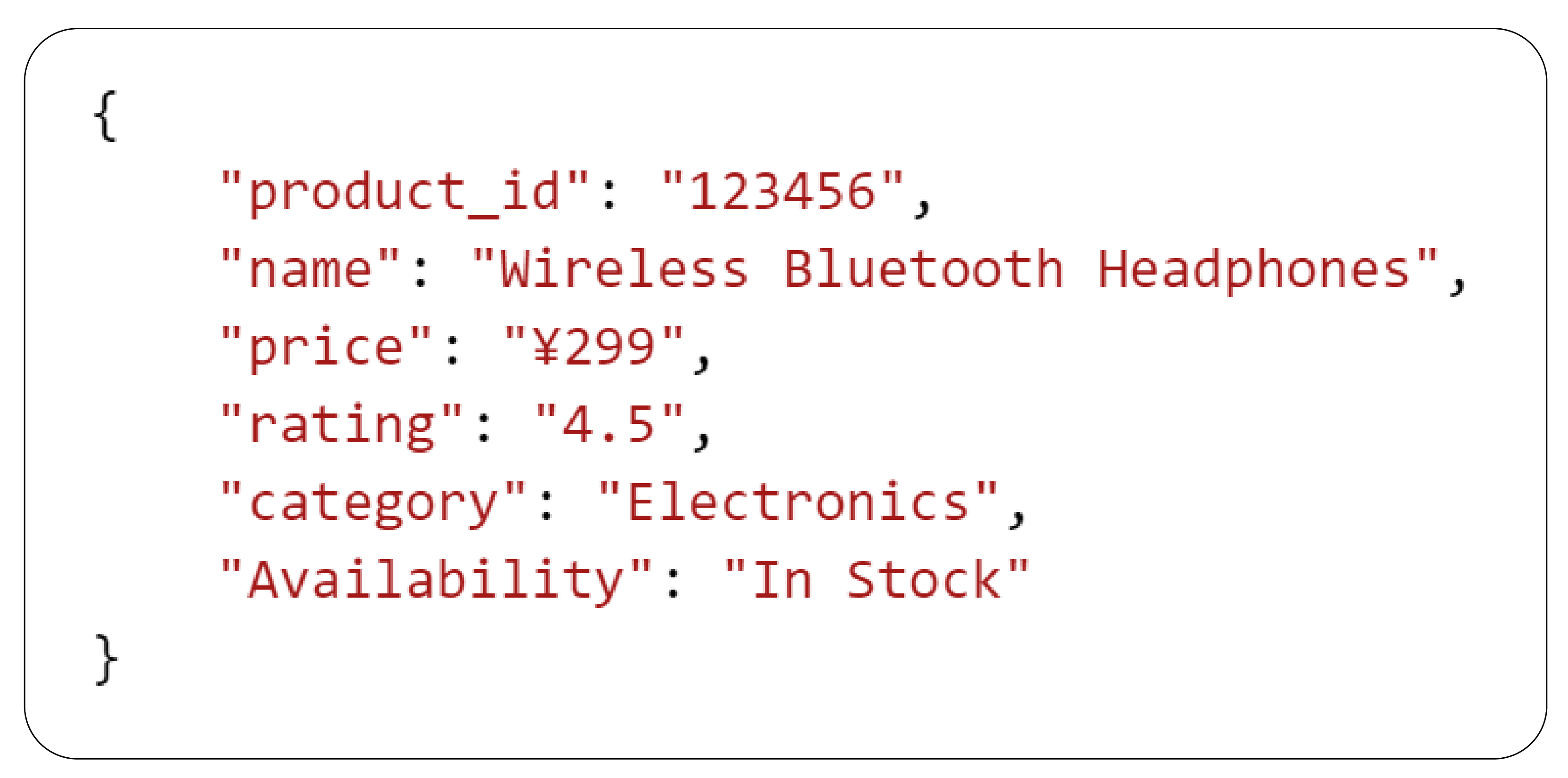

Data Format and Response

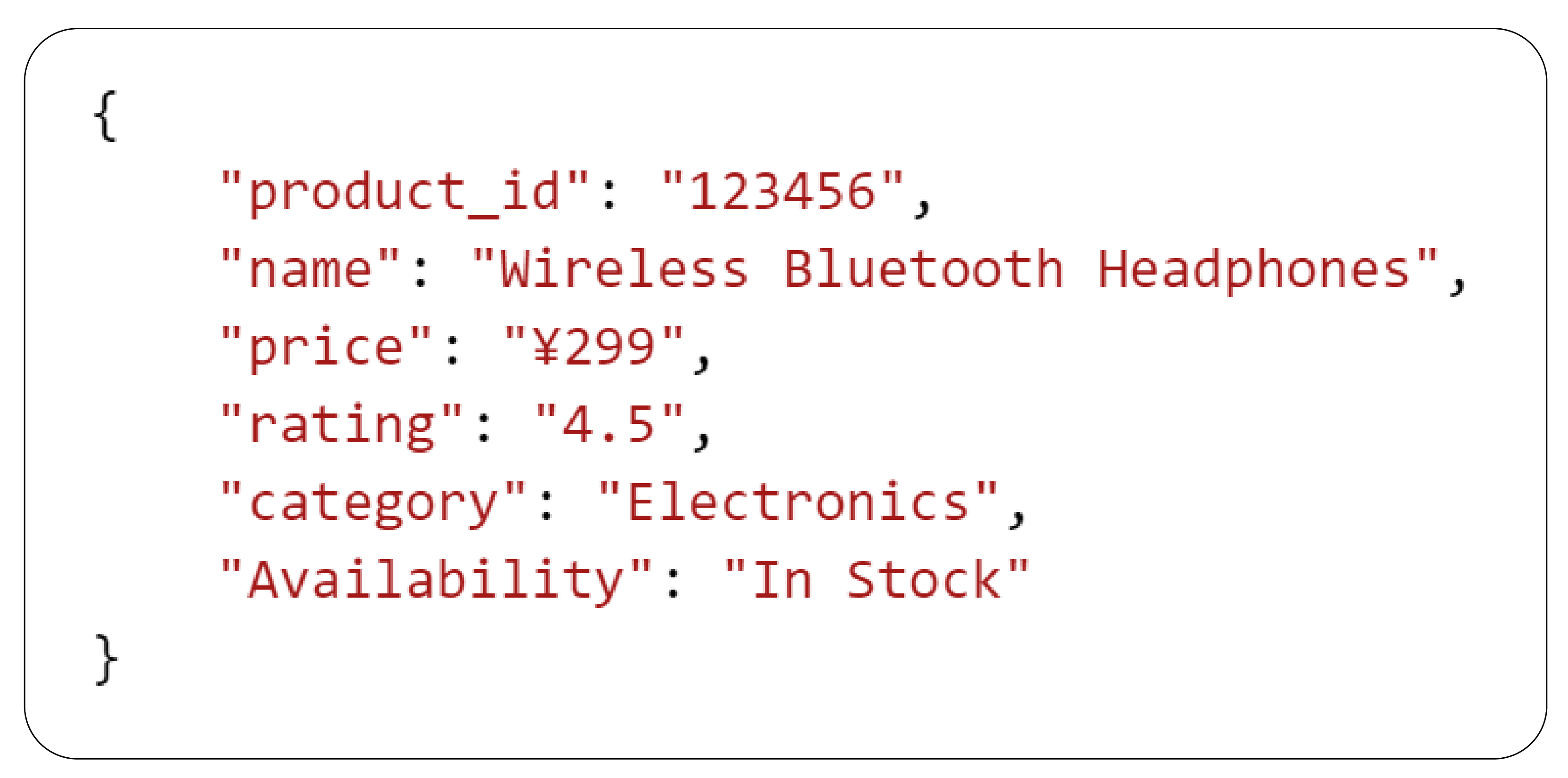

- JSON: JSON is the most common data format used for APIs. A response might look like this:

- Error Handling: Ensure proper error handling for failed requests or invalid inputs. Standard error codes like 400 (Bad Request), 404 (Not Found), and 500 (Internal Server Error) should be returned with a clear message.
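To make the response format and error codes concrete, here is a framework-free sketch that routes the two endpoints listed earlier and returns a status code with a JSON body. The records in `PRODUCTS`, the field names, and the rule that a search needs at least one filter are assumptions for illustration; a production service would normally sit behind a web framework.

```python
import json
from urllib.parse import parse_qs, urlparse

# Hypothetical in-memory records standing in for stored scrape results.
PRODUCTS = {
    "1001": {"product_id": "1001", "title": "Wireless Mouse", "category": "electronics", "price": 59.0},
    "1002": {"product_id": "1002", "title": "Canvas Tote Bag", "category": "fashion", "price": 35.0},
}


def handle_get(url):
    """Route a GET URL to a (status_code, json_body) pair."""
    parsed = urlparse(url)
    parts = [p for p in parsed.path.split("/") if p]
    if parts[:2] != ["api", "products"] or len(parts) > 3:
        return 404, json.dumps({"error": "Not Found"})
    if len(parts) == 3:  # GET /api/products/{product_id}
        product = PRODUCTS.get(parts[2])
        if product is None:
            return 404, json.dumps({"error": "Product not found"})
        return 200, json.dumps(product)
    # GET /api/products?category=...&keyword=...
    query = parse_qs(parsed.query)
    category = query.get("category", [None])[0]
    keyword = query.get("keyword", [None])[0]
    if category is None and keyword is None:
        # Assumed rule: a search must name at least one filter.
        return 400, json.dumps({"error": "Provide category or keyword"})
    results = [
        p for p in PRODUCTS.values()
        if (category is None or p["category"] == category)
        and (keyword is None or keyword.lower() in p["title"].lower())
    ]
    return 200, json.dumps({"count": len(results), "products": results})
```

Unexpected exceptions raised inside such a handler would be caught by the surrounding server layer and reported as 500 (Internal Server Error).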

Scalability and Performance

Handling large-scale scraping efficiently requires managing both performance and scalability. Consider the following strategies:

- Distributed Scraping: Distributing the scraping tasks across different machines using multiple servers or containers can improve scalability. Technologies like Docker or Kubernetes can be used to deploy and manage scraping services.

- Caching: Implement caching mechanisms (e.g., using Redis or Memcached) to store frequently accessed data and reduce redundant scraping requests.

- Error Handling and Retry Logic: Implement retry logic in case of failed requests to ensure stability. This will handle temporary failures like network issues, rate limits, or CAPTCHAs.
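Caching and retry logic can be combined in one fetch wrapper, sketched below. `TTLCache` is a tiny in-process stand-in for Redis or Memcached, and `fetch_with_retry`, its parameters, and the choice of `ConnectionError` as the retryable failure are assumptions made for the example.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for Redis or Memcached with per-entry expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None or entry[0] <= self._clock():
            self._store.pop(key, None)  # discard missing or expired entries
            return None
        return entry[1]

    def set(self, key, value):
        self._store[key] = (self._clock() + self._ttl, value)


def fetch_with_retry(fetch, keyword, cache, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Return cached results when fresh; otherwise call fetch with exponential backoff."""
    cached = cache.get(keyword)
    if cached is not None:
        return cached
    for attempt in range(attempts):
        try:
            result = fetch(keyword)
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            sleep(base_delay * 2 ** attempt)  # wait 1s, 2s, 4s, ... between tries
        else:
            cache.set(keyword, result)
            return result
```

Doubling the delay after each failure gives a rate-limited or briefly unavailable source time to recover instead of repeating the request immediately.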

Conclusion

Building a robust and efficient web scraping API for extracting product search results from e-commerce platforms involves navigating complex anti-scraping mechanisms and combining several tools and techniques. By incorporating proxies, rotating session keys, and ShumeiIds, and by using libraries like Selenium and Requests, you can scrape data successfully while maintaining anonymity and avoiding detection. Exposing the scraped data via an API enables external applications to interact with it in real time, making it a valuable resource for businesses. As the demand for data-driven insights continues to grow, scraping e-commerce platforms presents an opportunity to tap into one of the largest e-commerce markets in the world. The resulting datasets give businesses the raw material for optimizing pricing strategies, analyzing competitors, and tracking market trends.

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.
