Web scraping, the process of collecting data from websites, has become vital for businesses and individuals seeking valuable information. While it offers numerous benefits, it also raises ethical and legal considerations that must be navigated carefully. Understanding the dos and don'ts of web scraping helps keep your activities both effective and compliant. Whether you use website data scraping services or run your own scraper, adhering to best practices is crucial to avoid pitfalls and legal issues. These practices include respecting website policies, managing request rates, and avoiding the collection of sensitive data. By following these guidelines, you can use web scraping to gain insights and drive decisions without violating ethical standards or legal requirements.

Dos of Web Scraping

Understanding the dos of web scraping, including when you use Pricing Strategy and ECommerce Product Data Scraping Services, is essential for anyone looking to collect data from websites ethically and effectively. These best practices help keep your activities legal, respectful of website policies, and efficient.

- Do Understand the Legal Landscape: Before you start scraping website data, it's essential to understand the legal implications. Different jurisdictions have varying laws regarding web scraping, and some websites explicitly prohibit it in their terms of service. Review the terms and conditions of the websites you intend to scrape to avoid legal issues. If you are unsure whether scraping a site is legal, consult a legal expert.

- Do Respect Robots.txt Files: A robots.txt file is a standard file that websites use to communicate with web crawlers and data scrapers. It specifies which parts of the site should not be crawled. Ignoring its directives can lead to blocking or legal action from the website owner, so always check and adhere to the robots.txt file before collecting website data.

- Do Use Appropriate Request Rates: Sending too many requests to a website in a short period can overload the server, degrading the website's performance and potentially getting you banned. Apply rate limiting and add delays between requests to keep the load reasonable. This practice ensures smoother operations and reduces the risk of getting blocked.

- Do Use Proxies and Rotate IPs: Proxies and rotating IP addresses are standard in web data extraction to avoid detection and ensure anonymity. They distribute requests across multiple IPs, reducing the chances of being blocked by the target website. Use reliable proxy services to maintain a consistent and efficient process.

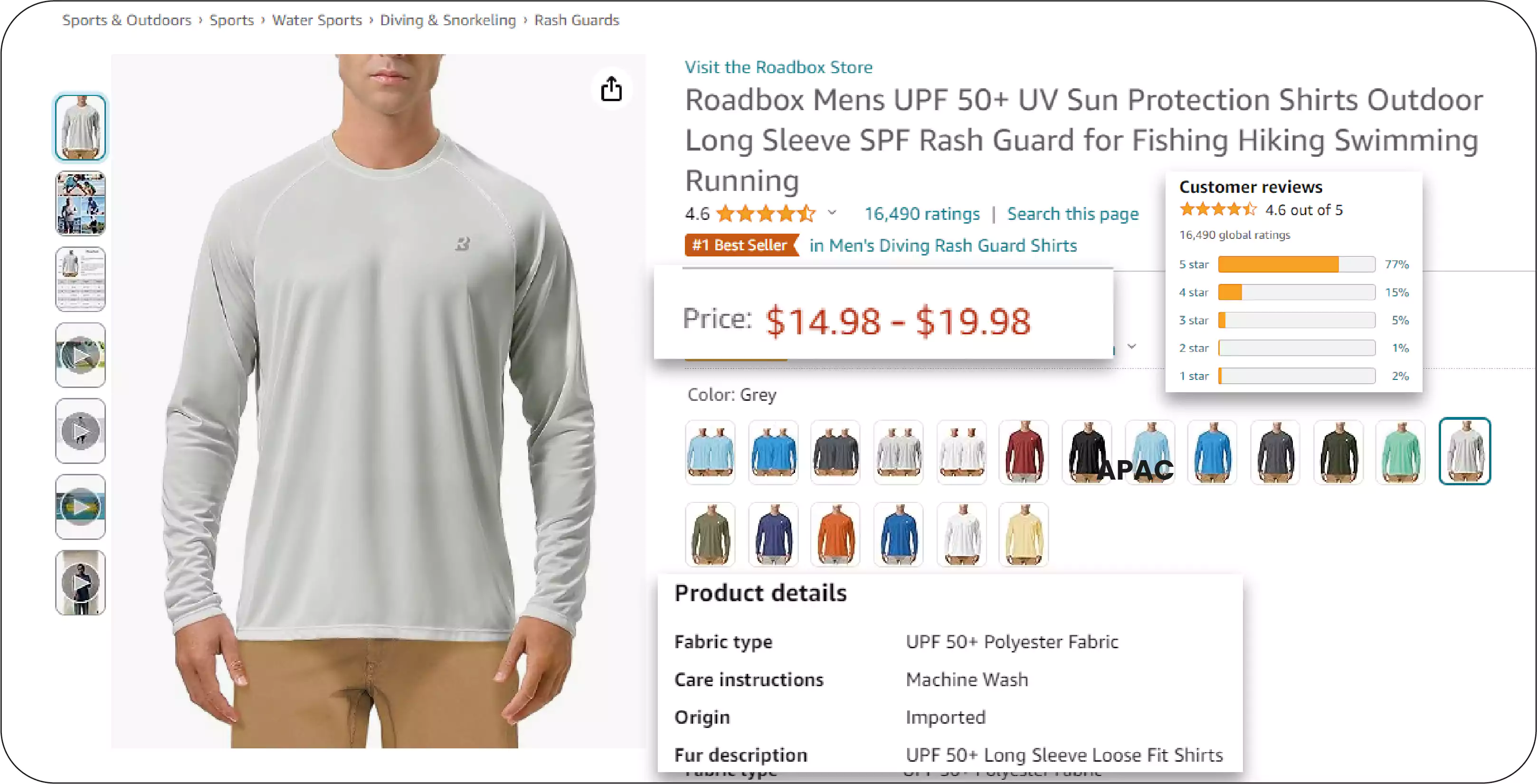
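To make the robots.txt and request-rate points above more concrete, here is a minimal Python sketch using only the standard library. The names `build_robots_parser`, `RateLimiter`, and `may_fetch`, the user agent `example-bot`, and the `example.com` URLs are hypothetical and chosen for illustration; a suitable delay depends on the target site, so treat any value you pass in as an assumption rather than a recommendation.

```python
import time
from urllib import robotparser


def build_robots_parser(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_delay_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay_seconds
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Sleep just long enough to honor the delay; return seconds slept."""
        slept = 0.0
        if self._last_request is not None:
            remaining = self.min_delay - (self._clock() - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last_request = self._clock()
        return slept


def may_fetch(parser, limiter, user_agent, url):
    """Return False if robots.txt disallows the URL; otherwise pause, then return True."""
    if not parser.can_fetch(user_agent, url):
        return False
    limiter.wait()
    return True
```

A scraper would call `may_fetch(...)` before each request and skip any URL for which it returns `False`. Keeping the robots.txt check and the delay in one gate makes it harder to bypass either by accident.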

- Do Clean and Validate Data: After extracting website data, clean and validate it. This process involves removing duplicates, handling missing values, and ensuring the data is in the correct format. Clean and validated data is crucial for accurate analysis and decision-making.

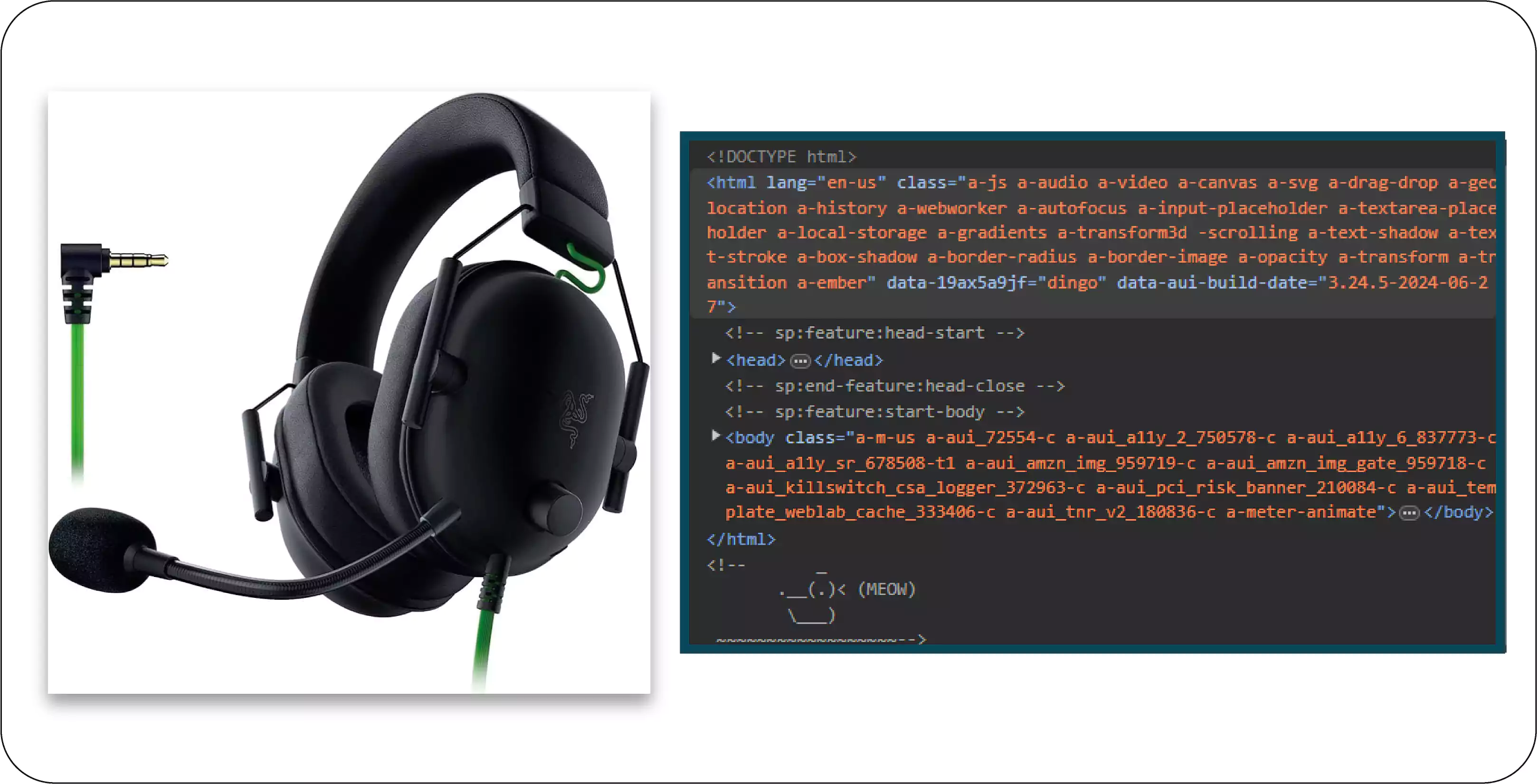
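The cleaning steps described above (removing duplicates, handling missing values, checking formats) could look roughly like the following Python sketch. The function name `clean_records`, the field names `url` and `price`, and the rule that a price must be a non-negative number are all assumptions made for the example; real datasets will need their own rules.

```python
def clean_records(records, key_field="url", required=("url", "price")):
    """Drop duplicate and incomplete rows, and normalize the price format."""
    seen = set()
    cleaned = []
    for record in records:
        # Handle missing values: skip rows lacking any required field.
        if any(record.get(name) in (None, "") for name in required):
            continue
        # Remove duplicates, comparing keys case-insensitively without padding.
        key_value = str(record[key_field]).strip()
        if key_value.lower() in seen:
            continue
        # Validate format: the price must parse as a non-negative number.
        raw_price = str(record["price"]).replace("$", "").replace(",", "").strip()
        try:
            price = float(raw_price)
        except ValueError:
            continue
        if price < 0:
            continue
        seen.add(key_value.lower())
        cleaned.append({**record, key_field: key_value, "price": price})
    return cleaned
```

This sketch silently drops rows it cannot use. In practice you may prefer to collect rejected rows separately so that data quality problems stay visible.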

- Do Monitor and Respect Changes to Websites: Websites frequently update their structure and content, which can affect your activities. Regularly monitor the target websites for changes and adjust your web data extractor accordingly. Failing to adapt to these changes can result in inaccurate or incomplete data extraction.
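One simple way to notice structural changes is to check that the page still contains the markers your extractor relies on. The Python sketch below assumes the extractor depends on certain CSS class names; `missing_markers` and the class names in the usage note are hypothetical, and this is only one of several possible monitoring approaches.

```python
from html.parser import HTMLParser


class _ClassCollector(HTMLParser):
    """Collect every CSS class name that appears in an HTML document."""

    def __init__(self):
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())


def missing_markers(html, expected_classes):
    """Return the expected class names that no longer appear in the page."""
    collector = _ClassCollector()
    collector.feed(html)
    collector.close()
    return sorted(set(expected_classes) - collector.classes)
```

For example, calling `missing_markers(page_html, ["product-card", "price"])` on each run and alerting when the result is non-empty gives an early signal that the extractor needs adjusting, before incomplete data reaches your analysis.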

Don'ts of Web Scraping

Knowing the don'ts is crucial to avoid legal issues and maintain ethical standards. These guidelines help prevent overloading servers, violating terms of service, and collecting sensitive data, ensuring responsible and compliant practices.

- Don't Scrape Without Permission: Scraping website data without permission can lead to legal consequences and damage your reputation. While some websites openly allow scraping, others strictly prohibit it. Always seek permission from the website owner if the terms of service are unclear or explicitly prohibit extraction. Respecting the website's rules and obtaining consent is crucial for ethical web data collection.

- Don't Ignore Website Policies: Ignoring a website's terms of service or guidelines can lead to bans or legal action. Many websites provide specific guidelines for scraping activities. Ignoring these policies not only risks legal repercussions but also undermines ethical standards. Always adhere to the website's policies and scraping guidelines.

- Don't Overload the Server: Sending a high volume of requests in a short period can overwhelm the target server, causing performance issues or downtime. Websites often detect and block such behavior. Implementing rate limits and respecting the server's capacity is essential to avoid negative impacts on the website's functionality.

- Don't Scrape Sensitive or Personal Data: Scraping sensitive or personal data, such as user information or confidential business details, is unethical and in many cases illegal. Data privacy laws, such as GDPR and CCPA, strictly regulate the collection and use of personal data. Ensure that your web data collection activities comply with privacy regulations, and avoid collecting sensitive information without explicit consent.

- Don't Use Poorly Coded Scrapers: Poorly coded scrapers can result in inefficient data extraction, potential detection, and website blocking. Invest in well-designed, efficient tools or services that follow best practices and ensure accurate and reliable data extraction. Quality tools can significantly improve the efficiency and success rate of your activities.

- Don't Forget to Document Your Process: Documenting your process is essential for maintaining transparency and reproducibility. This includes recording the URLs, the data extracted, the scraping frequency, and any issues encountered. Proper documentation helps with troubleshooting, maintaining consistency, and ensuring compliance with legal and ethical standards.
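The documentation point above can be partly automated. The following Python sketch appends one record per scraping run to a JSON Lines file, covering the items listed: URL, data extracted, frequency, and issues. The names `ScrapeRun`, `append_run`, and `read_runs`, and the choice of JSON Lines as the log format, are assumptions for illustration rather than a prescribed approach.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ScrapeRun:
    """One documented scraping run."""
    url: str
    fields_extracted: list
    frequency: str
    issues: list = field(default_factory=list)
    # Record when the run happened, in UTC, as an ISO 8601 string.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_run(log_path, run):
    """Append one run to the log as a single JSON line."""
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(asdict(run)) + "\n")


def read_runs(log_path):
    """Load every logged run back as a list of dictionaries."""
    with open(log_path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

An append-only log like this keeps a history of what was collected and when, which helps when troubleshooting or when you need to show how the data was gathered.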

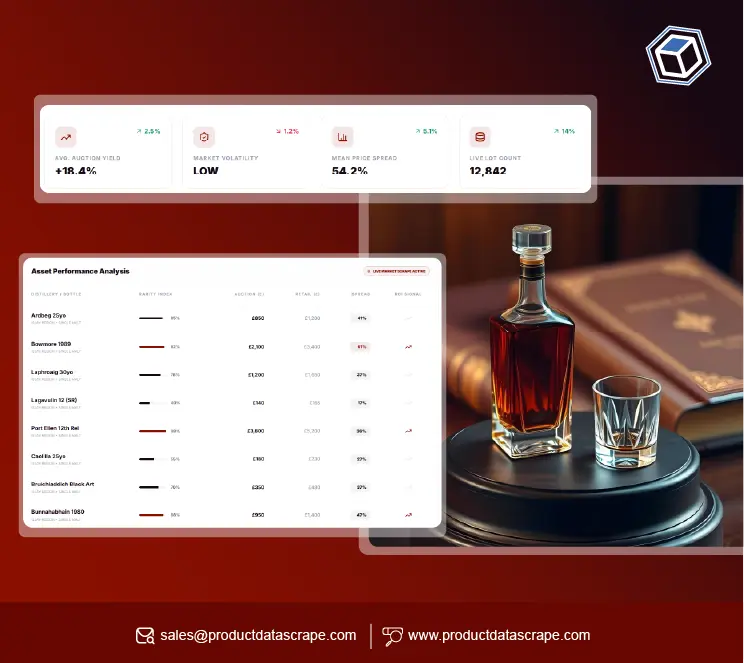

Best Practices for Using Website Data Scraping Services

Adhering to best practices when using website data scraping services ensures accurate, ethical, and compliant data extraction. Selecting reputable providers, clearly communicating requirements, and regularly reviewing the data are critical steps to achieving an effective and responsible process.

Choose Reputable Services: When opting for professional services, select reputable providers known for ethical practices and high-quality tools. Reputable services ensure compliance with legal standards and offer reliable support. Research and review service providers before making a decision.

Communicate Requirements Clearly: Explain your requirements and objectives to the service provider. This includes specifying the target websites, the type of data needed, and the scraping frequency. Clear communication ensures the service provider understands your needs and delivers accurate results.

Monitor and Review the Data: Regularly monitor and review the data provided by website data scraping services. This ensures the extracted data meets your quality standards and objectives. Promptly address discrepancies or issues with the service provider to maintain data accuracy.

Conclusion: Web scraping is a powerful technique for extracting valuable data from websites, but it must be approached with caution and respect for legal and ethical standards. Following the dos and don'ts helps ensure that your activities are effective, ethical, and compliant. Whether using a web data extraction tool or relying on professional services, adhering to best practices is essential for successful and responsible data extraction. As the digital landscape evolves, staying informed and compliant will help you leverage the full potential of web scraping while maintaining integrity and legality.

At Product Data Scrape, ethical principles are central to our operations. Whether it's Competitor Price Monitoring Services or Mobile App Data Scraping, transparency and integrity define our approach. With offices spanning multiple locations, we offer customized solutions, striving to surpass client expectations and foster success in data analytics.
